Math Is Fun Forum

#1926 2023-10-10 00:23:09

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1930) Biometry

Gist

Biometry is the process of measuring the power of the cornea (keratometry) and the length of the eye, and using this data to determine the ideal intraocular lens power. If this calculation is not performed, or if it is inaccurate, then patients may be left with a significant refractive error.

Summary

The terms “Biometrics” and “Biometry” have been used since early in the 20th century to refer to the field of development of statistical and mathematical methods applicable to data analysis problems in the biological sciences.

Statistical methods for the analysis of data from agricultural field experiments to compare the yields of different varieties of wheat, for the analysis of data from human clinical trials evaluating the relative effectiveness of competing therapies for disease, or for the analysis of data from environmental studies on the effects of air or water pollution on the appearance of human disease in a region or country are all examples of problems that would fall under the umbrella of “Biometrics” as the term has been historically used.

The journal “Biometrics” is a scholarly publication sponsored by a non-profit professional society (the International Biometric Society) devoted to the dissemination of accounts of the development of such methods and their application in real scientific contexts.

The term “Biometrics” has also been used to refer to the field of technology devoted to the identification of individuals using biological traits, such as those based on retinal or iris scanning, fingerprints, or face recognition. Neither the journal “Biometrics” nor the International Biometric Society is engaged in research, marketing, or reporting related to this technology. Likewise, the editors and staff of the journal are not knowledgeable in this area.

Details

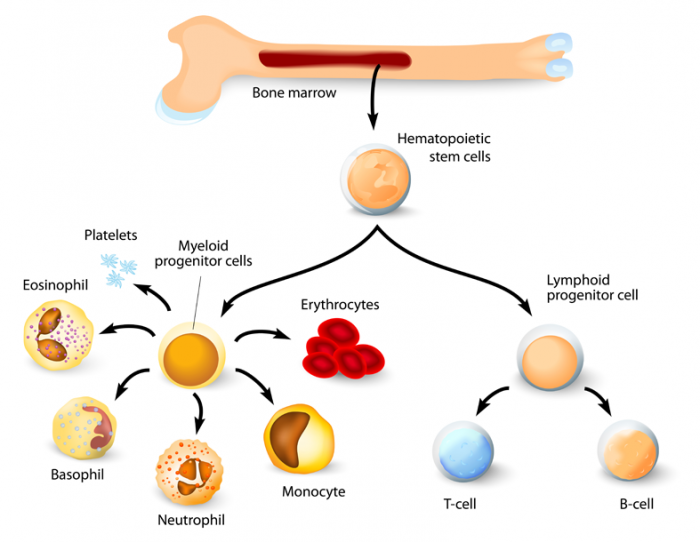

Biostatistics (also known as biometry) is a branch of statistics that applies statistical methods to a wide range of topics in biology. It encompasses the design of biological experiments, the collection and analysis of data from those experiments and the interpretation of the results.

History:

Biostatistics and genetics

Biostatistical modeling forms an important part of numerous modern biological theories. Since its beginnings, genetics has used statistical concepts to understand observed experimental results, and some geneticists contributed statistical advances by developing methods and tools. Gregor Mendel began the study of genetics by investigating segregation patterns in families of peas, and he used statistics to explain the data he collected. In the early 1900s, after the rediscovery of Mendel's work on inheritance, there were gaps in understanding between genetics and evolutionary Darwinism. Francis Galton tried to expand Mendel's discoveries with human data and proposed a different model in which fractions of heredity come from each ancestor, composing an infinite series. He called this the "Law of Ancestral Heredity". His ideas were strongly opposed by William Bateson, who followed Mendel's conclusion that genetic inheritance comes exclusively from the parents, half from each of them. This led to a vigorous debate between the biometricians, who supported Galton's ideas, such as Raphael Weldon, Arthur Dukinfield Darbishire and Karl Pearson, and the Mendelians, who supported Bateson's (and Mendel's) ideas, such as Charles Davenport and Wilhelm Johannsen. Later, the biometricians could not reproduce Galton's conclusions in different experiments, and Mendel's ideas prevailed. By the 1930s, models built on statistical reasoning had helped to resolve these differences and to produce the neo-Darwinian modern evolutionary synthesis.

Resolving these differences also made it possible to define the concept of population genetics and brought together genetics and evolution. The three leading figures in the establishment of population genetics and this synthesis all relied on statistics and developed its use in biology.

Ronald Fisher worked alongside statistician Betty Allan to develop several basic statistical methods in support of his work on the crop experiments at Rothamsted Research. These were published in Fisher's books Statistical Methods for Research Workers (1925) and The Genetical Theory of Natural Selection (1930), as well as in Allan's scientific papers. Fisher went on to make many contributions to genetics and statistics, including ANOVA, p-value concepts, Fisher's exact test and Fisher's equation for population dynamics. He is credited with the sentence "Natural selection is a mechanism for generating an exceedingly high degree of improbability".

Sewall G. Wright developed F-statistics and methods of computing them, and defined the inbreeding coefficient.

J. B. S. Haldane's book, The Causes of Evolution, reestablished natural selection as the premier mechanism of evolution by explaining it in terms of the mathematical consequences of Mendelian genetics. He also developed the theory of primordial soup.

These and other biostatisticians, mathematical biologists, and statistically inclined geneticists helped bring together evolutionary biology and genetics into a consistent, coherent whole that could begin to be quantitatively modeled.

In parallel to this overall development, the pioneering work of D'Arcy Thompson in On Growth and Form also helped to add quantitative discipline to biological study.

Despite the fundamental importance and frequent necessity of statistical reasoning, there may nonetheless have been a tendency among biologists to distrust or deprecate results which are not qualitatively apparent. One anecdote describes Thomas Hunt Morgan banning the Friden calculator from his department at Caltech, saying "Well, I am like a guy who is prospecting for gold along the banks of the Sacramento River in 1849. With a little intelligence, I can reach down and pick up big nuggets of gold. And as long as I can do that, I'm not going to let any people in my department waste scarce resources in placer mining."

Research planning

Any research in the life sciences is proposed to answer a scientific question. To answer this question with high certainty, we need accurate results. A correct definition of the main hypothesis and of the research plan reduces errors when drawing conclusions about a phenomenon. The research plan might include the research question, the hypothesis to be tested, the experimental design, data collection methods, data analysis perspectives and the costs involved. It is essential to carry out the study based on the three basic principles of experimental statistics: randomization, replication, and local control.

Research question

The research question defines the objective of a study. Because the research is guided by the question, it needs to be concise while focusing on interesting and novel topics that may improve science and knowledge in that field. Defining how to ask the scientific question may require an exhaustive literature review, so that the research adds value for the scientific community.

Hypothesis definition

Once the aim of the study is defined, the possible answers to the research question can be proposed, transforming this question into a hypothesis. The main proposition is called the null hypothesis (H0) and is usually based on established knowledge about the topic or an obvious occurrence of the phenomenon, supported by a thorough literature review. It can be regarded as the standard expected answer for the data under the situation being tested. In general, H0 assumes no association between treatments. The alternative hypothesis, on the other hand, is the negation of H0. It assumes some degree of association between the treatment and the outcome. Either way, the hypothesis is grounded in the research question and its expected and unexpected answers.

As an example, consider groups of similar animals (mice, for example) under two different diet systems. The research question would be: what is the best diet? In this case, H0 would be that there is no difference between the two diets in their effect on mouse metabolism (H0: μ1 = μ2), and the alternative hypothesis would be that the diets have different effects on the animals' metabolism (H1: μ1 ≠ μ2).

The hypothesis is defined by the researcher, according to his or her interests in answering the main question. In addition, there can be more than one alternative hypothesis. An alternative can assume not only that observed parameters differ, but also the direction of the difference (i.e. higher or lower).
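As a rough illustration of testing H0: μ1 = μ2 for the two-diet example, the sketch below runs an exact two-sided permutation test on the difference of group means. The permutation test is only one of several possible tests and is chosen here because it needs nothing beyond the Python standard library; the function name and the measurements are hypothetical and are not taken from any real study.

```python
from itertools import combinations
from statistics import mean


def permutation_p_value(group_a, group_b):
    """Exact two-sided permutation test for H0: mu1 == mu2.

    Every way of relabelling the pooled values into two groups of the
    original sizes is enumerated, so this is only practical for small samples.
    """
    pooled = list(group_a) + list(group_b)
    n_a = len(group_a)
    n_b = len(pooled) - n_a
    observed = abs(mean(group_a) - mean(group_b))
    total = sum(pooled)

    extreme = 0
    count = 0
    for indices in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in indices)
        diff = abs(sum_a / n_a - (total - sum_a) / n_b)
        # Small tolerance so floating-point noise does not hide ties.
        if diff >= observed - 1e-12:
            extreme += 1
        count += 1
    return extreme / count


# Hypothetical metabolic measurements for mice on two diets.
diet_1 = [1, 2, 3, 4, 5]
diet_2 = [6, 7, 8, 9, 10]
p_value = permutation_p_value(diet_1, diet_2)
print(f"p-value under H0: {p_value:.4f}")
```

A small p-value suggests the data are unlikely under H0, while a large one means the observed difference could easily arise from the random labelling alone.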

Sampling

Usually, a study aims to understand the effect of a phenomenon on a population. In biology, a population is defined as all the individuals of a given species in a specific area at a given time. In biostatistics, this concept is extended to a variety of collections that can be studied. A population in biostatistics is not only the individuals but can also be the total of one specific component of their organisms, such as the whole genome or all the sperm cells of an animal, or the total leaf area of a plant.

It is not possible to take measurements from all the elements of a population. Because of that, the sampling process is very important for statistical inference. Sampling is defined as randomly drawing a representative part of the entire population in order to make later inferences about the population. The sample should therefore capture as much of the variability across the population as possible. The sample size is determined by several factors, from the scope of the research to the resources available. In clinical research, the trial type (non-inferiority, equivalence, or superiority) is a key factor in determining sample size.
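A minimal sketch of simple random sampling, assuming every element of the population can be listed and has the same chance of being drawn. The population of 500 numbered plants, the sample size and the seed are all hypothetical values chosen for illustration.

```python
import random


def simple_random_sample(population, sample_size, seed=None):
    """Draw sample_size distinct elements, each equally likely to be chosen."""
    rng = random.Random(seed)  # a fixed seed makes the draw reproducible
    return rng.sample(list(population), sample_size)


# Hypothetical population: 500 plants identified by number.
plant_ids = range(1, 501)
sample = simple_random_sample(plant_ids, 20, seed=42)
print(sorted(sample))
```

Real studies often use stratified or cluster sampling instead, when a purely random draw would miss part of the variability in the population.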

Experimental design

Experimental designs uphold the basic principles of experimental statistics. There are three basic experimental designs for randomly allocating treatments to all plots of the experiment: the completely randomized design, the randomized block design, and factorial designs. Treatments can be arranged in many ways inside the experiment. In agriculture, the correct experimental design is the root of a good study, and the arrangement of treatments within the study is essential because the environment largely affects the plots (plants, livestock, microorganisms). These main arrangements can be found in the literature under the names of "lattices", "incomplete blocks", "split plot", "augmented blocks", and many others. All of the designs might include control plots, determined by the researcher, to provide an error estimate during inference.
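The randomized block design mentioned above can be sketched as follows: each block receives every treatment exactly once, and the order of treatments is shuffled independently within each block. The treatment labels, the number of blocks and the seed are hypothetical.

```python
import random


def randomized_block_design(treatments, n_blocks, seed=None):
    """Return one randomly ordered copy of the treatments per block."""
    rng = random.Random(seed)
    layout = []
    for _ in range(n_blocks):
        block = list(treatments)
        rng.shuffle(block)  # randomization within the block
        layout.append(block)
    return layout


# Hypothetical experiment: three varieties plus a control, in three blocks.
treatments = ["A", "B", "C", "control"]
layout = randomized_block_design(treatments, n_blocks=3, seed=1)
for number, block in enumerate(layout, start=1):
    print(f"block {number}: {block}")
```

Blocking provides the local control, the repeated blocks provide the replication, and the shuffle provides the randomization.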

In clinical studies, the samples are usually smaller than in other biological studies, and in most cases, the environment effect can be controlled or measured. It is common to use randomized controlled clinical trials, where results are usually compared with observational study designs such as case–control or cohort.

Data collection

Data collection methods must be considered in research planning, because they strongly influence the sample size and experimental design.

Data collection varies according to the type of data. For qualitative data, collection can be done with structured questionnaires or by observation, considering the presence or intensity of disease and using scoring criteria to categorize levels of occurrence. For quantitative data, collection is done by measuring numerical information using instruments.

In agriculture and biology studies, yield data and its components can be obtained by metric measures. However, pest and disease injuries in plants are assessed by observation, using score scales for levels of damage. In genetic studies especially, modern methods for data collection in the field and laboratory should be considered, such as high-throughput platforms for phenotyping and genotyping. These tools allow bigger experiments and make it possible to evaluate many plots in less time than a purely human-based method of data collection. Finally, all collected data of interest must be stored in an organized data frame for further analysis.

Additional Information

Biometry is a test to measure the dimensions of the eyeball. These include the curvature of the cornea and the length of the eyeball.

A modern biometry machine commonly uses laser interferometry to measure the length of the eyeball with very high precision. However, some very mature cataracts can be difficult to measure with this machine, and a more traditional A-scan ultrasound method may need to be used.

How to perform the test?

The test is performed with the patient resting his or her chin on the chin-rest of the machine. Patients are required to look at a target light and keep their heads very still. The test takes only 5 minutes to complete. However, in patients with more mature cataracts, more measurements must be taken to ensure accuracy, so the test may take longer than usual.

Patients with very mature cataracts will need to undergo another test, in which an ultrasound scan probe gently touches the front of the eye for a few seconds.

How to calculate the lens implant power?

The data from the biometry results are fed into several lens implant power calculation formulae. The most common formulae include the SRK/T, Hoffer Q, and Haigis.
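The formulae named above are fairly elaborate, so the sketch below uses the older, first-generation SRK regression formula instead, P = A - 2.5 L - 0.9 K, purely to show how axial length and corneal power feed into a lens power estimate. It is not the SRK/T, Hoffer Q or Haigis formula, and the A-constant and measurements are hypothetical illustrative values rather than data for any particular lens or patient.

```python
def srk_lens_power(a_constant, axial_length_mm, mean_keratometry_d):
    """First-generation SRK regression formula for emmetropia.

    a_constant          lens-specific constant supplied by the manufacturer
    axial_length_mm     length of the eye in millimetres (L)
    mean_keratometry_d  average corneal power in diopters (K)
    """
    return a_constant - 2.5 * axial_length_mm - 0.9 * mean_keratometry_d


# Hypothetical values, for illustration only.
power = srk_lens_power(a_constant=118.4, axial_length_mm=23.5, mean_keratometry_d=43.5)
print(f"estimated lens power: {power:.2f} D")
```

The coefficients show why an accurate axial length matters: under this formula, an error of 1 mm in the measured length shifts the calculated power by 2.5 diopters.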

The results will be assessed by the surgeons to decide on the artificial lens power that can produce the target refraction (glasses prescription) after the operation.

Do I need to wear glasses after a cataract operation?

In most cataract operations, the surgeon aims for the patient to focus at distance (emmetropia). Most patients should therefore be able to walk about or even drive without glasses. A standard lens implant cannot change focus, so patients will need reading glasses for reading unless they opt for a multifocal lens implant.

Some patients may want to remain short-sighted, reading without glasses but wearing glasses for distance. Some patients may even want to keep one eye focused for distance and one eye focused for near. These options must be discussed with the surgeon before surgery, so that an agreement can be reached on the desired resulting refraction.

Patients with significant astigmatism (the eyeball is oval rather than perfectly round) before the operation may still need to wear glasses for distance and near after the cataract operation, as a routine cataract operation and a standard lens implant do not correct astigmatism. However, some surgeons may use a special incision technique (limbal relaxing incision) during surgery to reduce the amount of astigmatism, or even insert a toric lens implant to correct a high degree of astigmatism. This must be discussed with the surgeon before surgery so that a special lens implant can be ordered.

How accurate is the biometry test?

Modern biometry, especially with the laser interferometry method, is very accurate. Patients can expect a better than 90% chance of achieving a result within 1 diopter of the target refraction.

However, in patients with a very long eyeball (very short-sighted) or a very short eyeball (very long-sighted), the accuracy is much lower. These patients should expect some residual refractive error that will require glasses for both distance and near to see very clearly.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1927 2023-10-11 00:05:56

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1931) Siren

Gist

A siren is a device for making a loud warning noise.

Summary

A siren is a noisemaking device producing a piercing sound of definite pitch. Used as a warning signal, it was invented in the late 18th century by the Scottish natural philosopher John Robison. It was named by the French engineer Charles Cagniard de La Tour, who devised an acoustical instrument of the type in 1819. A disk with evenly spaced holes around its edge is rotated at high speed, interrupting at regular intervals a jet of air directed at the holes. The resulting regular pulsations cause a sound wave in the surrounding air. The siren is thus classified as a free aerophone. The sound-wave frequency of its pitch equals the number of air puffs (or holes times number of revolutions) per second. The strident sound results from the high number of overtones (harmonics) present.
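The rule that the pitch equals holes times revolutions per second can be written as a one-line calculation. The disk with 12 holes spinning at 3000 revolutions per minute is a hypothetical example, not a figure for any real siren.

```python
def siren_frequency_hz(holes, revolutions_per_minute):
    """Fundamental frequency: one air puff per hole per revolution."""
    revolutions_per_second = revolutions_per_minute / 60.0
    return holes * revolutions_per_second


# Hypothetical disk: 12 holes at 3000 rpm gives 50 rev/s x 12 = 600 puffs per second.
frequency = siren_frequency_hz(holes=12, revolutions_per_minute=3000)
print(f"{frequency:.0f} Hz")
```

Doubling either the number of holes or the rotation speed doubles the frequency, which raises the pitch by one octave.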

Details

A siren is a loud noise-making device. Civil defense sirens are mounted in fixed locations and used to warn of natural disasters or attacks. Sirens are used on emergency service vehicles such as ambulances, police cars, and fire engines. There are two general types: mechanical and electronic.

Many fire sirens (used for summoning volunteer firefighters) serve double duty as tornado or civil defense sirens, alerting an entire community of impending danger. Most fire sirens are either mounted on the roof of a fire station or on a pole next to the fire station. Fire sirens can also be mounted on or near government buildings, on tall structures such as water towers, as well as in systems where several sirens are distributed around a town for better sound coverage. Most fire sirens are single tone and mechanically driven by electric motors with a rotor attached to the shaft. Some newer sirens are electronically driven speakers.

Fire sirens are often called "fire whistles", "fire alarms", or "fire horns". Although there is no standard signaling of fire sirens, some utilize codes to inform firefighters of the location of the fire. Civil defense sirens that double as fire sirens can often produce an alternating "hi-lo" signal (similar to emergency vehicles in many European countries) as the fire signal, or an attack signal (slow wail), typically sounded three times, so as not to confuse the public with the standard civil defense signals of alert (steady tone) and fast wail (fast wavering tone). Fire sirens are often tested once a day at noon and are also called "noon sirens" or "noon whistles".

The first emergency vehicles relied on a bell. Then in the 70s, they switched to a duotone airhorn, which was itself overtaken in the 80s by an electronic wail.

History

Some time before 1799, the siren was invented by the Scottish natural philosopher John Robison. Robison's sirens were used as musical instruments; specifically, they powered some of the pipes in an organ. Robison's siren consisted of a stopcock that opened and closed a pneumatic tube. It was apparently driven by the rotation of a wheel.

In 1819, an improved siren was developed and named by Baron Charles Cagniard de la Tour. De la Tour's siren consisted of two perforated disks that were mounted coaxially at the outlet of a pneumatic tube. One disk was stationary, while the other disk rotated. The rotating disk periodically interrupted the flow of air through the fixed disk, producing a tone. De la Tour's siren could produce sound under water, suggesting a link with the sirens of Greek mythology; hence the name he gave to the instrument.

Instead of disks, most modern mechanical sirens use two concentric cylinders, which have slots parallel to their length. The inner cylinder rotates while the outer one remains stationary. As air under pressure flows out of the slots of the inner cylinder and then escapes through the slots of the outer cylinder, the flow is periodically interrupted, creating a tone. The earliest such sirens were developed during 1877–1880 by James Douglass and George Slight (1859–1934) of Trinity House; the final version was first installed in 1887 at the Ailsa Craig lighthouse in Scotland's Firth of Clyde. When commercial electric power became available, sirens were no longer driven by external sources of compressed air, but by electric motors, which generated the necessary flow of air via a simple centrifugal fan, which was incorporated into the siren's inner cylinder.

To direct a siren's sound and to maximize its power output, a siren is often fitted with a horn, which transforms the high-pressure sound waves in the siren to lower-pressure sound waves in the open air.

The earliest way of summoning volunteer firemen to a fire was by ringing a bell, either mounted atop the fire station or in the belfry of a local church. As electricity became available, the first fire sirens were manufactured. In 1886, French electrical engineer Gustave Trouvé developed a siren to announce the silent arrival of his electric boats. Two early fire siren manufacturers were William A. Box Iron Works, who made the "Denver" sirens as early as 1905, and the Inter-State Machine Company (later the Sterling Siren Fire Alarm Company), who made the ubiquitous Model "M" electric siren, which was the first dual tone siren. The popularity of fire sirens took off by the 1920s, with many manufacturers, including the Federal Electric Company and Decot Machine Works, creating their own sirens. Since the 1970s, many communities have deactivated their fire sirens as pagers became available for fire department use. Some sirens still remain as a backup to pager systems.

During the Second World War, the British civil defence used a network of sirens to alert the general population to the imminence of an air raid. A single tone denoted an "all clear". A series of tones denoted an air raid.

Types:

Pneumatic

The pneumatic siren, which is a free aerophone, consists of a rotating disk with holes in it (called a chopper, siren disk or rotor), such that the material between the holes interrupts a flow of air from fixed holes on the outside of the unit (called a stator). As the holes in the rotating disk alternately prevent and allow air to flow, the result is alternating compressed and rarefied air pressure, i.e. sound. Such sirens can consume large amounts of energy. To reduce the energy consumption without losing sound volume, some designs of pneumatic sirens are boosted by forcing compressed air through the siren disk from a tank that can be refilled by a low-powered compressor.

In United States English language usage, vehicular pneumatic sirens are sometimes referred to as mechanical or coaster sirens, to differentiate them from electronic devices. Mechanical sirens driven by an electric motor are often called "electromechanical". One example is the Q2B siren sold by Federal Signal Corporation. Because of its high current draw (100 amps when power is applied) its application is normally limited to fire apparatus, though it has seen increasing use on type IV ambulances and rescue-squad vehicles. Its distinct tone of urgency, high sound pressure level (123 dB at 10 feet) and square sound waves account for its effectiveness.
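To put a figure such as 123 dB at 10 feet in context, the sketch below estimates the level at other distances, assuming ideal free-field spherical spreading (the level falls by about 6 dB per doubling of distance). That assumption ignores reflections, obstacles, wind and atmospheric absorption, so it gives only a rough upper bound for a real street. The function name is hypothetical.

```python
import math


def level_at_distance(level_db, reference_distance, distance):
    """Sound pressure level at `distance`, given the level at `reference_distance`.

    Assumes free-field spherical spreading; both distances use the same unit.
    """
    return level_db - 20.0 * math.log10(distance / reference_distance)


# 123 dB at 10 feet, as quoted above, extrapolated to 100 feet.
print(f"{level_at_distance(123.0, 10.0, 100.0):.1f} dB at 100 feet")
```

Under this assumption, every tenfold increase in distance costs 20 dB, which is one reason a high source level matters for a vehicle approaching an intersection.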

In Germany and some other European countries, the pneumatic two-tone (hi-lo) siren consists of two sets of air horns, one high pitched and the other low pitched. An air compressor blows the air into one set of horns, and then it automatically switches to the other set. As this back and forth switching occurs, the sound changes tones. Its sound power varies, but could get as high as approximately 125 dB, depending on the compressor and the horns. Compared with mechanical sirens, it uses much less electricity but needs more maintenance.

In a pneumatic siren, the stator is the part which cuts off and reopens air as rotating blades of a chopper move past the port holes of the stator, generating sound. The pitch of the siren's sound is a function of the speed of the rotor and the number of holes in the stator. A siren with only one row of ports is called a single tone siren. A siren with two rows of ports is known as a dual tone siren. By placing a second stator over the main stator and attaching a solenoid to it, one can repeatedly close and open all of the stator ports thus creating a tone called a pulse. If this is done while the siren is wailing (rather than sounding a steady tone) then it is called a pulse wail. By doing this separately over each row of ports on a dual tone siren, one can alternately sound each of the two tones back and forth, creating a tone known as Hi/Lo. If this is done while the siren is wailing, it is called a Hi/Lo wail. This equipment can also do pulse or pulse wail. The ports can be opened and closed to send Morse code. A siren which can do both pulse and Morse code is known as a code siren.

Electronic

Electronic sirens incorporate circuits such as oscillators, modulators, and amplifiers to synthesize a selected siren tone (wail, yelp, pierce/priority/phaser, hi-lo, scan, airhorn, manual, and a few more) which is played through external speakers. It is not unusual, especially in the case of modern fire engines, to see an emergency vehicle equipped with both types of sirens. Often, police sirens also use the interval of a tritone to help draw attention. The first electronic siren that mimicked the sound of a mechanical siren was invented in 1965 by Motorola employees Ronald H. Chapman and Charles W. Stephens.

Other types

Steam whistles were also used as a warning device if a supply of steam was present, such as a sawmill or factory. These were common before fire sirens became widely available, particularly in the former Soviet Union. Fire horns, large compressed air horns, also were and still are used as an alternative to a fire siren. Many fire horn systems were wired to fire pull boxes that were located around a town, and this would "blast out" a code in respect to that box's location. For example, pull box number 233, when pulled, would trigger the fire horn to sound two blasts, followed by a pause, followed by three blasts, followed by a pause, followed by three more blasts. In the days before telephones, this was the only way firefighters would know the location of a fire. The coded blasts were usually repeated several times. This technology was also applied to many steam whistles as well. Some fire sirens are fitted with brakes and dampers, enabling them to sound out codes as well. These units tended to be unreliable, and are now uncommon.
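The pull-box coding described above (box 233 sounding two blasts, a pause, three blasts, a pause, three blasts) can be sketched as a digit-by-digit conversion. The function names are hypothetical, and the sketch assumes box numbers contain only the digits 1 to 9, since the text does not say how a zero would be signalled.

```python
def box_number_to_blasts(box_number):
    """Split a pull-box number into one group of blasts per digit."""
    groups = [int(digit) for digit in str(box_number)]
    if 0 in groups:
        # Assumption: a zero digit has no defined signal in this sketch.
        raise ValueError("box numbers containing 0 are not handled")
    return groups


def describe_signal(box_number):
    """Render the blast groups as text, with a pause between groups."""
    parts = []
    for count in box_number_to_blasts(box_number):
        parts.append(f"{count} blast" + ("s" if count != 1 else ""))
    return ", pause, ".join(parts)


print(describe_signal(233))
```

Repeating the whole sequence several times, as the horn systems did, gives listeners a chance to count the groups again if they miss the start.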

Physics of the sound

Mechanical sirens blow air through a slotted disk or rotor. The cyclic waves of air pressure are the physical form of sound. In many sirens, a centrifugal blower and rotor are integrated into a single piece of material, spun by an electric motor.

Electronic sirens are high efficiency loudspeakers, with specialized amplifiers and tone generation. They usually imitate the sounds of mechanical sirens in order to be recognizable as sirens.

To improve efficiency, a siren uses a relatively low frequency, usually several hundred hertz. Lower frequency sound waves go around corners and through holes better.

Sirens often use horns to aim the pressure waves. This uses the siren's energy more efficiently by aiming it. Exponential horns achieve similar efficiencies with less material.

The frequency, i.e. the cycles per second, of the sound of a mechanical siren is controlled by the speed of its rotor and the number of openings. The wailing of a mechanical siren occurs as the rotor speeds up and slows down. Wailing usually identifies an attack or urgent emergency.

The characteristic timbre or musical quality of a mechanical siren arises because its waveform, graphed as pressure over time, is a triangle wave. As the openings widen, the emitted pressure increases. As they close, it decreases. So the characteristic frequency distribution of the sound has harmonics at odd (1, 3, 5...) multiples of the fundamental. The amplitude of the harmonics rolls off as the inverse square of their frequency. Distant sirens sound more "mellow" or "warmer" because their harsh high frequencies are absorbed by nearby objects.
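For an ideal triangle wave of unit peak amplitude, the Fourier series contains only odd harmonics, and the amplitude of harmonic n is 8 / (π² n²), so the power in each harmonic falls as 1 / n⁴. The sketch below tabulates this for the first few harmonics. A real siren only approximates a triangle wave, so these figures are an idealization.

```python
import math


def triangle_harmonic_amplitude(n):
    """Magnitude of the n-th harmonic of an ideal unit-amplitude triangle wave."""
    if n % 2 == 0:
        return 0.0  # even harmonics are absent
    return 8.0 / (math.pi ** 2 * n ** 2)


for n in range(1, 8):
    amplitude = triangle_harmonic_amplitude(n)
    relative_power = (amplitude / triangle_harmonic_amplitude(1)) ** 2
    print(f"harmonic {n}: amplitude {amplitude:.4f}, relative power {relative_power:.5f}")
```

The third harmonic has one ninth of the fundamental's amplitude, so the upper harmonics colour the tone without dominating it.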

Two tone sirens are often designed to emit a minor third, musically considered a "sad" sound. To do this, they have two rotors with different numbers of openings. The upper tone is produced by a rotor with a count of openings divisible by six. The lower tone's rotor has a count of openings divisible by five. Unlike an organ, a mechanical siren's minor third is almost always physical, not tempered. To achieve tempered ratios in a mechanical siren, the rotors must either be geared, run by different motors, or have very large numbers of openings. Electronic sirens can easily produce a tempered minor third.
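The difference between the "physical" minor third produced by rotors with openings in a 6:5 ratio and the tempered minor third of three equal-tempered semitones can be measured in cents (hundredths of a semitone). The sketch below makes that comparison; the function name is hypothetical.

```python
import math


def interval_cents(frequency_ratio):
    """Size of a musical interval in cents (1200 cents per octave)."""
    return 1200.0 * math.log2(frequency_ratio)


physical_minor_third = interval_cents(6 / 5)             # rotor openings in ratio 6:5
tempered_minor_third = interval_cents(2 ** (3 / 12))     # three equal-tempered semitones

print(f"physical (6:5): {physical_minor_third:.2f} cents")
print(f"tempered:       {tempered_minor_third:.2f} cents")
print(f"difference:     {physical_minor_third - tempered_minor_third:.2f} cents")
```

The 6:5 interval is roughly 16 cents wider than the tempered one, which is why matching the tempered ratio mechanically would need gearing, separate motors, or rotors with very many openings.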

A mechanical siren that can alternate between its tones uses solenoids to move rotary shutters that cut off the air supply to one rotor, then the other. This is often used to identify a fire warning.

When testing, a frightening sound is not desirable, so electronic sirens then usually emit musical tones; Westminster chimes are common. Mechanical sirens sometimes self-test by "growling", i.e. operating at low speeds.

In music

Sirens are also used as musical instruments. They have been prominently featured in works by avant-garde and contemporary classical composers. Examples include Edgard Varèse's compositions Amériques (1918–21, rev. 1927), Hyperprism (1924), and Ionisation (1931); Arseny Avraamov's Symphony of Factory Sirens (1922); George Antheil's Ballet Mécanique (1926); Dmitri Shostakovich's Symphony No. 2 (1927); and Henry Fillmore's "The Klaxon: March of the Automobiles" (1929), which features a klaxophone.

In popular music, sirens have been used in The Chemical Brothers' "Song to the Siren" (1992) and in a CBS News 60 Minutes segment played by percussionist Evelyn Glennie. A variation of a siren, played on a keyboard, provides the opening notes of the REO Speedwagon song "Ridin' the Storm Out". Some heavy metal bands also use air-raid-type siren intros at the beginning of their shows. The opening measure of "Money City Maniacs" (1998) by Canadian band Sloan uses multiple overlapping sirens.

Vehicle-mounted:

Approvals or certifications

Governments may have standards for vehicle-mounted sirens. For example, in California, sirens are designated Class A or Class B. A Class A siren is loud enough that it can be mounted nearly anywhere on a vehicle. Class B sirens are not as loud and must be mounted on a plane parallel to the level roadway and parallel to the direction the vehicle travels when driving in a straight line.

Sirens must also be approved by local agencies, in some cases. For example, the California Highway Patrol approves specific models for use on emergency vehicles in the state. The approval is important because it ensures the devices perform adequately. Moreover, using unapproved devices could be a factor in determining fault if a collision occurs.

The SAE International Emergency Warning Lights and Devices committee oversees the SAE emergency vehicle lighting practices and the siren practice, J1849. This practice was updated through cooperation between the SAE and the National Institute of Standards and Technology. Though this version remains quite similar to the California Title 13 standard for sound output at various angles, this updated practice enables an acoustic laboratory to test a dual speaker siren system for compliant sound output.

Best practices

Siren speakers, or mechanical sirens, should always be mounted ahead of the passenger compartment. This reduces the noise for occupants and makes two-way radio and mobile telephone audio more intelligible during siren use. It also puts the sound where it will be useful. A 2007 study found passenger compartment sound levels could exceed 90 dB(A).

Research has shown that sirens mounted behind the engine grille or under the wheel arches produce less unwanted noise inside the passenger cabin and to the side and rear of the vehicle while maintaining noise levels that give adequate warning. Adding broadband sound to sirens can improve their localisation, as in a directional siren, because a spread of frequencies makes use of the three ways the brain detects the direction of a sound: interaural level difference, interaural time difference and the head-related transfer function.

The worst installations are those where the siren sound is emitted above and slightly behind the vehicle occupants such as cases where a light-bar mounted speaker is used on a sedan or pickup. Vehicles with concealed sirens also tend to have high noise levels inside. In some cases, concealed or poor installations produce noise levels which can permanently damage vehicle occupants' hearing.

Electric-motor-driven mechanical sirens may draw 50 to 200 amperes at 12 volts (DC) when spinning up to operating speed. Appropriate wiring and transient protection for engine control computers are necessary parts of an installation. Wiring should be similar in size to the wiring to the vehicle engine starter motor. Mechanical vehicle-mounted devices usually have an electric brake, a solenoid that presses a friction pad against the siren rotor. When an emergency vehicle arrives on-scene or is cancelled en route, the operator can rapidly stop the siren.
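
As a rough worked example of the spin-up figures above: electrical power is voltage times current (P = V × I), so 50 to 200 amperes at 12 volts corresponds to roughly 600 to 2,400 watts. The function name below is only illustrative.

```python
def power_watts(volts, amps):
    """Electrical power from P = V * I."""
    return volts * amps

# Range quoted in the text: 50 A to 200 A at 12 V DC
low = power_watts(12, 50)
high = power_watts(12, 200)
print(low, high)
```

A load of this size is comparable to a starter motor, which is consistent with the advice to use starter-sized wiring.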

Multi-speaker electronic sirens are often alleged to have dead spots at certain angles to the vehicle's direction of travel. These are caused by phase differences: the sound coming from the speaker array can phase cancel in some situations. This phase cancellation occurs at single frequencies, determined by the spacing of the speakers. The same phase differences also produce increases in level at other frequencies, again depending on the frequency and the speaker spacing. However, sirens are designed to sweep the frequency of their sound output, typically by no less than one octave. This sweeping minimizes the effects of phase cancellation. The result is that the average sound output from a dual speaker siren system is 3 dB greater than that of a single speaker system.
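
The phase argument above can be sketched numerically. This is a simplified model, not a description of any real siren: it assumes two identical speakers driven in phase, a listener who is a fixed extra distance from one of them, and a speed of sound of 343 m/s. The path difference and the sweep range are hypothetical values chosen for illustration.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s in air at about 20 degrees C (assumed)

def relative_power(freq_hz, path_diff_m):
    """Power of two equal speakers relative to a single speaker.

    Adding two unit-amplitude waves with phase difference phi gives
    |1 + e^(i*phi)|^2 = 2 + 2*cos(phi).
    """
    phi = 2 * math.pi * freq_hz * path_diff_m / SPEED_OF_SOUND
    return 2 + 2 * math.cos(phi)

def swept_gain_db(f_low, f_high, path_diff_m, steps=1000):
    """Average gain in dB over a linear frequency sweep."""
    total = 0.0
    for i in range(steps):
        f = f_low + (f_high - f_low) * i / (steps - 1)
        total += relative_power(f, path_diff_m)
    return 10 * math.log10(total / steps)

# Hypothetical geometry: the listener is 0.5 m farther from one speaker.
dead_spot = relative_power(343.0, 0.5)    # half a wavelength apart: cancels
gain = swept_gain_db(700.0, 1400.0, 0.5)  # one-octave sweep
print(dead_spot, round(gain, 2))
```

At the single frequency where the path difference is half a wavelength the two signals cancel, but averaged over the octave sweep the cosine term nearly vanishes and the power is about twice that of one speaker, which is about 3 dB.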

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

Offline

#1928 2023-10-12 00:02:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1932) Wavelength

Gist

Wavelength is the distance between identical points (adjacent crests) in the adjacent cycles of a waveform signal propagated in space or along a wire. In wireless systems, this length is usually specified in meters (m), centimeters (cm) or millimeters (mm).

Summary

Wavelength is the distance between corresponding points of two consecutive waves. “Corresponding points” refers to two points or particles in the same phase—i.e., points that have completed identical fractions of their periodic motion. Usually, in transverse waves (waves with points oscillating at right angles to the direction of their advance), wavelength is measured from crest to crest or from trough to trough; in longitudinal waves (waves with points vibrating in the same direction as their advance), it is measured from compression to compression or from rarefaction to rarefaction. Wavelength is usually denoted by the Greek letter lambda; it is equal to the speed (v) of a wave train in a medium divided by its frequency (f).

Details

Wavelength is the distance between identical points (adjacent crests) in the adjacent cycles of a waveform signal propagated in space or along a wire. In wireless systems, this length is usually specified in meters (m), centimeters (cm) or millimeters (mm). In the case of infrared (IR), visible light, ultraviolet (UV), and gamma radiation (γ), the wavelength is more often specified in nanometers (nm), which are units of 10^-9 m, or angstroms (Å), which are units of 10^-10 m.

Wavelength is inversely related to frequency, which refers to the number of wave cycles per second. The higher the frequency of the signal, the shorter the wavelength.

A sound wave is the pattern of disturbance caused by the movement of energy traveling through a medium, such as air, water or any other liquid or solid matter, as it propagates away from the source of the sound. A water wave is an example of a wave that involves a combination of longitudinal and transverse motions. An electromagnetic wave is created as a result of vibrations between an electric field and a magnetic field.

Instruments such as optical spectrometers or optical spectrum analyzers can be used to detect wavelengths in the electromagnetic spectrum.

Wavelengths are measured in kilometers (km), meters, millimeters, micrometers (μm) and even smaller denominations, including nanometers, picometers (pm) and femtometers (fm). The smaller units are used to measure shorter wavelengths on the electromagnetic spectrum, such as UV radiation, X-rays and gamma rays. Conversely, radio waves have much longer wavelengths, ranging anywhere from 1 mm to 100 km, depending on the frequency.

If f is the frequency of the signal as measured in megahertz (MHz) and the Greek letter lambda λ is the wavelength as measured in meters, then:

λ = 300/f

and, conversely:

f = 300/λ
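
The constant 300 is the speed of light, about 299.792 million meters per second, rounded; with f in MHz the factors of a million cancel. A minimal Python sketch of the two formulas above (the function names are illustrative, not from any library):

```python
def wavelength_m(freq_mhz):
    """Approximate free-space wavelength in meters for a frequency in MHz."""
    return 300.0 / freq_mhz

def frequency_mhz(wavelength_in_m):
    """Approximate frequency in MHz for a free-space wavelength in meters."""
    return 300.0 / wavelength_in_m

print(wavelength_m(100.0))  # 100 MHz, in the FM broadcast band
print(frequency_mhz(3.0))
```

So a 100 MHz signal has a wavelength of about 3 meters, and a 3-meter wavelength corresponds to about 100 MHz.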

The distance between repetitions in the waves indicates where the wavelength is on the electromagnetic radiation spectrum, which includes radio waves in the audio range and waves in the visible light range.

A wavelength can be calculated by dividing the velocity of a wave by its frequency. This is often expressed as the equation:

λ = v/f

Here λ represents wavelength, expressed in meters; v is the wave velocity, in meters per second (m/s); and f stands for frequency, which is measured in hertz (Hz).
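
The same relationship (wavelength equals velocity divided by frequency) applies to any wave, not only electromagnetic ones. A small sketch for a 440 Hz tone, assuming illustrative speeds of sound of about 343 m/s in air and about 1,480 m/s in water:

```python
def wavelength(velocity_m_per_s, frequency_hz):
    """Wavelength in meters from wave velocity and frequency."""
    return velocity_m_per_s / frequency_hz

# Assumed, approximate speeds of sound (they vary with temperature)
in_air = wavelength(343.0, 440.0)
in_water = wavelength(1480.0, 440.0)
print(round(in_air, 2), round(in_water, 2))
```

The frequency is the same in both cases; only the velocity, and therefore the wavelength, changes with the medium.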

Wavelength division multiplexing

In the 1990s, fiber optic cable's ability to carry data was significantly increased with the development of wavelength division multiplexing (WDM). This technique was introduced by AT&T's Bell Labs, which established a way to split a beam of light into different wavelengths that could travel through the fiber independently of one another.

WDM, along with dense WDM (DWDM) and other methods, permits a single optical fiber to transmit multiple signals at the same time. As a result, capacity can be added to existing optical networks, also called photonic networks.

The three most common wavelengths in fiber optics are 850 nm, 1,300 nm and 1,550 nm.

Waveforms

Waveform describes the shape or form of a wave signal. The term "wave" is typically used to describe an acoustic signal or cyclical electromagnetic signal because each is similar to waves in a body of water.

There are four basic types of waveforms:

* Sine wave. The voltage increases and decreases in a steady curve. Sine waves can be found in sound waves, light waves and water waves. Additionally, the alternating current voltage provided in the public power grid is in the form of a sine wave.

* Square wave. The square wave represents a signal where voltage simply turns on, stays on for a while, turns off, stays off for a while and repeats. It's called a square wave because the graph of a square wave shows sharp, right-angle turns. Square waves are found in many electronic circuits.

* Triangle wave. In this wave, the voltage increases in a straight line until it reaches a peak value, and then it decreases in a straight line. If the voltage reaches zero and starts to rise again, the triangle wave is a form of direct current (DC). However, if the voltage crosses zero and goes negative before it starts to rise again, the triangle wave is a form of alternating current (AC).

* Sawtooth wave. The sawtooth wave is a hybrid of a triangle wave and a square wave. In most sawtooth waves, the voltage increases in a straight line until it reaches its peak voltage, and then the voltage drops instantly -- or almost instantly -- to zero and repeats immediately.
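
The four shapes listed above can be sketched as simple functions of time. This is only an illustration under stated assumptions: every waveform is normalized to swing between -1 and +1 (so the sawtooth here drops to -1 rather than to zero), and the function names and the `period` parameter are not from any library.

```python
import math

def sine(t, period=1.0):
    """Smooth, steady curve."""
    return math.sin(2 * math.pi * t / period)

def square(t, period=1.0):
    """+1 for the first half of each cycle, -1 for the second half."""
    return 1.0 if (t % period) < period / 2 else -1.0

def triangle(t, period=1.0):
    """Rises in a straight line to the peak, then falls in a straight line."""
    phase = (t % period) / period
    return 4 * phase - 1 if phase < 0.5 else 3 - 4 * phase

def sawtooth(t, period=1.0):
    """Rises in a straight line, then drops back at the end of each cycle."""
    phase = (t % period) / period
    return 2 * phase - 1

# Sample each waveform at quarter-cycle steps
for name, wave in [("sine", sine), ("square", square),
                   ("triangle", triangle), ("sawtooth", sawtooth)]:
    print(name, [round(wave(t / 4), 2) for t in range(5)])
```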

Relationship between frequency and wavelength

Wavelength and frequency of light are closely related: The higher the frequency, the shorter the wavelength, and the lower the frequency, the longer the wavelength. The energy of a wave is directly proportional to its frequency but inversely proportional to its wavelength. That means the greater the energy, the larger the frequency and the shorter the wavelength. Given the relationship between wavelength and frequency, short wavelengths are more energetic than long wavelengths.
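
For light, the proportionality described above is usually written as the Planck relation for a single photon, E = hf = hc/λ. The text does not name this relation, so treating it as the intended one is an assumption. A short sketch comparing two visible wavelengths (the function name is illustrative):

```python
PLANCK_H = 6.62607015e-34       # Planck constant, joule-seconds
SPEED_OF_LIGHT = 299_792_458.0  # meters per second, in a vacuum

def photon_energy_joules(wavelength_m):
    """Energy of one photon from E = h * c / wavelength."""
    return PLANCK_H * SPEED_OF_LIGHT / wavelength_m

green = photon_energy_joules(500e-9)  # 500 nm
red = photon_energy_joules(700e-9)    # 700 nm
print(green > red)
```

The shorter 500 nm wavelength carries more energy per photon than the longer 700 nm wavelength.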

Electromagnetic waves in a vacuum always travel at the same speed: about 299,792 kilometers per second (km/s). In the electromagnetic spectrum, there are numerous types of waves with different frequencies and wavelengths. However, they're all related by one equation: The frequency of any electromagnetic wave multiplied by its wavelength equals the speed of light.

Wavelengths in wireless networks

Although frequencies are more commonly discussed in wireless networking, wavelengths are also an important factor in Wi-Fi networks. Wi-Fi operates at five frequencies, all in the gigahertz range: 2.4 GHz, 3.6 GHz, 4.9 GHz, 5 GHz and 5.9 GHz. Higher frequencies have shorter wavelengths, and signals with shorter wavelengths have more trouble penetrating obstacles like walls and floors.

As a result, wireless access points that operate at higher frequencies -- with shorter wavelengths -- often consume more power to transmit data at similar speeds and distances achieved by devices that operate at lower frequencies -- with longer wavelengths.
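
To put numbers on this, the wavelengths of the Wi-Fi frequencies listed above can be estimated from wavelength = speed of light / frequency. This sketch assumes free-space propagation; the function name is illustrative.

```python
SPEED_OF_LIGHT = 299_792_458.0  # meters per second, in a vacuum

def wavelength_cm(freq_ghz):
    """Free-space wavelength in centimeters for a frequency in GHz."""
    return SPEED_OF_LIGHT / (freq_ghz * 1e9) * 100.0

bands_ghz = [2.4, 3.6, 4.9, 5.0, 5.9]
for f in bands_ghz:
    print(f"{f} GHz -> {wavelength_cm(f):.1f} cm")
```

The 2.4 GHz band works out to roughly 12.5 cm and the 5 GHz band to roughly 6 cm, so all of these signals have wavelengths of a few centimeters.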

Additional Information

In physics and mathematics, wavelength or spatial period of a wave or periodic function is the distance over which the wave's shape repeats. In other words, it is the distance between consecutive corresponding points of the same phase on the wave, such as two adjacent crests, troughs, or zero crossings. Wavelength is a characteristic of both traveling waves and standing waves, as well as other spatial wave patterns. The inverse of the wavelength is called the spatial frequency. Wavelength is commonly designated by the Greek letter lambda (λ). The term "wavelength" is also sometimes applied to modulated waves, and to the sinusoidal envelopes of modulated waves or waves formed by interference of several sinusoids.

Assuming a sinusoidal wave moving at a fixed wave speed, wavelength is inversely proportional to the frequency of the wave: waves with higher frequencies have shorter wavelengths, and lower frequencies have longer wavelengths.

Wavelength depends on the medium (for example, vacuum, air, or water) that a wave travels through. Examples of waves are sound waves, light, water waves and periodic electrical signals in a conductor. A sound wave is a variation in air pressure, while in light and other electromagnetic radiation the strengths of the electric and magnetic fields vary. Water waves are variations in the height of a body of water. In a crystal lattice vibration, atomic positions vary.

The range of wavelengths or frequencies for wave phenomena is called a spectrum. The name originated with the visible light spectrum but now can be applied to the entire electromagnetic spectrum as well as to a sound spectrum or vibration spectrum.


#1929 2023-10-13 00:10:12

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1933) Toothbrush

Gist

A toothbrush is a small brush with a long handle that you use to clean your teeth.

Details

A toothbrush is an oral hygiene tool used to clean the teeth, gums, and tongue. It consists of a head of tightly clustered bristles, atop which toothpaste can be applied, mounted on a handle which facilitates the cleaning of hard-to-reach areas of the mouth. Toothbrushes should be used in conjunction with something to clean between the teeth where the bristles cannot reach, for example floss, tape or interdental brushes.

They are available with different bristle textures, sizes, and forms. Most dentists recommend using a soft toothbrush since hard-bristled toothbrushes can damage tooth enamel and irritate the gums.

Because many common and effective ingredients in toothpaste are harmful if swallowed in large doses and instead should be spat out, the act of brushing teeth is most often done at a sink within the kitchen or bathroom, where the brush may be rinsed off afterwards to remove any debris remaining and then dried to reduce conditions ideal for germ growth (and, if it is a wooden toothbrush, mold as well).

Some toothbrushes have plant-based handles, often bamboo. However, numerous others are made of cheap plastic; such brushes constitute a significant source of pollution. Over 1 billion toothbrushes are disposed of into landfills annually in the United States alone. Bristles are commonly made of nylon (which, like other plastics, is not biodegradable but may still be recycled) or bamboo viscose.

History:

Precursors

Before the invention of the toothbrush, a variety of oral hygiene measures had been used. This has been verified by excavations during which tree twigs, bird feathers, animal bones and porcupine quills were recovered.

The predecessor of the toothbrush is the chew stick. Chew sticks were twigs with one frayed end used to brush the teeth, while the other end was used as a toothpick. The earliest chew sticks were discovered in Sumer in southern Mesopotamia, dating from 3500 BC, and in an Egyptian tomb dating from 3000 BC; they are also mentioned in Chinese records dating from 1600 BC.

The Indian way of using tooth wood for brushing is presented by the Chinese Monk Yijing (635–713 CE) when he describes the rules for monks in his book: "Every day in the morning, a monk must chew a piece of tooth wood to brush his teeth and scrape his tongue, and this must be done in the proper way. Only after one has washed one's hands and mouth may one make salutations. Otherwise both the saluter and the saluted are at fault. In Sanskrit, the tooth wood is known as the dantakastha—danta meaning tooth, and kastha, a piece of wood. It is twelve finger-widths in length. The shortest is not less than eight finger-widths long, resembling the little finger in size. Chew one end of the wood well for a long while and then brush the teeth with it."

The Greeks and Romans used toothpicks to clean their teeth, and toothpick-like twigs have been excavated in Qin dynasty tombs. Chew sticks remain common in Africa and the rural Southern United States. In the Islamic world, the use of the chewing stick miswak is considered a pious action and has been prescribed for use before each of the five daily prayers. Miswaks have been used by Muslims since the 7th century. Twigs of the neem tree were used by ancient Indians. Neem, in its full bloom, can aid in healing by keeping the area clean and disinfected. Even today, neem twigs called datun are used for brushing teeth in India, although this is not very common.

Toothbrush

The first bristle toothbrush resembling the modern one was found in China. Used during the Tang dynasty (619–907), it consisted of hog bristles. The bristles were sourced from hogs living in Siberia and northern China because the colder temperatures provided firmer bristles. They were attached to a handle manufactured from bamboo or bone, forming a toothbrush. In 1223, Japanese Zen master Dōgen Kigen recorded in his Shōbōgenzō that he saw monks in China clean their teeth with brushes made of horsetail hairs attached to an oxbone handle. The bristle toothbrush was brought from China to Europe by travellers and was adopted there during the 17th century. The earliest identified use of the word toothbrush in English was in the autobiography of Anthony Wood, who wrote in 1690 that he had bought a toothbrush from J. Barret. Europeans found the hog bristle toothbrushes imported from China too firm and preferred softer bristle toothbrushes made from horsehair. Mass-produced toothbrushes made with horse or boar bristle continued to be imported to Britain from China until the mid 20th century.

In the UK, William Addis is believed to have produced the first mass-produced toothbrush in 1780. In 1770, he had been jailed for causing a riot. While in prison he decided that using a rag with soot and salt on the teeth was ineffective and could be improved. After saving a small bone from a meal, he drilled small holes into it, passed tufts of bristles that he had obtained from one of the guards through the holes, tied them in place and sealed the holes with glue. After his release, he started a business manufacturing toothbrushes and became wealthy. He died in 1808, bequeathing the business to his eldest son. It remained within family ownership until 1996. Under the name Wisdom Toothbrushes, the company now manufactures 70 million toothbrushes per year in the UK. By 1840 toothbrushes were being mass-produced in Britain, France, Germany, and Japan. Pig bristles were used for cheaper toothbrushes and badger hair for the more expensive ones.

Hertford Museum in Hertford, UK, holds approximately 5000 brushes that make up part of the Addis Collection. The Addis factory on Ware Road was a major employer in the town until 1996. Since the closure of the factory, Hertford Museum has received photographs and documents relating to the archive, and collected oral histories from former employees.

The first patent for a toothbrush was granted to H.N. Wadsworth in 1857 (U.S. Patent No. 18,653) in the United States, but mass production there did not start until 1885. The improved design had a bone handle with holes bored into it for the Siberian boar hair bristles. Unfortunately, animal bristle was not an ideal material as it retained bacteria, did not dry efficiently and the bristles often fell out. In addition to bone, handles were made of wood or ivory. In the United States, brushing teeth did not become routine until after World War II, when American soldiers had to clean their teeth daily.

During the 1900s, celluloid gradually replaced bone handles. Natural animal bristles were also replaced by synthetic fibers, usually nylon, by DuPont in 1938. The first nylon bristle toothbrush made with nylon yarn went on sale on February 24, 1938. The first electric toothbrush, the Broxodent, was invented in Switzerland in 1954. By the turn of the 21st century nylon had come to be widely used for the bristles and the handles were usually molded from thermoplastic materials.

Johnson & Johnson, a leading medical supplies firm, introduced the "Reach" toothbrush in 1977. It differed from previous toothbrushes in three ways: it had an angled head, similar to dental instruments, to reach back teeth; the bristles were concentrated more closely than usual to clean each tooth of potentially cariogenic (cavity-causing) materials; and the outer bristles were longer and softer than the inner bristles. Other manufacturers soon followed with other designs aimed at improving effectiveness. In spite of the changes with the number of tufts and the spacing, the handle form and design, the bristles were still straight and difficult to maneuver. In 1978 Dr. George C. Collis developed the Collis Curve toothbrush which was the first toothbrush to have curved bristles. The curved bristles follow the curvature of the teeth and safely reach in between the teeth and into the sulcular areas.

In January 2003, the toothbrush was selected as the number one invention Americans could not live without according to the Lemelson-MIT Invention Index.

Types of toothbrush:

Multi-sided toothbrushes

A six-sided toothbrush can be used to brush all sides of the teeth, in both the upper and lower jaw, at the same time.

A multi-sided toothbrush is a fast and easy way to brush the teeth.

Electric toothbrush

Compared with manual side-to-side brushing, a multi-directional power brush might reduce the incidence of gingivitis and plaque. These brushes tend to be more costly and more damaging to the environment than manual toothbrushes. Most studies report performance equivalent to that of manual brushing, possibly with a decrease in plaque and gingivitis. An additional timer and pressure sensors can encourage a more efficient cleaning process. Electric toothbrushes can be classified, according to the speed of their movements, as standard power toothbrushes, sonic toothbrushes, or ultrasonic toothbrushes. Any electric toothbrush is technically a powered toothbrush. If the motion of the toothbrush is sufficiently rapid to produce a hum in the audible frequency range (20 Hz to 20,000 Hz), it can be classified as a sonic toothbrush. Any electric toothbrush with movement faster than this limit can be classified as an ultrasonic toothbrush. Certain ultrasonic toothbrushes, such as the Megasonex and the Ultreo, have both sonic and ultrasonic movements.
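
The speed-based classification above amounts to comparing the brush's movement frequency with the limits of the audible range. A minimal sketch, where the function name and the example frequencies are hypothetical:

```python
AUDIBLE_LOW_HZ = 20
AUDIBLE_HIGH_HZ = 20_000

def classify_toothbrush(movement_hz):
    """Classify an electric toothbrush by its movement frequency."""
    if movement_hz > AUDIBLE_HIGH_HZ:
        return "ultrasonic"
    if movement_hz >= AUDIBLE_LOW_HZ:
        return "sonic"
    return "standard power"

# Hypothetical example frequencies in hertz
for hz in (5, 260, 1_600_000):
    print(hz, classify_toothbrush(hz))
```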

There are different electric toothbrush heads designed for sensitive teeth and gums, increased stain removal, or different-sized bristles for tight or gapped teeth. The hand motion with an electric toothbrush is different from a manual toothbrush. They are meant to have the bristles do the work by just placing and moving the toothbrush. Fewer back and forth strokes are needed.

Interdental brush

An interdental or interproximal ("proxy") brush is a small brush, typically disposable, either supplied with a reusable angled plastic handle or an integral handle, used for cleaning between teeth and between the wires of dental braces and the teeth.

The use of interdental brushes in conjunction with tooth brushing has been shown to reduce both the amount of plaque and the incidence of gingivitis when compared to tooth brushing alone. Although there is some evidence that after tooth brushing with a conventional tooth brush, interdental brushes remove more plaque than dental floss, a systematic review reported insufficient evidence to determine such an association.

The size of interdental brushes is standardized in ISO 16409. The brush size, which is a number between 0 (small space between teeth) and 8 (large space), indicates the passage hole diameter. This corresponds to the space between two teeth that is just sufficient for the brush to go through without bending the wire. The color of the brushes differs between producers. The same is the case with respect to the wire diameter.

End-tuft brush

The small round brush head comprises seven tufts of tightly packed soft nylon bristles, trimmed so the bristles in the center can reach deeper into small spaces. The brush handle is ergonomically designed for a firm grip, giving the control and precision necessary to clean where most other cleaning aids cannot reach. These areas include the posterior of the wisdom teeth (third molars), orthodontic structures (braces), crowded teeth, and tooth surfaces that are next to missing teeth. It can also be used to clean areas around implants, bridges, dentures and other appliances.

Chewable toothbrush

A chewable toothbrush is a miniature plastic moulded toothbrush which can be placed inside the mouth. While not commonly used, they are useful to travelers and are sometimes available from bathroom vending machines. They are available in different flavors such as mint or bubblegum and should be disposed of after use. Other types of disposable toothbrushes include those that contain a small breakable plastic ball of toothpaste on the bristles, which can be used without water.

Musical toothbrush

A musical toothbrush is a type of manual or powered toothbrush designed to make the tooth brushing habit more interesting. It is more commonly introduced to children to gain their attention and positively influence their tooth brushing behavior. The music starts when the child starts brushing, plays continuously during brushing, and ends when the child stops brushing.

Tooth brushing:

Hygiene and care

It is not recommended to share toothbrushes with others, since besides general hygienic concerns, there is a risk of transmitting diseases that are typically transmittable by blood, such as Hepatitis C.

After use, it is advisable to rinse the toothbrush with water, shake it off and let the toothbrush dry.

Studies have shown that brushing to remove dental plaque at least once every 48 hours is enough to maintain gum and tooth health. Tooth brushing can remove plaque up to one millimeter below the gum line, and each person has a habitual brushing method, so more frequent brushing does not cover additional parts of the teeth or mouth. Most dentists recommend that patients brush twice a day in the hope that more frequent brushing will clean more areas of the mouth. Tooth brushing is the most common preventive healthcare activity, but rates of tooth and gum disease remain high, since lay people clean at most 40% of their tooth margins at the gum line. Videos show that even when asked to brush their best, they do not know how to clean effectively.

Adverse effects of toothbrushes

Teeth can be damaged by several factors, including poor oral hygiene but also incorrect oral hygiene. Especially for sensitive teeth, damage to dentin and gums can be prevented by several measures, including a correct brushing technique.

It is beneficial, when using a straight bristled brush, not to scrub horizontally over the necks of teeth, not to press the brush too hard against the teeth, to choose a toothpaste that is not too abrasive, and to wait at least 30 minutes after consumption of acidic food or drinks before brushing. Harder toothbrushes reduce plaque more efficiently but are more stressful to teeth and gums; using a medium to soft brush for a longer cleaning time was rated to be the best compromise between cleaning result and gum and tooth health.

A study by University College London found that advice on brushing technique and frequency given by 10 national dental associations, toothpaste and toothbrush companies, and in dental textbooks was inconsistent.


#1930 2023-10-14 00:47:04

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1934) Shield

Gist

A shield is a broad piece of defensive armor carried on the arm. More generally, a shield is something or someone that protects or defends.

Details

A shield is a piece of personal armour held in the hand, which may or may not be strapped to the wrist or forearm. Shields are used to intercept specific attacks, whether from close-ranged weaponry or projectiles such as arrows, by means of active blocks, as well as to provide passive protection by closing one or more lines of engagement during combat.

Shields vary greatly in size and shape, ranging from large panels that protect the user's whole body to small models (such as the buckler) that were intended for hand-to-hand-combat use. Shields also vary a great deal in thickness; whereas some shields were made of relatively deep, absorbent, wooden planking to protect soldiers from the impact of spears and crossbow bolts, others were thinner and lighter and designed mainly for deflecting blade strikes (like the roromaraugi or qauata). Finally, shields vary greatly in shape, ranging from rounded to angular and differing in proportional length and width, symmetry and edge pattern; different shapes provide more optimal protection for infantry or cavalry, enhance portability, provide secondary uses such as ship protection or as a weapon and so on.

In prehistory and during the era of the earliest civilisations, shields were made of wood, animal hide, woven reeds or wicker. In classical antiquity, the Barbarian Invasions and the Middle Ages, they were normally constructed of poplar tree, lime or another split-resistant timber, covered in some instances with a material such as leather or rawhide and often reinforced with a metal boss, rim or banding. They were carried by foot soldiers, knights and cavalry.

Depending on time and place, shields could be round, oval, square, rectangular, triangular, bilabial or scalloped. Sometimes they took on the form of kites or flatirons, or had rounded tops on a rectangular base with perhaps an eye-hole to look through when used in combat. The shield was held by a central grip or by straps, with some going over or around the user's arm and one or more being held by the hand.

Often shields were decorated with a painted pattern or an animal representation to show their army or clan. These designs developed into systematized heraldic devices during the High Middle Ages for purposes of battlefield identification. Even after the introduction of gunpowder and firearms to the battlefield, shields continued to be used by certain groups. In the 18th century, for example, Scottish Highland fighters liked to wield small shields known as targes, and as late as the 19th century, some non-industrialized peoples (such as Zulu warriors) employed them when waging wars.

In the 20th and 21st centuries, shields have been used by military and police units that specialize in anti-terrorist actions, hostage rescue, riot control and siege-breaking.

Prehistory

The oldest form of shield was a protection device designed to block attacks by hand weapons, such as swords, axes and maces, or ranged weapons like sling-stones and arrows. Shields have varied greatly in construction over time and place. Sometimes shields were made of metal, but wood or animal hide construction was much more common; wicker and even turtle shells have been used. Many surviving examples of metal shields are generally felt to be ceremonial rather than practical, for example the Yetholm-type shields of the Bronze Age, or the Iron Age Battersea shield.

History:

Ancient

Size and weight varied greatly. Lightly armored warriors relying on speed and surprise would generally carry light shields (pelte) that were either small or thin. Heavy troops might be equipped with robust shields that could cover most of the body. Many had a strap called a guige that allowed them to be slung over the user's back when not in use or on horseback. During the 14th–13th century BC, the Sards or Shardana, working as mercenaries for the Egyptian pharaoh Ramses II, utilized either large or small round shields against the Hittites. The Mycenaean Greeks used two types of shields: the "figure-of-eight" shield and a rectangular "tower" shield. These shields were made primarily from a wicker frame and then reinforced with leather. Covering the body from head to foot, the figure-of-eight and tower shield offered most of the warrior's body a good deal of protection in hand-to-hand combat. The Ancient Greek hoplites used a round, bowl-shaped wooden shield that was reinforced with bronze and called an aspis. The aspis was also the longest-lasting and most famous and influential of all of the ancient Greek shields. The Spartans used the aspis to create the Greek phalanx formation. Their shields offered protection not only for themselves but for their comrades to their left.

The heavily armored Roman legionaries carried large shields (scuta) that could provide far more protection, but made swift movement a little more difficult. The scutum originally had an oval shape, but gradually the curved tops and sides were cut to produce the familiar rectangular shape most commonly seen in the early Imperial legions. Famously, the Romans used their shields to create a tortoise-like formation called a testudo in which entire groups of soldiers would be enclosed in an armoured box to provide protection against missiles. Many ancient shield designs featured incuts of one sort or another. This was done to accommodate the shaft of a spear, thus facilitating tactics requiring the soldiers to stand close together forming a wall of shields.

Post-classical

Typical in the early European Middle Ages were round shields with light, non-splitting wood like linden, fir, alder, or poplar, usually reinforced with leather cover on one or both sides and occasionally metal rims, encircling a metal shield boss. These light shields suited a fighting style where each incoming blow is intercepted with the boss in order to deflect it. The Normans introduced the kite shield around the 10th century, which was rounded at the top and tapered at the bottom. This gave some protection to the user's legs, without adding too much to the total weight of the shield. The kite shield predominantly features enarmes, leather straps used to grip the shield tight to the arm. Used by foot and mounted troops alike, it gradually came to replace the round shield as the common choice until the end of the 12th century, when more efficient limb armour allowed the shields to grow shorter, and be entirely replaced by the 14th century.

As body armour improved, knights' shields became smaller, leading to the familiar heater shield style. Both kite and heater style shields were made of several layers of laminated wood, with a gentle curve in cross section. The heater style inspired the shape of the symbolic heraldic shield that is still used today. Eventually, specialised shapes were developed such as the bouche, which had a lance rest cut into the upper corner of the lance side, to help guide it in combat or tournament. Free-standing shields called pavises, which were propped up on stands, were used by medieval crossbowmen who needed protection while reloading.

In time, some armoured foot knights gave up shields entirely in favour of mobility and two-handed weapons. Other knights and common soldiers adopted the buckler, giving rise to the term "swashbuckler". The buckler is a small round shield, typically between 8 and 16 inches (20–40 cm) in diameter. The buckler was one of very few types of shield that were usually made of metal. Small and light, the buckler was easily carried by being hung from a belt; it gave little protection from missiles and was reserved for hand-to-hand combat where it served both for protection and offence. The buckler's use began in the Middle Ages and continued well into the 16th century.

In Italy, the targa, parma, and rotella were used by common people, fencers and even knights. The development of plate armour made shields less and less common, as it reduced the need for them. Lightly armoured troops continued to use shields after men-at-arms and knights ceased to use them. Shields continued in use even after gunpowder-powered weapons made them essentially obsolete on the battlefield. In the 18th century, the Scottish clans used a small, round targe that was partially effective against the firearms of the time, although it was arguably more often used against British infantry bayonets and cavalry swords in close-in fighting.

During the 19th century, non-industrial cultures with little access to guns were still using war shields. Zulu warriors carried large lightweight shields called Ishlangu made from a single ox hide supported by a wooden spine.[6] This was used in combination with a short spear (iklwa) and/or club. Other African shields include Glagwa from Cameroon or Nguba from Congo.

Modern

Law enforcement shields

Shields for protection from armed attack are still used by many police forces around the world. These modern shields are usually intended for two broadly distinct purposes. The first type, riot shields, are used for riot control and can be made from metal or polymers such as polycarbonate (Lexan or Makrolon) or boPET (Mylar). These typically offer protection from relatively large and low velocity projectiles, such as rocks and bottles, as well as blows from fists or clubs. Synthetic riot shields are normally transparent, allowing full use of the shield without obstructing vision. Similarly, metal riot shields often have a small window at eye level for this purpose. These riot shields are most commonly used to block and push back crowds when the users stand in a "wall" to block protesters, and to protect against shrapnel, projectiles like stones and bricks, and Molotov cocktails, as well as during hand-to-hand combat.

The second type of modern police shield is the bullet-resistant ballistic shield, also called a tactical shield. These shields are typically manufactured from advanced synthetics such as Kevlar and are designed to be bulletproof, or at least bullet resistant. Two types of shields are available:

* Light level IIIA shields are designed to stop pistol cartridges.

* Heavy level III and IV shields are designed to stop rifle cartridges.

Tactical shields often have a firing port so that the officer holding the shield can fire a weapon while being protected by the shield, and they often have a bulletproof glass viewing port. They are typically employed by specialist police, such as SWAT teams in high risk entry and siege scenarios, such as hostage rescue and breaching gang compounds, as well as in antiterrorism operations.

Law enforcement shields often have large signs stating "POLICE" (or the name of a force, such as "US MARSHALS") to indicate that the user is a law enforcement officer.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1931 2023-10-15 00:10:44

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,724

Re: Miscellany

1935) Film Festival

Gist

A film festival is an organized event at which many films are shown.

Summary

A film festival is a gathering, usually annual, for the purpose of evaluating new or outstanding motion pictures. Sponsored by national or local governments, industry, service organizations, experimental film groups, or individual promoters, the festivals provide an opportunity for filmmakers, distributors, critics, and other interested persons to attend film showings and meet to discuss current artistic developments in film. At the festivals, distributors can purchase films that they think can be marketed successfully in their own countries.

The first festival was held in Venice in 1932. Since World War II, film festivals have contributed significantly to the development of the motion-picture industry in many countries. The popularity of Italian films at the Cannes and Venice film festivals played an important part in the rebirth of the Italian industry and the spread of the postwar Neorealist movement. In 1951 Kurosawa Akira’s Rashomon won the Golden Lion at Venice, focusing attention on Japanese films. That same year the first American Art Film Festival at Woodstock, New York, stimulated the art-film movement in the United States.

Probably the best-known and most noteworthy of the hundreds of film festivals is held each spring in Cannes, France. Since 1947, people interested in films have gathered in that small resort town to attend official and unofficial showings of films. Other important festivals are held in Berlin, Karlovy Vary (Czech Republic), Toronto, Ouagadougou (Burkina Faso), Park City (Utah, U.S.), Hong Kong, Belo Horizonte (Brazil) and Venice. Short subjects and documentaries receive special attention at gatherings in Edinburgh, Mannheim and Oberhausen (both in Germany), and Tours (France). Some festivals feature films of one country, and since the late 1960s there have been special festivals for student filmmakers. Others are highly specialized, such as those that feature only underwater photography or those that deal with specific subjects, such as mountain climbing.

Details

A film festival is an organized, extended presentation of films in one or more cinemas or screening venues, usually in a single city or region. Increasingly, film festivals show some films outdoors. Films may be of recent date and, depending upon the festival's focus, can include international and domestic releases. Some film festivals focus on a specific filmmaker, genre of film (e.g. horror films), or subject matter. Several film festivals focus solely on presenting short films of a defined maximum length. Film festivals are typically annual events. Some film historians, including Jerry Beck, do not consider film festivals as official releases of the film.

The oldest film festival in the world is the Venice Film Festival. The most prestigious film festivals in the world, known as the "Big Five", are (listed chronologically according to the date of foundation): Venice, Cannes, Berlin (the original Big Three), Toronto, and Sundance.

History

(The Venice Film Festival is the oldest film festival in the world and one of the most prestigious and publicized.)

The Venice Film Festival in Italy began in 1932 and is the oldest film festival still running.

Mainland Europe's biggest independent film festival is ÉCU The European Independent Film Festival, which started in 2006 and takes place every spring in Paris, France. The Edinburgh International Film Festival is the longest-running festival in Great Britain as well as the longest continually running film festival in the world.

Australia's first and longest-running film festival is the Melbourne International Film Festival (1952), followed by the Sydney Film Festival (1954).

North America's first and longest-running short film festival is the Yorkton Film Festival, established in 1947. The first film festival in the United States was the Columbus International Film & Video Festival, also known as The Chris Awards, held in 1953. According to the Film Arts Foundation in San Francisco, "The Chris Awards (is) one of the most prestigious documentaries, educational, business and informational competitions in the U.S; (it is) the oldest of its kind in North America and celebrating its 54th year". It was followed four years later by the San Francisco International Film Festival, held in March 1957, which emphasized feature-length dramatic films. The festival played a major role in introducing foreign films to American audiences. Films in the first year included Akira Kurosawa's Throne of Blood and Satyajit Ray's Pather Panchali.

Today, thousands of film festivals take place around the world—from high-profile festivals such as Sundance Film Festival and Slamdance Film Festival (Park City, Utah), to horror festivals such as Terror Film Festival (Philadelphia), and the Park City Film Music Festival, the first U.S. film festival dedicated to honoring music in film.

Film Funding competitions such as Writers and Filmmakers were introduced when the cost of production could be lowered significantly and internet technology allowed for the collaboration of film production.

Film festivals have evolved significantly since the COVID-19 pandemic. Many festivals opted for virtual or hybrid festivals. The film industry, which was already in upheaval due to streaming options, has faced another major shift and movies that are showcased at festivals have an even shorter runway to online launches.

Notable film festivals

A queue to the 1999 Belgian-French film Rosetta at the Midnight Sun Film Festival in Sodankylä, Finland, in 2005.

The "Big Five" film festivals are considered to be Venice, Cannes, Berlin, Toronto and Sundance.

In North America, the Toronto International Film Festival is the most popular festival. Time wrote it had "grown from its place as the most influential fall film festival to the most influential film festival, period".

The Seattle International Film Festival is credited as being the largest film festival in the United States, regularly showing over 400 films in a month across the city.

Competitive feature films

The festivals in Berlin, Cairo, Cannes, Goa, Karlovy Vary, Locarno, Mar del Plata, Moscow, San Sebastián, Shanghai, Tallinn, Tokyo, Venice, and Warsaw are accredited by the International Federation of Film Producers Associations (FIAPF) in the category of competitive feature films. As a rule, for films to compete, they must first be released at the festival and must not have been shown at any other venue beforehand.

Experimental films

Ann Arbor Film Festival started in 1963. It is the oldest continually operated experimental film festival in North America, and has become one of the premier film festivals for independent and, primarily, experimental filmmakers to showcase work.

Independent films

In the U.S., Telluride Film Festival, Sundance Film Festival, Austin Film Festival, Austin's South by Southwest, New York City's Tribeca Film Festival, and Slamdance Film Festival are all considered significant festivals for independent film. The Zero Film Festival is significant as the first and only festival exclusive to self-financed filmmakers. The biggest independent film festival in the UK is Raindance Film Festival. The British Urban Film Festival (which specifically caters to Black and minority interests) was officially recognized in the 2020 New Year Honours list.

Subject specific films

A few film festivals have focused on highlighting specific issue topics or subjects. These festivals have included both mainstream and independent films. Some examples include military films, health-related film festivals, and human rights film festivals.

There are festivals, especially in the US, that highlight and promote films that are made by or are about various ethnic groups and nationalities or feature the cinema from a specific foreign country. These include festivals devoted to African-American, Asian-American, Mexican-American, Arab, Italian, German, French, Palestinian, and Native American cinema. The Deauville American Film Festival in France is devoted to the cinema of the United States.

Women's film festivals are also popular.

North American film festivals

The San Francisco International Film Festival, founded by Irving "Bud" Levin in 1957, is the oldest continuously running annual film festival in the United States. It highlights current trends in international filmmaking and video production with an emphasis on work that has not yet secured U.S. distribution.

The Vancouver International Film Festival, founded in 1958, is one of the largest film festivals in North America. It focuses on East Asian film, Canadian film, and nonfiction film. In 2016, it drew an audience of 133,000 and screened 324 films.