Math Is Fun Forum

#1676 2023-02-23 20:06:50

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,931

Re: Miscellany

1579) International Development Association

Summary

The International Development Association (IDA) (French: Association internationale de développement) is an international financial institution which offers concessional loans and grants to the world's poorest developing countries. The IDA is a member of the World Bank Group and is headquartered in Washington, D.C., in the United States. It was established in 1960 to complement the existing International Bank for Reconstruction and Development by lending to developing countries which suffer from the lowest gross national income, from troubled creditworthiness, or from the lowest per capita income. The International Development Association and the International Bank for Reconstruction and Development are together generally known as the World Bank, as they share the same executive leadership and operate with the same staff.

The association shares the World Bank's mission of reducing poverty and aims to provide affordable development financing to countries whose credit risk is so prohibitive that they cannot afford to borrow commercially or from the Bank's other programs. The IDA's stated aim is to assist the poorest nations in growing more quickly, equitably, and sustainably to reduce poverty. The IDA is the single largest provider of funds to economic and human development projects in the world's poorest nations. From 2000 to 2010, it financed projects which recruited and trained 3 million teachers, immunized 310 million children, funded $792 million in loans to 120,000 small and medium enterprises, built or restored 118,000 kilometers of paved roads, built or restored 1,600 bridges, and expanded access to improved water to 113 million people and improved sanitation facilities to 5.8 million people. The IDA has issued a total of US$238 billion in loans and grants since its launch in 1960. Thirty-six of the association's borrowing countries have graduated from their eligibility for its concessional lending. However, nine of these countries have relapsed and have not re-graduated.

Details

What is IDA?

The World Bank’s International Development Association (IDA) is one of the largest and most effective platforms for fighting extreme poverty in the world’s lowest income countries.

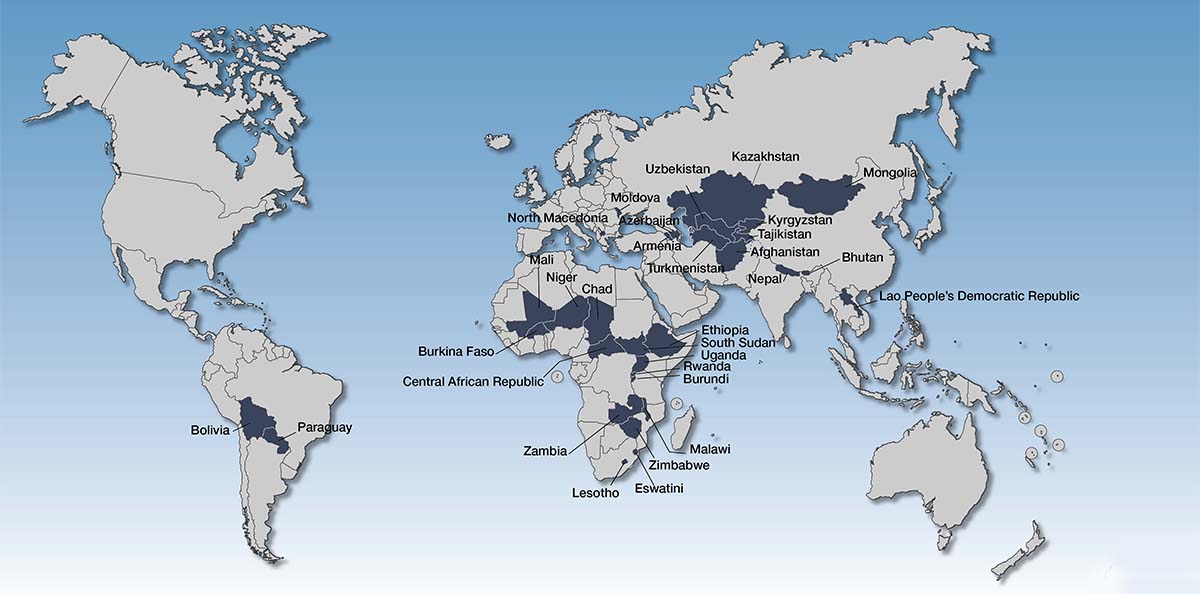

* IDA works in 74 countries in Africa, East Asia & Pacific, South Asia, Europe & Central Asia, Latin America & Caribbean, and Middle East & North Africa.

* IDA aims to reduce poverty by providing financing and policy advice for programs that boost economic growth, build resilience, and improve the lives of poor people around the world.

* More than half of active IDA countries already receive all, or half, of their IDA resources on grant terms, which carry no repayments at all. Grants are targeted to low-income countries at higher risk of debt distress.

* Over the past 62 years, IDA has provided about $458 billion for investments in 114 countries. IDA also has a strong track record in supporting countries through multiple crises.

How is IDA funded?

* IDA partners and representatives from borrower countries come together every three years to replenish IDA funds and review IDA’s policies. The replenishment consists of contributions from IDA donors, the World Bank, and financing raised from the capital markets.

* Since its founding in 1960, IDA has had 20 replenishment cycles. The current 20th cycle, known as IDA20, was replenished in December 2021. It took place one year earlier than scheduled to meet the unprecedented need brought about by the COVID-19 pandemic in developing countries.

* The $93 billion IDA20 package was made possible by donor contributions from 52 high- and middle-income countries totaling $23.5 billion, with additional financing raised in the capital markets, repayments, and the World Bank’s own contributions.

What does the IDA20 program include?

* IDA is a multi-issue institution and supports a range of development activities that pave the way toward equality, economic growth, job creation, higher incomes, and better living conditions.

* To help IDA countries address multiple crises and restore their trajectories to the 2030 development agenda, IDA20 will focus on Building Back Better from the Crisis: Towards a Green, Resilient and Inclusive Future. The IDA20 cycle runs from July 1, 2022, to June 30, 2025.

IDA20 will be supported by five special themes:

* Human Capital: Address the current crises and lay the foundations for an inclusive recovery. This theme will continue to help countries manage the pandemic by deploying vaccination programs, scaling up safety nets, and building strong and pandemic-ready health systems.

* Climate: Raise the ambition to build back better and greener; scale up investments in renewable energy, resilience, and mitigation, while tackling issues like nature and biodiversity.

* Gender: Scale up efforts to close social and economic gaps between women and men, boys and girls. It will address issues like economic inclusion, gender-based violence, childcare, and reinforcing women’s land rights.

* Fragility, Conflict, and Violence (FCV): Address drivers of FCV, support policy reforms for refugees, and scale up regional initiatives in the Sahel, Lake Chad, and Horn of Africa.

* Jobs and Economic Transformation: Enable better jobs for more people through a green, resilient, and inclusive recovery. IDA20 will continue to address macroeconomic instability, support reforms and public investments, and focus on quality infrastructure, renewable energy, and inclusive urban development.

IDA20 will deepen recovery efforts by focusing on four cross-cutting issues:

* Crisis Preparedness: Strengthen national systems that can be adapted quickly, and shock preparedness investments to increase country readiness (e.g., shock-responsive safety nets).

* Debt Sustainability and Transparency: The Sustainable Development Finance Policy will continue to be key to support countries on debt sustainability, transparency, and management.

* Governance and Institutions: Strengthen public institutions to create a conducive environment for a sustainable recovery. Reinforce domestic resource mobilization, digital development, and combat illicit financial flows.

* Technology: Speed up digital transformation with the focus on digital infrastructure, skills, financial services, and businesses. Also address the risks of digital exclusion and support the creation of reliable, cyber secure data systems.

As part of the package, IDA20 expects to deliver the following results:

* essential health, nutrition, and population services for up to 430 million people,

* immunizations for up to 200 million children,

* social safety net programs for up to 375 million people,

* new/improved electricity service for up to 50 million people,

* access to clean cooking for 20 million people, and

* improved access to water sources for up to 20 million people.

What are some recent examples of IDA’s work?

Here are some recent examples of how IDA is empowering countries towards a resilient recovery:

* Across Benin, Malawi, and Côte d’Ivoire in Africa, and across South Asia, IDA is working with partners like the World Health Organization to finance vaccine purchase and deployment, and address hesitancy.

* In West Africa, an Ebola-era disease surveillance project has prepared countries to face the COVID-19 health crisis.

* In Tonga, IDA’s emergency funding allowed the government to respond to the eruption and tsunami in January 2022. The project strengthened Tonga’s resilience to natural and climate-related risks by facilitating a significant reform of disaster risk management legislation.

* In the Sahel, where climate change is compounding the impacts of COVID-19, IDA is setting up monitoring initiatives, strengthening existing early warning systems, and providing targeted responses to support the agro-pastoral sectors.

* In Yemen, IDA provides critical facilities backed by solar systems to more than 3.2 million people – 51 percent female – including water, educational services, and health care.

* In Bangladesh, IDA helped restart the immunization of children under 12 months after the lockdown due to the COVID-19 outbreak in 2020. It enabled the country to vaccinate 28,585 children in 2020 and 25,864 in 2021 in the camps for the displaced Rohingya people.

* Across Burkina Faso, Chad, Mali, and Niger, IDA’s response helped two million people benefit through cash transfers and food vouchers for basic food needs. Some 30,000 vulnerable farmers received digital coupons to access seeds and fertilizers, and 73,500 people, of which 32,500 were women, were provided temporary jobs through land restoration activities.

Additional Information

International Development Association (IDA) is a United Nations specialized agency affiliated with but legally and financially distinct from the International Bank for Reconstruction and Development (World Bank). It was instituted in September 1960 to make loans on more flexible terms than those of the World Bank. IDA members must be members of the bank, and the bank’s officers serve as IDA’s ex officio officers. Headquarters are in Washington, D.C.

Most of the IDA’s resources have come from the subscriptions and supplementary contributions of member countries, chiefly the 26 wealthiest. Although the wealthier members pay their subscriptions in gold or freely convertible currencies, the less developed nations may pay 10 percent in this form and the remainder in their own currencies.

The International Development Association (IDA) is one of the world’s largest and most effective platforms for tackling extreme poverty. IDA is the part of the World Bank that helps 74 of the world’s poorest countries and is their main source of funding for social services. IDA aims to reduce poverty by providing grants, interest-free loans, and policy advice for programs that promote growth, increase resilience, and improve the lives of the world’s poor. Over the past 60 years, IDA has invested approximately $422 billion in 114 countries. Representatives of IDA partners and borrower countries meet every three years to replenish IDA resources and review IDA policies. The replenishment includes contributions from IDA donors, contributions from the World Bank, and funds raised in capital markets. Since its inception in 1960, IDA has had 19 regular replenishments. The 19th round, called IDA19, was completed at $82 billion in December 2019, of which $23.5 billion came from IDA donors. Pressed by the COVID-19 crisis, the World Bank allocated nearly half of IDA19’s resources to meet the financing needs of its first fiscal year (July 2020 to June 2021). In February 2021, representatives of IDA donors and borrower countries agreed to bring the IDA20 cycle forward by one year and to shorten the IDA19 cycle to two years. IDA19 now covers July 2020 to June 2022 and IDA20 covers July 2022 to June 2025.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1677 2023-02-24 19:25:41

- Jai Ganesh

Re: Miscellany

1580) Isosurface

Gist

An isosurface is a three-dimensional analog of an isoline. It is a surface that represents points of a constant value (e.g. pressure, temperature, velocity, density) within a volume of space; in other words, it is a level set of a continuous function whose domain is 3-space.

Details

An isosurface is a three-dimensional analog of an isoline. It is a surface that represents points of a constant value (e.g. pressure, temperature, velocity, density) within a volume of space; in other words, it is a level set of a continuous function whose domain is 3-space.

The term isoline is also sometimes used for domains of more than 3 dimensions.
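The level-set definition above can be illustrated with a small Python sketch. The field `distance_field`, the isovalue 1.0, and the helper `find_crossing` are assumptions chosen for this example, not part of any particular library: the sketch walks along a few rays from the origin and uses bisection to locate the point on each ray where the field takes the chosen constant value.

```python
import math

def distance_field(x, y, z):
    """Example scalar field: distance from the origin (an assumed test field)."""
    return math.sqrt(x * x + y * y + z * z)

def find_crossing(field, direction, isovalue, t_max=10.0, tol=1e-9):
    """Bisect along the ray t * direction to find a point where field == isovalue.

    Assumes the field is below the isovalue at t = 0 and above it at t = t_max.
    """
    lo, hi = 0.0, t_max
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        x, y, z = (mid * d for d in direction)
        if field(x, y, z) < isovalue:
            lo = mid
        else:
            hi = mid
    t = 0.5 * (lo + hi)
    return tuple(t * d for d in direction)

# Points found along different directions all lie on the same isosurface,
# which for this field and isovalue 1.0 is the unit sphere.
directions = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.6, 0.0, 0.8), (1.0, 2.0, 2.0)]
surface_points = [find_crossing(distance_field, d, 1.0) for d in directions]
```

Every point in `surface_points` has the same field value, which is what makes the set of all such points a surface of constant value.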

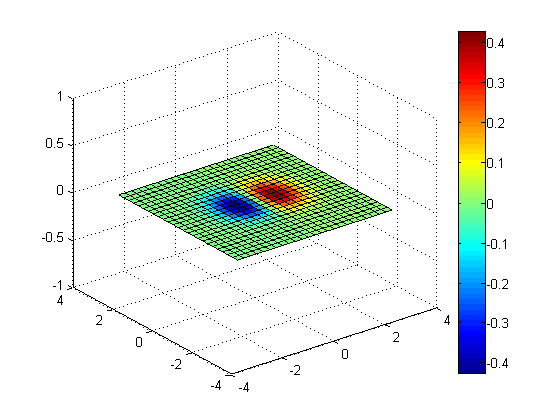

Isosurface of vorticity trailed from a propeller blade. Note that this is an isosurface plotted with a colormapped slice.

Applications

Isosurfaces are normally displayed using computer graphics, and are used as data visualization methods in computational fluid dynamics (CFD), allowing engineers to study features of a fluid flow (gas or liquid) around objects, such as aircraft wings. An isosurface may represent an individual shock wave in supersonic flight, or several isosurfaces may be generated showing a sequence of pressure values in the air flowing around a wing. Isosurfaces tend to be a popular form of visualization for volume datasets since they can be rendered by a simple polygonal model, which can be drawn on the screen very quickly.

In medical imaging, isosurfaces may be used to represent regions of a particular density in a three-dimensional CT scan, allowing the visualization of internal organs, bones, or other structures.

Many other disciplines that work with three-dimensional data, such as pharmacology, chemistry, geophysics, and meteorology, also use isosurfaces to extract information from it.

Implementation algorithms:

Marching cubes

The marching cubes algorithm was first published in the 1987 SIGGRAPH proceedings by Lorensen and Cline, and it creates a surface by intersecting the edges of a data volume grid with the volume contour. Where the surface intersects the edge the algorithm creates a vertex. By using a table of different triangles depending on different patterns of edge intersections the algorithm can create a surface. This algorithm has solutions for implementation both on the CPU and on the GPU.
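Two building blocks of the description above can be sketched in Python: classifying the eight corners of one grid cell into a case number, and creating a vertex where the surface crosses an edge. This is only a sketch under assumptions. The corner ordering, the "below the isovalue means inside" convention, and the function names `cube_index` and `edge_vertex` are chosen for illustration, and the 256-entry triangle table that the case number would index is omitted.

```python
def cube_index(corner_values, isovalue):
    """Pack the inside/outside state of the 8 cell corners into one 8-bit case number."""
    index = 0
    for bit, value in enumerate(corner_values):
        if value < isovalue:
            index |= 1 << bit
    return index

def edge_vertex(p0, p1, v0, v1, isovalue):
    """Linearly interpolate the point on edge p0-p1 where the field equals isovalue."""
    t = (isovalue - v0) / (v1 - v0)
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))

# One cell whose corner 0 is below the isovalue and all other corners above it.
corners = [0.2, 0.9, 0.8, 0.7, 0.9, 0.8, 0.9, 0.7]
case = cube_index(corners, isovalue=0.5)

# The surface crosses the edge between corner 0 and corner 1 (assumed to lie
# along the x axis), so a vertex is created part of the way along that edge.
vertex = edge_vertex((0.0, 0.0, 0.0), (1.0, 0.0, 0.0), corners[0], corners[1], 0.5)
```

In a full implementation the case number selects which edges carry vertices and how those vertices are joined into triangles.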

Asymptotic decider

The asymptotic decider algorithm was developed as an extension to marching cubes in order to resolve the possibility of ambiguity in it.

Marching tetrahedra

The marching tetrahedra algorithm was developed as an extension to marching cubes in order to solve an ambiguity in that algorithm and to create higher quality output surface.

Surface nets

The Surface Nets algorithm places an intersecting vertex in the middle of a volume voxel instead of at the edges, leading to a smoother output surface.

Dual contouring

The dual contouring algorithm was first published in the 2002 SIGGRAPH proceedings by Ju and Losasso, developed as an extension to both surface nets and marching cubes. It retains a dual vertex within the voxel but no longer at the center. Dual contouring leverages the position and normal of where the surface crosses the edges of a voxel to interpolate the position of the dual vertex within the voxel. This has the benefit of retaining sharp or smooth surfaces where surface nets often look blocky or incorrectly beveled. Dual contouring often uses surface generation that leverages octrees as an optimization to adapt the number of triangles in output to the complexity of the surface.
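The difference between placing the vertex at the cell centre and placing it from edge positions and normals can be shown with a simplified two-dimensional Python sketch. It is a sketch under assumptions, not the published method: it works on a single square cell, the function names are hypothetical, and it solves a plain least-squares problem without the regularisation, clamping to the cell, or octree handling that a practical implementation would need.

```python
def cell_center_vertex(cell_min, cell_max):
    """Surface-nets style placement as described above: the middle of the cell."""
    return tuple(0.5 * (a + b) for a, b in zip(cell_min, cell_max))

def dual_contouring_vertex(crossings):
    """Least-squares point closest to the tangent lines at the edge crossings.

    crossings is a list of (point, unit_normal) pairs in 2D. Each pair defines
    the line n . (x - p) = 0; the function minimises the sum of squared
    distances to those lines by solving the 2x2 normal equations.
    """
    a11 = a12 = a22 = b1 = b2 = 0.0
    for (px, py), (nx, ny) in crossings:
        d = nx * px + ny * py
        a11 += nx * nx
        a12 += nx * ny
        a22 += ny * ny
        b1 += nx * d
        b2 += ny * d
    det = a11 * a22 - a12 * a12
    if abs(det) < 1e-12:
        raise ValueError("normals are parallel; the minimiser is not unique")
    x = (b1 * a22 - b2 * a12) / det
    y = (a11 * b2 - a12 * b1) / det
    return (x, y)

# A unit cell containing the sharp corner of a box at (0.7, 0.6): the surface
# crosses the bottom edge with normal +x and the left edge with normal +y.
crossings = [((0.7, 0.0), (1.0, 0.0)), ((0.0, 0.6), (0.0, 1.0))]
center = cell_center_vertex((0.0, 0.0), (1.0, 1.0))
sharp = dual_contouring_vertex(crossings)
```

Here the centre placement ignores where the corner actually is, while the normal-based placement lands on the intersection of the two tangent lines, which is why sharp features survive.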

Manifold dual contouring

Manifold dual contouring includes an analysis of the octree neighborhood to maintain continuity of the manifold surface.

Examples:

Examples of isosurfaces are 'Metaballs' or 'blobby objects' used in 3D visualisation. A more general way to construct an isosurface is to use the function representation.
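A metaball surface can be sketched in Python as the isosurface of a summed field. The inverse-square falloff, the threshold of 1.0, and the names `metaball_field` and `is_inside` are assumptions for this example; other falloff functions are also used in practice.

```python
def metaball_field(point, balls):
    """Sum of r^2 / d^2 contributions; one common falloff choice (assumed here)."""
    total = 0.0
    for center, radius in balls:
        d2 = sum((p - c) ** 2 for p, c in zip(point, center))
        if d2 == 0.0:
            return float("inf")
        total += radius * radius / d2
    return total

def is_inside(point, balls, threshold=1.0):
    """A point is inside the blob when the summed field reaches the threshold."""
    return metaball_field(point, balls) >= threshold

# Two balls of radius 0.8 whose centres are 2 units apart do not touch as
# plain spheres, but their fields add up in the gap between them.
balls = [((-1.0, 0.0, 0.0), 0.8), ((1.0, 0.0, 0.0), 0.8)]
midpoint = (0.0, 0.0, 0.0)
merged = is_inside(midpoint, balls)
alone = is_inside(midpoint, balls[:1])
```

The midpoint is inside the combined surface but outside either ball on its own, which is the smooth merging that gives blobby objects their look.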

#1678 2023-02-25 01:58:50

- Jai Ganesh

Re: Miscellany

1581) Ventriloquism

Summary

Ventriloquism is the art of “throwing” the voice, i.e., speaking in such a manner that the sound seems to come from a distance or from a source other than the speaker. At the same time, the voice is disguised (partly by its heightened pitch), adding to the effect. The art of ventriloquism was formerly supposed to result from a peculiar use of the stomach during inhalation—hence the name, from Latin venter and loqui, “belly-speaking.” In fact, the words are formed in the normal manner, but the breath is allowed to escape slowly, the tones being muffled by narrowing the glottis and the mouth being opened as little as possible, while the tongue is retracted and only its tip moves. This pressure on the vocal cords diffuses the sound; the greater the pressure, the greater the illusion of distance.

A figure, or dummy, is commonly used by the ventriloquist to assist in the deception. The ventriloquist animates the dummy by moving its mouth while his own lips remain still, thereby completing the illusion that the voice is the dummy’s, not his. When not using a dummy, the ventriloquist employs pantomime to direct the attention of his listeners to the location or object from which the sound presumably emanates.

Ventriloquism is of ancient origin. Traces of the art are found in Egyptian and Hebrew archaeology. Eurycles of Athens was the most celebrated of Greek ventriloquists, who were called, after him, eurycleides, as well as engastrimanteis (“belly prophets”). Many peoples are adepts in ventriloquism—e.g., Zulus, Maoris, and Eskimo. The first known ventriloquist as such was Louis Brabant, valet to the French king Francis I in the 16th century. Henry King, called the King’s Whisperer, had the same function for the English king Charles I in the first half of the 17th century. The technique was perfected in the 18th century. It is also well known in India and China. In Europe and the United States, ventriloquism holds a place in popular entertainment. Notable ventriloquists have included Edgar Bergen in the United States and Robert Lamouret in France.

Details

Ventriloquism, or ventriloquy, is a performance act of stagecraft in which a person (a ventriloquist) creates the illusion that their voice is coming from elsewhere, usually a puppeteered prop known as a "dummy". The act of ventriloquism is ventriloquizing, and the ability to do so is commonly called in English the ability to "throw" one's voice.

History:

Origins

Originally, ventriloquism was a religious practice. The name comes from the Latin for 'to speak from the stomach': venter (belly) and loqui (speak). The Greeks called this gastromancy. The noises produced by the stomach were thought to be the voices of the unliving, who took up residence in the stomach of the ventriloquist. The ventriloquist would then interpret the sounds, as they were thought to be able to speak to the dead, as well as foretell the future. One of the earliest recorded prophets to use this technique was the Pythia, the priestess at the temple of Apollo in Delphi, who acted as the conduit for the Delphic Oracle.

One of the most successful early gastromancers was Eurykles, a prophet at Athens; gastromancers came to be referred to as Euryklides in his honour. Other parts of the world also have a tradition of ventriloquism for ritual or religious purposes; historically there have been adepts of this practice among the Zulu, Inuit, and Māori peoples.

Emergence as entertainment

Sadler's Wells Theatre in the early 19th century, at a time when ventriloquist acts were becoming increasingly popular.

The shift from ventriloquism as manifestation of spiritual forces toward ventriloquism as entertainment happened in the eighteenth century at travelling funfairs and market towns. An early depiction of a ventriloquist dates to 1754 in England, where Sir John Parnell is depicted in the painting An Election Entertainment by William Hogarth as speaking via his hand. In 1757, the Austrian Baron de Mengen performed with a small doll.

By the late 18th century, ventriloquist performances were an established form of entertainment in England, although most performers "threw their voice" to make it appear that it emanated from far away (known as distant ventriloquism), rather than the modern method of using a puppet (near ventriloquism). A well-known ventriloquist of the period, Joseph Askins, who performed at the Sadler's Wells Theatre in London in the 1790s advertised his act as "curious ad libitum Dialogues between himself and his invisible familiar, Little Tommy". However, other performers were beginning to incorporate dolls or puppets into their performance, notably the Irishman James Burne who "carries in his pocket, an ill-shaped doll, with a broad face, which he exhibits ... as giving utterance to his own childish jargon," and Thomas Garbutt.

The entertainment came of age during the era of the music hall in the United Kingdom and vaudeville in the United States. George Sutton began to incorporate a puppet act into his routine at Nottingham in the 1830s, followed by Fred Neiman later in the century, but it is Fred Russell who is regarded as the father of modern ventriloquism. In 1886, he was offered a professional engagement at the Palace Theatre in London and took up his stage career permanently. His act, based on the cheeky-boy dummy "Coster Joe" that would sit in his lap and 'engage in a dialogue' with him was highly influential for the entertainment format and was adopted by the next generation of performers. A blue plaque has been embedded in a former residence of Russell by the British Heritage Society which reads 'Fred Russell the father of ventriloquism lived here'.

Ventriloquist Edgar Bergen and his best-known sidekick, Charlie McCarthy, in the film Stage Door Canteen (1943)

Fred Russell's successful comedy team format was applied by the next generation of ventriloquists. It was taken forward by the British Arthur Prince with his dummy Sailor Jim, who became one of the highest paid entertainers on the music hall circuit, and by the Americans The Great Lester, Frank Byron Jr., and Edgar Bergen. Bergen popularized the idea of the comedic ventriloquist. Bergen, together with his favourite figure, Charlie McCarthy, hosted a radio program that was broadcast from 1937 to 1956. It was the #1 program on the nights it aired. Bergen continued performing until his death in 1978, and his popularity inspired many other famous ventriloquists who followed him, including Paul Winchell, Jimmy Nelson, David Strassman, Jeff Dunham, Terry Fator, Ronn Lucas, Wayland Flowers, Shari Lewis, Willie Tyler, Jay Johnson, Nina Conti, Paul Zerdin, and Darci Lynne. Another ventriloquist act popular in the United States in the 1950s and 1960s was Señor Wences.

The art of ventriloquism was popularized by Y. K. Padhye and M. M. Roy in South India, who are believed to be the pioneers of this field in India. Y. K. Padhye's son Ramdas Padhye borrowed from him and made the art popular amongst the masses through his performance on television. Ramdas Padhye's name is synonymous with puppet characters like Ardhavatrao (also known as Mr. Crazy), Tatya Vinchu and Bunny the Funny which features in a television advertisement for Lijjat Papad, an Indian snack. Ramdas Padhye's son Satyajit Padhye is also a ventriloquist.

The popularity of ventriloquism fluctuates. In the UK in 2010, there were only 15 full-time professional ventriloquists, down from around 400 in the 1950s and '60s. A number of modern ventriloquists have developed a following as the public taste for live comedy grows. In 2007, Zillah & Totte won the first season of Sweden's Got Talent and became one of Sweden's most popular family/children entertainers. A feature-length documentary about ventriloquism, I'm No Dummy, was released in 2010. Three ventriloquists have won America's Got Talent: Terry Fator in 2007, Paul Zerdin in 2015 and Darci Lynne in 2017.

Vocal technique

One difficulty ventriloquists face is that all the sounds that they make must be made with lips slightly separated. For the labial sounds f, v, b, p, and m, the only choice is to replace them with others. A widely parodied example of this difficulty is the "gottle o' gear", from the reputed inability of less skilled practitioners to pronounce "bottle of beer". If variations of the sounds th, d, t, and n are spoken quickly, it can be difficult for listeners to notice a difference.

Ventriloquist's dummy

Modern ventriloquists use multiple types of puppets in their presentations, ranging from soft cloth or foam puppets (Verna Finly's work is a pioneering example) and flexible latex puppets (such as Steve Axtell's creations) to the traditional and familiar hard-headed knee figure (Tim Selberg's mechanized carvings). The classic dummies used by ventriloquists (the technical name for which is ventriloquial figure) vary in size anywhere from twelve inches tall to human-size and larger, with the height usually 34–42 in (86–107 cm). Traditionally, this type of puppet has been made from papier-mâché or wood. In modern times, other materials are often employed, including fiberglass-reinforced resins, urethanes, filled (rigid) latex, and neoprene.

Great names in the history of dummy making include Jeff Dunham, Frank Marshall (the Chicago creator of Bergen's Charlie McCarthy, Nelson's Danny O'Day, and Winchell's Jerry Mahoney), Theo Mack and Son (Mack carved Charlie McCarthy's head), Revello Petee, Kenneth Spencer, Cecil Gough, and Glen & George McElroy. The McElroy brothers' figures are still considered by many ventriloquists as the apex of complex movement mechanics, with as many as fifteen facial and head movements controlled by interior finger keys and switches. Jeff Dunham referred to his McElroy figure Skinny Duggan as "the Stradivarius of dummies." The Juro Novelty Company also manufactured dummies.

Phobia

The plots of some films and television programs are based on "killer toy" dummies that are alive and horrific. These include "The Dummy", a May 4, 1962 episode of The Twilight Zone; Devil Doll; Dead Silence; Zapatlela; Buffy The Vampire Slayer; Goosebumps; Tales from the Crypt; Gotham (the episode "Nothing's Shocking"); Friday the 13th: The Series; Toy Story 4; and Doctor Who in different episodes. This genre has also been satirized on television in ALF (the episode "I'm Your Puppet"); Seinfeld (the episode "The Chicken Roaster"); and the comic strip Monty.

Some psychological horror films have plots based on psychotic ventriloquists who believe their dummies are alive and use them as surrogates to commit frightening acts including murder. Examples of these include the 1978 film Magic and the 1945 anthology film Dead of Night.

Literary examples of frightening ventriloquist dummies include Gerald Kersh's The Horrible Dummy and the story "The Glass Eye" by John Keir Cross. In music, NRBQ's video for their song "Dummy" (2004) features four ventriloquist dummies modelled after the band members who 'lip-sync' the song while wandering around a dark, abandoned house.

#1679 2023-02-26 01:45:22

- Jai Ganesh

Re: Miscellany

1582) Mirage

Summary

Mirage, in optics, is the deceptive appearance of a distant object or objects caused by the bending of light rays (refraction) in layers of air of varying density.

Under certain conditions, such as over a stretch of pavement or desert air heated by intense sunshine, the air rapidly cools with elevation and therefore increases in density and refractive power. Sunlight reflected downward from the upper portion of an object—for example, the top of a camel in the desert—will be directed through the cool air in the normal way. Although the light would not be seen ordinarily because of the angle, it curves upward after it enters the rarefied hot air near the ground, thus being refracted to the observer’s eye as though it originated below the heated surface. A direct image of the camel is seen also because some of the reflected rays enter the eye in a straight line without being refracted. The double image seems to be that of the camel and its upside-down reflection in water. When the sky is the object of the mirage, the land is mistaken for a lake or sheet of water.

Sometimes, as over a body of water, a cool, dense layer of air underlies a heated layer. An opposite phenomenon will then prevail, in which light rays will reach the eye that were originally directed above the line of sight. Thus, an object ordinarily out of view, like a boat below the horizon, will be apparently lifted into the sky. This phenomenon is called looming.

Details

A mirage is a naturally-occurring optical phenomenon in which light rays bend via refraction to produce a displaced image of distant objects or the sky. The word comes to English via the French (se) mirer, from the Latin mirari, meaning "to look at, to wonder at".

Mirages can be categorized as "inferior" (meaning lower), "superior" (meaning higher) and "Fata Morgana", one kind of superior mirage consisting of a series of unusually elaborate, vertically stacked images, which form one rapidly-changing mirage.

In contrast to a hallucination, a mirage is a real optical phenomenon that can be captured on camera, since light rays are actually refracted to form the false image at the observer's location. What the image appears to represent, however, is determined by the interpretive faculties of the human mind. For example, inferior images on land are very easily mistaken for the reflections from a small body of water.

Inferior mirage

In an inferior mirage, the mirage image appears below the real object. The real object in an inferior mirage is the (blue) sky or any distant (therefore bluish) object in that same direction. The mirage causes the observer to see a bright and bluish patch on the ground.

Light rays coming from a particular distant object all travel through nearly the same layers of air, and all are refracted at about the same angle. Therefore, rays coming from the top of the object will arrive lower than those from the bottom. The image is usually upside-down, enhancing the illusion that the sky image seen in the distance is a specular reflection on a puddle of water or oil acting as a mirror.

While the aerodynamics are highly active, the image of the inferior mirage is stable, unlike the Fata Morgana, which can change within seconds. Since warmer air rises while cooler air (being denser) sinks, the layers will mix, causing turbulence. The image will be distorted accordingly; it may vibrate or be stretched vertically (towering) or compressed vertically (stooping). A combination of vibration and extension is also possible. If several temperature layers are present, several mirages may mix, perhaps causing double images. In any case, mirages are usually not larger than about half a degree high (roughly the angular diameter of the Sun and Moon) and are from objects between dozens of meters and a few kilometers away.

Heat haze

A hot-road mirage, in which "fake water" appears on the road, is the most commonly observed instance of an inferior mirage.

Heat haze, also called heat shimmer, refers to the inferior mirage observed when viewing objects through a mass of heated air. Common instances when heat haze occurs include images of objects viewed across asphalt concrete (also known as tarmac) roads and over masonry rooftops on hot days, above and behind fire (as in burning candles, patio heaters, and campfires), and through exhaust gases from jet engines. When appearing on roads due to the hot asphalt, it is often referred to as a "highway mirage". It also occurs in deserts; in that case, it is referred to as a "desert mirage". Both tarmac and sand can become very hot when exposed to the sun, easily being more than 10 °C (18 °F) higher than the air a meter (3.3 feet) above, enough to make conditions suitable to cause the mirage.

Convection causes the temperature of the air to vary, and the variation between the hot air at the surface of the road and the denser cool air above it causes a gradient in the refractive index of the air. This produces a blurred shimmering effect, which hinders the ability to resolve the image and increases when the image is magnified through a telescope or telephoto lens.

Light from the sky at a shallow angle to the road is refracted by the index gradient, making it appear as if the sky is reflected by the road's surface. This might appear as a pool of liquid (usually water, but possibly others, such as oil) on the road, as some types of liquid also reflect the sky. The illusion moves into the distance as the observer approaches the miraged object, giving the same effect as approaching a rainbow.

Heat haze is not related to the atmospheric phenomenon of haze.

Superior mirage

A superior mirage is one in which the mirage image appears to be located above the real object. A superior mirage occurs when the air below the line of sight is colder than the air above it. This unusual arrangement is called a temperature inversion, since warm air above cold air is the opposite of the normal temperature gradient of the atmosphere during the daytime. Passing through the temperature inversion, the light rays are bent down, and so the image appears above the true object, hence the name superior.

Superior mirages are quite common in polar regions, especially over large sheets of ice that have a uniform low temperature. Superior mirages also occur at more moderate latitudes, although in those cases they are weaker and tend to be less smooth and stable. For example, a distant shoreline may appear to tower and look higher (and, thus, perhaps closer) than it really is. Because of the turbulence, there appear to be dancing spikes and towers. This type of mirage is also called the Fata Morgana or hafgerðingar in the Icelandic language.

A superior mirage can be right-side up or upside-down, depending on the distance of the true object and the temperature gradient. Often the image appears as a distorted mixture of up and down parts.

Since Earth is round, if the downward bending curvature of light rays is about the same as the curvature of Earth, light rays can travel large distances, including from beyond the horizon. This was observed and documented in 1596, when a ship in search of the Northeast passage became stuck in the ice at Novaya Zemlya, above the Arctic Circle. The Sun appeared to rise two weeks earlier than expected; the real Sun had still been below the horizon, but its light rays followed the curvature of Earth. This effect is often called a Novaya Zemlya mirage. For every 111.12 kilometres (69.05 mi) that light rays travel parallel to Earth's surface, the Sun will appear 1° higher on the horizon. The inversion layer must have just the right temperature gradient over the whole distance to make this possible.
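The 111.12 km figure above equals 60 nautical miles, that is, one degree of arc along Earth's surface when a nautical mile is taken as one arc-minute. A minimal Python sketch of that arithmetic follows; the function names and the mean Earth radius of 6,371 km used for the cross-check are assumptions for illustration, not part of the original text.

```python
import math

NAUTICAL_MILE_KM = 1.852        # international nautical mile (exact by definition)
MEAN_EARTH_RADIUS_KM = 6371.0   # assumed mean radius, used only as a cross-check


def km_per_degree_from_nautical_miles() -> float:
    """One degree is 60 arc-minutes; one nautical mile spans about one arc-minute."""
    return 60 * NAUTICAL_MILE_KM


def km_per_degree_from_radius(radius_km: float = MEAN_EARTH_RADIUS_KM) -> float:
    """Arc length of one degree on a sphere of the given radius."""
    return 2 * math.pi * radius_km / 360


def apparent_lift_degrees(path_km: float) -> float:
    """Apparent elevation gain of the Sun for a ray hugging the surface over path_km."""
    return path_km / km_per_degree_from_nautical_miles()


if __name__ == "__main__":
    print(round(km_per_degree_from_nautical_miles(), 2))
    print(round(km_per_degree_from_radius(), 2))
    print(round(apparent_lift_degrees(555.6), 2))
```

The two estimates differ slightly because a sphere with the assumed mean radius is only an approximation of Earth's shape.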

In the same way, ships that are so far away that they should not be visible above the geometric horizon may appear on or even above the horizon as superior mirages. This may explain some stories about flying ships or coastal cities in the sky, as described by some polar explorers. These are examples of so-called Arctic mirages, or hillingar in Icelandic.

If the vertical temperature gradient is +12.9 °C (23.2 °F) per 100 meters/330 feet (where the positive sign means the temperature increases at higher altitudes) then horizontal light rays will just follow the curvature of Earth, and the horizon will appear flat. If the gradient is less (as it almost always is) the rays are not bent enough and get lost in space, which is the normal situation of a spherical, convex "horizon".
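The critical gradient quoted above can be reproduced, approximately, from a commonly quoted rule of thumb for visible light: the ratio of ray curvature to Earth's curvature is about 503 × (P / T²) × (0.0343 + dT/dh), with P in hPa, T in kelvin and dT/dh in kelvin per metre. The sketch below is only an illustration under stated assumptions: the constants 503 and 0.0343, the sea-level pressure of 1013.25 hPa and the temperature of 288.15 K are not given in the original text.

```python
def curvature_ratio(temp_gradient_k_per_m: float,
                    pressure_hpa: float = 1013.25,
                    temp_k: float = 288.15) -> float:
    """Approximate ratio of ray curvature to Earth's curvature (assumed formula)."""
    return 503.0 * pressure_hpa / temp_k ** 2 * (0.0343 + temp_gradient_k_per_m)


def critical_gradient_c_per_100m(pressure_hpa: float = 1013.25,
                                 temp_k: float = 288.15) -> float:
    """Temperature gradient at which the ratio equals 1, in degrees C per 100 m."""
    per_metre = temp_k ** 2 / (503.0 * pressure_hpa) - 0.0343
    return per_metre * 100


if __name__ == "__main__":
    critical = critical_gradient_c_per_100m()
    print(round(critical, 1))                     # close to the 12.9 quoted in the text
    print(round(curvature_ratio(-0.0065), 2))     # typical lapse rate: well below 1
```

With a typical lapse rate of about −6.5 °C per kilometre (also an assumed value), the ratio is far below 1, which matches the statement that rays normally are not bent enough to follow Earth's curvature.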

In some situations, distant objects can be elevated or lowered, stretched or shortened with no mirage involved.

Fata Morgana

A Fata Morgana (the name comes from the Italian translation of Morgan le Fay, the fairy, shapeshifting half-sister of King Arthur) is a very complex superior mirage. It appears with alternations of compressed and stretched areas, erect images, and inverted images. A Fata Morgana is also a fast-changing mirage.

Fata Morgana mirages are most common in polar regions, especially over large sheets of ice with a uniform low temperature, but they can be observed almost anywhere. In polar regions, a Fata Morgana may be observed on cold days; in desert areas and over oceans and lakes, a Fata Morgana may be observed on hot days. For a Fata Morgana, temperature inversion has to be strong enough that light rays' curvatures within the inversion are stronger than the curvature of Earth.

The rays will bend and form arcs. An observer needs to be within an atmospheric duct to be able to see a Fata Morgana. Fata Morgana mirages may be observed from any altitude within Earth's atmosphere, including from mountaintops or airplanes.

Distortions of image and bending of light can produce spectacular effects. In his book Pursuit: The Chase and Sinking of the "Bismarck", Ludovic Kennedy describes an incident that allegedly took place below the Denmark Strait during 1941, following the sinking of the Hood. The Bismarck, while pursued by the British cruisers Norfolk and Suffolk, passed out of sight into a sea mist. Within a matter of seconds, the ship re-appeared steaming toward the British ships at high speed. In alarm the cruisers separated, anticipating an imminent attack, and observers from both ships watched in astonishment as the German battleship fluttered, grew indistinct and faded away. Radar watch during these events indicated that the Bismarck had in fact made no change to her course.

Night-time mirages

The conditions for producing a mirage can occur at night as well as during the day. Under some circumstances mirages of astronomical objects and mirages of lights from moving vehicles, aircraft, ships, buildings, etc. can be observed at night.

Mirage of astronomical objects

A mirage of an astronomical object is a naturally occurring optical phenomenon in which light rays are bent to produce distorted or multiple images of an astronomical object. Mirages can be observed for such astronomical objects as the Sun, the Moon, the planets, bright stars, and very bright comets. The most commonly observed are sunset and sunrise mirages.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#1680 2023-02-26 23:24:12

- Jai Ganesh

1583) Sternum

Summary

Sternum, also called breastbone, in the anatomy of tetrapods (four-limbed vertebrates), is an elongated bone in the centre of the chest that articulates with and provides support for the clavicles (collarbones) of the shoulder girdle and for the ribs. Its origin in evolution is unclear. A sternum appears in certain salamanders; it is present in most other tetrapods but lacking in legless lizards, snakes, and turtles (in which the shell provides needed support). In birds an enlarged keel develops, to which flight muscles are attached; the sternum of the bat is also keeled as an adaptation for flight.

In mammals the sternum is divided into three parts, from anterior to posterior: (1) the manubrium, which articulates with the clavicles and first ribs; (2) the mesosternum, often divided into a series of segments, the sternebrae, to which the remaining true ribs are attached; and (3) the posterior segment, called the xiphisternum. In humans the sternum is elongated and flat; it may be felt from the base of the neck to the pit of the abdomen. The manubrium is roughly trapezoidal, with depressions where the clavicles and the first pair of ribs join. The mesosternum, or body, consists of four sternebrae that fuse during childhood or early adulthood. The mesosternum is narrow and long, with articular facets for ribs along its sides. The xiphisternum is reduced to a small, usually cartilaginous xiphoid (“sword-shaped”) process. The sternum ossifies from several centres. The xiphoid process may ossify and fuse to the body in middle age; the joint between manubrium and mesosternum remains open until old age.

Details

The sternum or breastbone is a long flat bone located in the central part of the chest. It connects to the ribs via cartilage and forms the front of the rib cage, thus helping to protect the heart, lungs, and major blood vessels from injury. Shaped roughly like a necktie, it is one of the largest and longest flat bones of the body. Its three regions are the manubrium, the body, and the xiphoid process. The word sternum originates from Ancient Greek στέρνον (stérnon), meaning 'chest'.

Structure

The sternum is a narrow, flat bone, forming the middle portion of the front of the chest. The top of the sternum supports the clavicles (collarbones) and its edges join with the costal cartilages of the first two pairs of ribs. The inner surface of the sternum is also the attachment of the sternopericardial ligaments. Its top is also connected to the sternocleidomastoid muscle. The sternum consists of three main parts, listed from the top:

* Manubrium

* Body (gladiolus)

* Xiphoid process

In its natural position, the sternum is angled obliquely, downward and forward. It is slightly convex in front and concave behind; broad above, shaped like a "T", becoming narrowed at the point where the manubrium joins the body, after which it again widens a little to below the middle of the body, and then narrows to its lower extremity. In adults the sternum is on average about 1.7 cm longer in the male than in the female.

Manubrium

The manubrium (Latin for 'handle') is the broad upper part of the sternum. It has a quadrangular shape, narrowing from the top, which gives it four borders. The suprasternal notch (jugular notch) is located in the middle at the upper broadest part of the manubrium. This notch can be felt between the two clavicles. On either side of this notch are the right and left clavicular notches.

The manubrium joins with the body of the sternum, the clavicles and the cartilages of the first pair of ribs. The inferior border, oval and rough, is covered with a thin layer of cartilage for articulation with the body. The lateral borders are each marked above by a depression for the first costal cartilage, and below by a small facet, which, with a similar facet on the upper angle of the body, forms a notch for the reception of the costal cartilage of the second rib. Between the depression for the first costal cartilage and the demi-facet for the second is a narrow, curved edge, which slopes from above downward towards the middle. Also, the superior sternopericardial ligament attaches the pericardium to the posterior side of the manubrium.

Body

The body, or gladiolus, is the longest sternal part. It is flat and considered to have only a front and back surface. It is flat on the front, directed upward and forward, and marked by three transverse ridges which cross the bone opposite the third, fourth, and fifth articular depressions. The pectoralis major attaches to it on either side. At the junction of the third and fourth parts of the body is occasionally seen an orifice, the sternal foramen, of varying size and form. The posterior surface, slightly concave, is also marked by three transverse lines, less distinct, however, than those in front; from its lower part, on either side, the transversus thoracis takes origin.

The sternal angle is located at the point where the body joins the manubrium. The sternal angle can be felt at the point where the sternum projects farthest forward. However, in some people the sternal angle is concave or rounded. During physical examinations, the sternal angle is a useful landmark because the second rib attaches here.

Each outer border, at its superior angle, has a small facet, which with a similar facet on the manubrium, forms a cavity for the cartilage of the second rib; below this are four angular depressions which receive the cartilages of the third, fourth, fifth, and sixth ribs. The inferior angle has a small facet, which, with a corresponding one on the xiphoid process, forms a notch for the cartilage of the seventh rib. These articular depressions are separated by a series of curved interarticular intervals, which diminish in length from above downward, and correspond to the intercostal spaces. Most of the cartilages belonging to the true ribs, articulate with the sternum at the lines of junction of its primitive component segments. This is well seen in some other vertebrates, where the parts of the bone remain separated for longer.

The upper border is oval and articulates with the manubrium, at the sternal angle. The lower border is narrow, and articulates with the xiphoid process.

Xiphoid process

The pointed xiphoid process is located at the inferior end of the sternum. Improperly performed chest compressions during cardiopulmonary resuscitation can cause the xiphoid process to snap off and be driven into the liver, which can cause a fatal hemorrhage.

The sternum is composed of highly vascular tissue, covered by a thin layer of compact bone which is thickest in the manubrium between the articular facets for the clavicles. The inferior sternopericardial ligament attaches the pericardium to the posterior xiphoid process.

Joints

The cartilages of the top seven ribs join with the sternum at the sternocostal joints. The right and left clavicular notches articulate with the right and left clavicles, respectively. The costal cartilage of the second rib articulates with the sternum at the sternal angle, making it easy to locate.

The transversus thoracis muscle is innervated by one of the intercostal nerves and superiorly attaches at the posterior surface of the lower sternum. Its inferior attachment is the internal surface of costal cartilages two through six, and it works to depress the ribs.

Development

The sternum develops from two cartilaginous bars, one on the left and one on the right, connected with the cartilages of the ribs on each side. These two bars fuse together along the middle to form the cartilaginous sternum, which is ossified from six centers: one for the manubrium, four for the body, and one for the xiphoid process.

The ossification centers appear in the intervals between the articular depressions for the costal cartilages, in the following order: in the manubrium and first piece of the body, during the sixth month of fetal life; in the second and third pieces of the body, during the seventh month of fetal life; in its fourth piece, during the first year after birth; and in the xiphoid process, between the fifth and eighteenth years.

The centers make their appearance at the upper parts of the segments, and proceed gradually downward. To these may be added the occasional existence of two small episternal centers, which make their appearance one on either side of the jugular notch; they are probably vestiges of the episternal bone of the monotremata and lizards.

Occasionally some of the segments are formed from more than one center, the number and position of which vary. Thus, the first piece may have two, three, or even six centers.

When two are present, they are generally situated one above the other, the upper being the larger; the second piece has seldom more than one; the third, fourth, and fifth pieces are often formed from two centers placed laterally, the irregular union of which explains the rare occurrence of the sternal foramen, or of the vertical fissure which occasionally intersects this part of the bone constituting the malformation known as fissura sterni; these conditions are further explained by the manner in which the cartilaginous sternum is formed.

More rarely still the upper end of the sternum may be divided by a fissure. Union of the various centers of the body begins about puberty, and proceeds from below upward; by the age of 25 they are all united.

The xiphoid process may become joined to the body before the age of thirty, but this occurs more frequently after forty; on the other hand, it sometimes remains ununited in old age. In advanced life the manubrium is occasionally joined to the body by bone. When this takes place, however, the bony tissue is generally only superficial, the central portion of the intervening cartilage remaining unossified.

In early life, the sternum's body is divided into four segments, not three, called sternebrae (singular: sternebra).

Variations

In 2.5–13.5% of the population, a foramen known as the sternal foramen may be present in the lower third of the sternal body. In extremely rare cases, multiple foramina may be observed. Fusion of the manubriosternal joint also occurs in around 5% of the population. Small ossicles known as episternal ossicles may also be present posterior to the superior end of the manubrium. Another variant, called the suprasternal tubercle, is formed when the episternal ossicles fuse with the manubrium.

Clinical significance

Because the sternum contains bone marrow, it is sometimes used as a site for bone marrow biopsy. In particular, patients with a high BMI (obese or grossly overweight) may present with excess tissue that makes access to traditional marrow biopsy sites such as the pelvis difficult.

Sternal opening

A sternal foramen is a somewhat rare congenital disorder of the sternum, sometimes referred to as an anatomical variation. It is a single round hole in the sternum that is present from birth and is usually off-centre to the right or left, commonly forming in the 2nd, 3rd, and 4th segments of the breastbone body. Congenital sternal foramina can often be mistaken for bullet holes. They are usually without symptoms but can be problematic if acupuncture in the area is intended.

Fractures

Fractures of the sternum are rather uncommon. They may result from trauma, such as when a driver's chest is forced into the steering column of a car in a car accident. A fracture of the sternum is usually a comminuted fracture. The most common site of sternal fractures is at the sternal angle. Some studies reveal that repeated punches or continual beatings, sometimes called "breastbone punches", to the sternum area have also caused fractured sternums. Those are known to have occurred in contact sports such as hockey and football. Sternal fractures are frequently associated with underlying injuries such as pulmonary contusions, or bruised lung tissue.

Dislocation

A manubriosternal dislocation is rare and usually caused by severe trauma. It may also result from minor trauma where there is a precondition of arthritis.

Sternotomy

The breastbone is sometimes cut open (a median sternotomy) to gain access to the thoracic contents when performing cardiothoracic surgery.

Resection

The sternum can be totally removed (resected) as part of a radical surgery, usually to surgically treat a malignancy, either with or without a mediastinal lymphadenectomy (Current Procedural Terminology codes # 21632 and # 21630, respectively).

Bifid sternum or sternal cleft

A bifid sternum is an extremely rare congenital abnormality caused by failure of the two halves of the sternum to fuse. This condition results in a sternal cleft, which can be observed at birth and may cause no symptoms.

Other animals

The sternum, in vertebrate anatomy, is a flat bone that lies in the middle front part of the rib cage. It is endochondral in origin. It probably first evolved in early tetrapods as an extension of the pectoral girdle; it is not found in fish. In amphibians and reptiles it is typically a shield-shaped structure, often composed entirely of cartilage. It is absent in both turtles and snakes. In birds it is a relatively large bone and typically bears an enormous projecting keel to which the flight muscles are attached. Only in mammals does the sternum take on the elongated, segmented form seen in humans.

Arthropods

In arachnids, the sternum is the ventral (lower) portion of the cephalothorax. It consists of a single sclerite situated between the coxa, opposite the carapace.

#1681 2023-02-27 16:16:34

- Jai Ganesh

1584) Winter

Summary

Winter is the coldest season of the year, between autumn and spring; the name comes from an old Germanic word that means “time of water” and refers to the rain and snow of winter in middle and high latitudes. In the Northern Hemisphere it is commonly regarded as extending from the winter solstice (year’s shortest day), December 21 or 22, to the vernal equinox (day and night equal in length), March 20 or 21, and in the Southern Hemisphere from June 21 or 22 to September 22 or 23. The low temperatures associated with winter occur only in middle and high latitudes; in equatorial regions, temperatures are almost uniformly high throughout the year. For physical causes of the seasons, see season.

The concept of winter in European languages is associated with the season of dormancy, particularly in relation to crops; some plants die, leaving their seeds, and others merely cease growth until spring. Many animals also become dormant, especially those that hibernate; numerous insects die.

Details

Winter is the coldest season of the year in polar and temperate climates. It occurs after autumn and before spring. The tilt of Earth's axis causes seasons; winter occurs when a hemisphere is oriented away from the Sun. Different cultures define different dates as the start of winter, and some use a definition based on weather.

When it is winter in the Northern Hemisphere, it is summer in the Southern Hemisphere, and vice versa. In many regions, winter brings snow and freezing temperatures. The moment of winter solstice is when the Sun's elevation with respect to the North or South Pole is at its most negative value; that is, the Sun is at its farthest below the horizon as measured from the pole. The day on which this occurs has the shortest day and the longest night, with day length increasing and night length decreasing as the season progresses after the solstice.

The earliest sunset and latest sunrise dates outside the polar regions differ from the date of the winter solstice and depend on latitude. They differ due to the variation in the solar day throughout the year caused by the Earth's elliptical orbit.

Cause

The tilt of the Earth's axis relative to its orbital plane plays a large role in the formation of weather. The Earth is tilted at an angle of 23.44° to the plane of its orbit, causing different latitudes to directly face the Sun as the Earth moves through its orbit. This variation brings about seasons. When it is winter in the Northern Hemisphere, the Southern Hemisphere faces the Sun more directly and thus experiences warmer temperatures than the Northern Hemisphere. Conversely, winter in the Southern Hemisphere occurs when the Northern Hemisphere is tilted more toward the Sun. From the perspective of an observer on the Earth, the winter Sun has a lower maximum altitude in the sky than the summer Sun.

During winter in either hemisphere, the lower altitude of the Sun causes the sunlight to hit the Earth at an oblique angle. Thus a lower amount of solar radiation strikes the Earth per unit of surface area. Furthermore, the light must travel a longer distance through the atmosphere, allowing the atmosphere to dissipate more heat. Compared with these effects, the effect of the changes in the distance of the Earth from the Sun (due to the Earth's elliptical orbit) is negligible.
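The effect of the lower winter Sun can be made concrete with a small calculation. The sketch below uses the 23.44° tilt from the text and the standard noon-altitude relation (90° minus the absolute difference between latitude and solar declination). The function names are illustrative assumptions, and the flux estimate ignores the atmosphere entirely, so it captures only the geometric spreading of sunlight over a horizontal surface.

```python
import math

AXIAL_TILT_DEG = 23.44  # Earth's axial tilt, as given in the text


def noon_sun_altitude(latitude_deg: float, declination_deg: float) -> float:
    """Altitude of the Sun above the horizon at local solar noon, in degrees."""
    return 90.0 - abs(latitude_deg - declination_deg)


def relative_flux(altitude_deg: float) -> float:
    """Direct-beam power per unit of horizontal area, as a fraction of overhead Sun."""
    return max(0.0, math.sin(math.radians(altitude_deg)))


if __name__ == "__main__":
    latitude = 49.0  # roughly the latitude of Winnipeg and Vancouver
    winter = noon_sun_altitude(latitude, -AXIAL_TILT_DEG)  # December solstice
    summer = noon_sun_altitude(latitude, +AXIAL_TILT_DEG)  # June solstice
    print(round(winter, 2), round(summer, 2))
    print(round(relative_flux(winter) / relative_flux(summer), 2))
```

At 49°N the noon Sun stands about 17.6° above the horizon at the December solstice and about 64.4° at the June solstice, so the same beam is spread over a much larger area of ground in winter.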

The manifestation of the meteorological winter (freezing temperatures) in the northerly snow–prone latitudes is highly variable depending on elevation, position versus marine winds and the amount of precipitation. For instance, within Canada (a country of cold winters), Winnipeg on the Great Plains, a long way from the ocean, has a January high of −11.3 °C (11.7 °F) and a low of −21.4 °C (−6.5 °F).

In comparison, Vancouver on the west coast with a marine influence from moderating Pacific winds has a January low of 1.4 °C (34.5 °F) with days well above freezing at 6.9 °C (44.4 °F). Both places are at 49°N latitude, and in the same western half of the continent. A similar but less extreme effect is found in Europe: in spite of their northerly latitude, the British Isles have not a single non-mountain weather station with a below-freezing mean January temperature.

Additional Information

Winter is one of the four seasons and it is the coldest time of the year. The days are shorter, and the nights are longer. Winter comes after autumn and before spring.

Winter begins at the winter solstice. In the Northern Hemisphere the winter solstice is usually December 21 or December 22. In the Southern Hemisphere the winter solstice is usually June 21 or June 22.

Some animals hibernate during this season. In temperate climates there are no leaves on deciduous trees. People wear warm clothing, and eat food that was grown earlier. Many places have snow in winter, and some people use sleds or skis. Holidays in winter for many countries include Christmas and New Year's Day.

#1682 2023-02-28 16:25:58

- Jai Ganesh

1585) Summer

Summary

Summer is the warmest season of the year, between spring and autumn. In the Northern Hemisphere, it is usually defined as the period between the summer solstice (year’s longest day), June 21 or 22, and the autumnal equinox (day and night equal in length), September 22 or 23; and in the Southern Hemisphere, as the period between December 22 or 23 and March 20 or 21. The temperature contrast between summer and the other seasons exists only in middle and high latitudes; temperatures in the equatorial regions generally vary little from month to month. For physical causes of the seasons, see season.

The concept of summer in European languages is associated with growth and maturity, especially that of cultivated plants, and indeed summer is the season of greatest plant growth in regions with sufficient summer rainfall. Festivals and rites have been used in many cultures to celebrate summer in recognition of its importance in food production.

A period of exceptionally hot weather, often with high humidity, during the summer is called a heat wave. Such an occurrence in the temperate regions of the Northern Hemisphere in the latter part of summer is sometimes called the dog days.

Details

Summer is the hottest of the four temperate seasons, occurring after spring and before autumn. At or centred on the summer solstice, daylight hours are longest and darkness hours are shortest, with day length decreasing as the season progresses after the solstice. The earliest sunrises and latest sunsets also occur near the date of the solstice. The date of the beginning of summer varies according to climate, tradition, and culture. When it is summer in the Northern Hemisphere, it is winter in the Southern Hemisphere, and vice versa.

Timing

From an astronomical view, the equinoxes and solstices would be the middle of the respective seasons, but sometimes astronomical summer is defined as starting at the solstice, the time of maximal insolation, often identified with the 21st day of June or December. By solar reckoning, summer instead starts on May Day and the summer solstice is Midsummer. A variable seasonal lag means that the meteorological centre of the season, which is based on average temperature patterns, occurs several weeks after the time of maximal insolation.

The meteorological convention is to define summer as comprising the months of June, July, and August in the northern hemisphere and the months of December, January, and February in the southern hemisphere. Under meteorological definitions, all seasons are arbitrarily set to start at the beginning of a calendar month and end at the end of a month. This meteorological definition of summer also aligns with the commonly viewed notion of summer as the season with the longest (and warmest) days of the year, in which daylight predominates.
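The meteorological convention maps whole calendar months to seasons, which makes it easy to express as a lookup. The following Python sketch is one possible encoding; the function name and the "north"/"south" hemisphere labels are assumptions chosen for illustration.

```python
# Meteorological seasons for the Northern Hemisphere, keyed by month number.
NORTHERN_SEASONS = {
    12: "winter", 1: "winter", 2: "winter",
    3: "spring", 4: "spring", 5: "spring",
    6: "summer", 7: "summer", 8: "summer",
    9: "autumn", 10: "autumn", 11: "autumn",
}

# Seasons are reversed between the hemispheres.
OPPOSITE = {"winter": "summer", "summer": "winter",
            "spring": "autumn", "autumn": "spring"}


def meteorological_season(month: int, hemisphere: str = "north") -> str:
    """Return the meteorological season for a month (1-12) and hemisphere."""
    if month not in NORTHERN_SEASONS:
        raise ValueError("month must be in 1..12")
    if hemisphere not in ("north", "south"):
        raise ValueError("hemisphere must be 'north' or 'south'")
    season = NORTHERN_SEASONS[month]
    return season if hemisphere == "north" else OPPOSITE[season]


if __name__ == "__main__":
    print(meteorological_season(7))            # summer
    print(meteorological_season(1, "south"))   # summer
```

Because the boundaries fall on month ends, no date arithmetic is needed, unlike the astronomical definition, whose solstice and equinox dates shift slightly from year to year.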

The meteorological reckoning of seasons is used in countries including Australia, New Zealand, Austria, Denmark, Russia and Japan. It is also used by many people in the United Kingdom and Canada. In Ireland, the summer months according to the national meteorological service, Met Éireann, are June, July and August. By the Irish calendar, summer begins on 1 May (Beltane) and ends on 31 July (Lughnasadh).

Days continue to lengthen from equinox to solstice, and summer days progressively shorten after the solstice, so meteorological summer encompasses the build-up to the longest day and a diminishing thereafter, with summer having many more hours of daylight than spring. Reckoning by hours of daylight alone, the summer solstice marks the midpoint, not the beginning, of the season. Midsummer takes place over the shortest night of the year, which is the summer solstice, or on a nearby date that varies with tradition.

Where a seasonal lag of half a season or more is common, reckoning based on astronomical markers is shifted half a season. By this method, in North America, summer is the period from the summer solstice (usually 20 or 21 June in the Northern Hemisphere) to the autumn equinox.

Reckoning by cultural festivals, the summer season in the United States is traditionally regarded as beginning on Memorial Day weekend (the last weekend in May) and ending on Labor Day (the first Monday in September), more closely in line with the meteorological definition for the parts of the country that have four-season weather. The similar Canadian tradition starts summer on Victoria Day one week prior (although summer conditions vary widely across Canada's expansive territory) and ends, as in the United States, on Labour Day.

In some Southern Hemisphere countries such as Brazil, Argentina, South Africa, Australia and New Zealand, summer is associated with the Christmas and New Year holidays. Many families take extended holidays for two or three weeks or longer during summer.

In Australia and New Zealand, summer begins on 1 December and ends on 28 February (29 February in leap years).

In Chinese astronomy, summer starts on or around 5 May, with the jiéqì (solar term) known as lìxià, i.e. "establishment of summer", and it ends on or around 6 August.

In southern and southeast Asia, where the monsoon occurs, summer is more generally defined as lasting from March to June, the warmest time of the year, and ending with the onset of the monsoon rains.

Because the temperature lag is shorter in the oceanic temperate southern hemisphere, most countries in this region use the meteorological definition with summer starting on 1 December and ending on the last day of February.

Additional Information

Summer is one of the four seasons. It is the hottest season of the year. In some places, summer is the wettest season (with the most rain), and in other places, it is a dry season. Four seasons are found in areas which are not too hot or too cold. Summer happens to the north and south sides of the Earth at opposite times of the year. In the north part of the world, summer takes place between the months of June and September, and in the south part of the world, it takes place between December and March. This is because when the north part of the Earth points towards the Sun, the south part points away.

Many people in rich countries travel in summer, to seaside resorts, beaches, camps or picnics. In some countries, they celebrate things in the summer as well as enjoying cool drinks. Other countries get snow in the summer just like winter.

Summer is usually known as the hottest season.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#1683 2023-03-01 02:03:27

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,931

Re: Miscellany

1586) Spring (season)

Summary

Spring, in climatology, is the season of the year between winter and summer during which temperatures gradually rise. It is generally defined in the Northern Hemisphere as extending from the vernal equinox (day and night equal in length), March 20 or 21, to the summer solstice (year’s longest day), June 21 or 22, and in the Southern Hemisphere from September 22 or 23 to December 22 or 23. The spring temperature transition from winter cold to summer heat occurs only in middle and high latitudes; near the Equator, temperatures vary little during the year. Spring is very short in the polar regions.

In many cultures spring has been celebrated with rites and festivals revolving around its importance in food production. In European languages, the concept of spring is associated with the sowing of crops. During this time of the year all plants, including cultivated ones, begin growth anew after the dormancy of winter. Animals are greatly affected, too: they come out of their winter dormancy or hibernation and begin their nesting and reproducing activities, and birds migrate poleward in response to the warmer temperatures.

Details

Spring, also known as springtime, is one of the four temperate seasons, succeeding winter and preceding summer. There are various technical definitions of spring, but local usage of the term varies according to local climate, cultures and customs. When it is spring in the Northern Hemisphere, it is autumn in the Southern Hemisphere and vice versa. At the spring (or vernal) equinox, days and nights are approximately twelve hours long, with daytime length increasing and nighttime length decreasing as the season progresses until the Summer Solstice in June (Northern Hemisphere) and December (Southern Hemisphere).

Spring and "springtime" refer to the season, and also to ideas of rebirth, rejuvenation, renewal, resurrection and regrowth. Subtropical and tropical areas have climates better described in terms of other seasons, e.g. dry or wet, monsoonal or cyclonic. Cultures may have local names for seasons which have little equivalence to the terms originating in Europe.

Meteorological reckoning

Meteorologists generally define four seasons in many climatic areas: spring, summer, autumn (fall), and winter. These are determined by the values of their average temperatures on a monthly basis, with each season lasting three months. The three warmest months are by definition summer, the three coldest months are winter, and the intervening gaps are spring and autumn. Meteorological spring can, therefore, start on different dates in different regions.
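The rule above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: "the three warmest months" is read as the warmest run of three consecutive months, the other seasons are taken as the fixed three-month blocks that follow it, and the function name and sample temperatures are made up for the example.

```python
from typing import Dict, List, Sequence

MONTHS = ["Jan", "Feb", "Mar", "Apr", "May", "Jun",
          "Jul", "Aug", "Sep", "Oct", "Nov", "Dec"]


def meteorological_seasons(monthly_means: Sequence[float]) -> Dict[str, List[str]]:
    """Split the year into four three-month seasons from 12 monthly mean temperatures."""
    if len(monthly_means) != 12:
        raise ValueError("expected 12 monthly mean temperatures")

    # Assumption: summer is the warmest run of three consecutive months,
    # with the run allowed to wrap around the end of the year.
    def window_sum(start: int) -> float:
        return sum(monthly_means[(start + k) % 12] for k in range(3))

    summer_start = max(range(12), key=window_sum)

    # Assumption: the remaining seasons follow summer in fixed three-month
    # blocks, so winter is simply the block opposite summer.
    seasons: Dict[str, List[str]] = {}
    for i, name in enumerate(["summer", "autumn", "winter", "spring"]):
        start = (summer_start + 3 * i) % 12
        seasons[name] = [MONTHS[(start + k) % 12] for k in range(3)]
    return seasons


# Hypothetical monthly means (°C) for a northern and a southern temperate site.
northern = [0, 1, 5, 10, 15, 19, 21, 20, 16, 10, 5, 1]
southern = [21, 20, 16, 10, 5, 1, 0, 1, 5, 10, 15, 19]

print(meteorological_seasons(northern)["spring"])  # ['Mar', 'Apr', 'May']
print(meteorological_seasons(southern)["spring"])  # ['Sep', 'Oct', 'Nov']
```

With these sample values the sketch reproduces the March to May and September to November spring months quoted for northern and southern countries.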

In the US and UK, spring months are March, April, and May.

In Australia and New Zealand, spring begins on 1 September and ends on 30 November.

In Ireland, following the Irish calendar, spring is often defined as February, March, and April.

In Sweden, meteorologists define the beginning of spring as the first occasion on which the 24-hour average temperature exceeds zero degrees Celsius for seven consecutive days; the date therefore varies with latitude and elevation.

In Brazil, spring months are September, October, and November.
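The Swedish rule mentioned above is a small run-detection problem, which the following Python sketch tries to capture. It is an illustration only: the function name and sample data are hypothetical, and reporting the first day of the qualifying seven-day run as the onset is an assumption, since the text does not say which day of the run counts.

```python
from typing import Optional, Sequence


def spring_onset_index(daily_means_c: Sequence[float],
                       run_length: int = 7) -> Optional[int]:
    """Return the index of the first day of the first run of `run_length`
    consecutive days whose mean temperature is above 0 °C, or None."""
    run = 0
    for i, temp in enumerate(daily_means_c):
        # "Exceeds zero" is treated as strictly greater than 0 °C.
        run = run + 1 if temp > 0.0 else 0
        if run == run_length:
            # Assumption: the onset is the first day of the qualifying run.
            return i - run_length + 1
    return None


# Hypothetical daily mean temperatures (°C); the cold day at index 3 resets the run.
temps = [-3, 1, 2, -1, 0.5, 1, 2, 3, 1, 4, 2, 5]
print(spring_onset_index(temps))  # 4
```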

Astronomical and solar reckoning

In the Northern Hemisphere (e.g. Germany, the United States, Canada, and the UK), the astronomical vernal equinox (varying between 19 and 21 March) can be taken to mark the first day of spring, with the summer solstice (around 21 June) marking the first day of summer. By solar reckoning, spring is held to run from 1 February until the first day of summer on May Day, with the summer solstice marked as Midsummer rather than as the beginning of summer, as it is in astronomical reckoning.

In Persian culture the first day of spring is the first day of the first month (called Farvardin) which begins on 20 or 21 March.

In the traditional Chinese calendar, the "spring" season consists of the days between Lichun (3–5 February) and Lixia (5–7 May), with Chunfen (20–22 March) as its midpoint. Similarly, according to the Celtic tradition, which is based solely on daylight and the strength of the noon sun, spring begins in early February (near Imbolc or Candlemas) and continues until early May (Beltane).

The spring season in India falls culturally in the months of March and April, with an average temperature of approximately 32 °C. Some people in India, especially in the state of Karnataka, celebrate their new year, Ugadi, in spring.


#1684 2023-03-02 01:37:22

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,931

Re: Miscellany

1587) Stainless steel

Summary

Stainless steel is any one of a family of alloy steels usually containing 10 to 30 percent chromium. In conjunction with low carbon content, chromium imparts remarkable resistance to corrosion and heat. Other elements, such as nickel, molybdenum, titanium, aluminum, niobium, copper, nitrogen, sulfur, phosphorus, or selenium, may be added to increase corrosion resistance to specific environments, enhance oxidation resistance, and impart special characteristics.

Most stainless steels are first melted in electric-arc or basic oxygen furnaces and subsequently refined in another steelmaking vessel, mainly to lower the carbon content. In the argon-oxygen decarburization process, a mixture of oxygen and argon gas is injected into the liquid steel. By varying the ratio of oxygen and argon, it is possible to remove carbon to controlled levels by oxidizing it to carbon monoxide without also oxidizing and losing expensive chromium. Thus, cheaper raw materials, such as high-carbon ferrochromium, may be used in the initial melting operation.

There are more than 100 grades of stainless steel. The majority are classified into five major groups in the family of stainless steels: austenitic, ferritic, martensitic, duplex, and precipitation-hardening.

Austenitic steels, which contain 16 to 26 percent chromium and up to 35 percent nickel, usually have the highest corrosion resistance. They are not hardenable by heat treatment and are nonmagnetic. The most common type is the 18/8, or 304, grade, which contains 18 percent chromium and 8 percent nickel. Typical applications include aircraft and the dairy and food-processing industries.

Standard ferritic steels contain 10.5 to 27 percent chromium and are nickel-free; because of their low carbon content (less than 0.2 percent), they are not hardenable by heat treatment and have less critical anticorrosion applications, such as architectural and auto trim.

Martensitic steels typically contain 11.5 to 18 percent chromium and up to 1.2 percent carbon with nickel sometimes added. They are hardenable by heat treatment, have modest corrosion resistance, and are employed in cutlery, surgical instruments, wrenches, and turbines.

Duplex stainless steels are a combination of austenitic and ferritic stainless steels in equal amounts; they contain 21 to 27 percent chromium, 1.35 to 8 percent nickel, 0.05 to 3 percent copper, and 0.05 to 5 percent molybdenum. Duplex stainless steels are stronger and more resistant to corrosion than austenitic and ferritic stainless steels, which makes them useful in storage-tank construction, chemical processing, and containers for transporting chemicals.

Precipitation-hardening stainless steel is characterized by its strength, which stems from the addition of aluminum, copper, and niobium to the alloy in amounts less than 0.5 percent of the alloy’s total mass. It is comparable to austenitic stainless steel with respect to its corrosion resistance, and it contains 15 to 17.5 percent chromium, 3 to 5 percent nickel, and 3 to 5 percent copper. Precipitation-hardening stainless steel is used in the construction of long shafts.

Details

Stainless steel is an alloy of iron that is resistant to rusting and corrosion. It contains at least 11% chromium and may contain elements such as carbon, other nonmetals and metals to obtain other desired properties. Stainless steel's resistance to corrosion results from the chromium, which forms a passive film that can protect the material and self-heal in the presence of oxygen.

The alloy's properties, such as luster and resistance to corrosion, are useful in many applications. Stainless steel can be rolled into sheets, plates, bars, wire, and tubing. These can be used in cookware, cutlery, surgical instruments, major appliances, vehicles, construction material in large buildings, industrial equipment (e.g., in paper mills, chemical plants, water treatment), and storage tanks and tankers for chemicals and food products.

The biological cleanability of stainless steel is superior to both aluminium and copper, and comparable to glass. Its cleanability, strength, and corrosion resistance have prompted the use of stainless steel in pharmaceutical and food processing plants.

Different types of stainless steel are labeled with an AISI three-digit number. The ISO 15510 standard lists the chemical compositions of the stainless steels specified in existing ISO, ASTM, EN, JIS, and GB standards in a useful interchange table.

Properties:

Conductivity

Like steel, stainless steels are relatively poor conductors of electricity, with significantly lower electrical conductivities than copper. In particular, the electrical contact resistance (ECR) of stainless steel arises as a result of the dense protective oxide layer and limits its functionality in applications as electrical connectors. Copper alloys and nickel-coated connectors tend to exhibit lower ECR values, and are preferred materials for such applications. Nevertheless, stainless steel connectors are employed in situations where ECR is a less critical design criterion and corrosion resistance is required, for example in high temperatures and oxidizing environments.

Melting point

As with all other alloys, the melting point of stainless steel is expressed as a range of temperatures, not a single temperature. This range runs from 1,400 to 1,530 °C (2,550 to 2,790 °F), depending on the specific composition of the alloy in question.
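As a quick arithmetic check, the Fahrenheit figures above follow from the standard conversion F = C × 9/5 + 32. The Python sketch below uses a made-up helper name, and the rounding to the nearest 10 °F is an assumption about how the quoted figures were derived.

```python
def celsius_to_fahrenheit(temp_c: float) -> float:
    """Convert a temperature from degrees Celsius to degrees Fahrenheit."""
    return temp_c * 9 / 5 + 32


# Melting range quoted in the text, in °C.
for temp_c in (1400, 1530):
    exact_f = celsius_to_fahrenheit(temp_c)
    # Assumption: the quoted values are rounded to the nearest 10 °F.
    print(temp_c, exact_f, round(exact_f, -1))
# 1400 2552.0 2550.0
# 1530 2786.0 2790.0
```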

Magnetism

Martensitic, duplex and ferritic stainless steels are magnetic, while austenitic stainless steel is usually non-magnetic. Ferritic steel owes its magnetism to its body-centered cubic crystal structure, in which iron atoms are arranged in cubes (with one iron atom at each corner) and an additional iron atom in the center. This central iron atom is responsible for ferritic steel's magnetic properties. This arrangement also limits the amount of carbon the steel can absorb to around 0.025%. Grades with low coercive field have been developed for electro-valves used in household appliances and for injection systems in internal combustion engines. Some applications, such as magnetic resonance imaging, require non-magnetic materials. Austenitic stainless steels, which are usually non-magnetic, can be made slightly magnetic through work hardening. Sometimes, if austenitic steel is bent or cut, magnetism occurs along the edge of the stainless steel because the crystal structure rearranges itself.

Corrosion

The addition of nitrogen also improves resistance to pitting corrosion and increases mechanical strength. Thus, there are numerous grades of stainless steel with varying chromium and molybdenum contents to suit the environment the alloy must endure. Corrosion resistance can be increased further by the following means:

* increasing chromium content to more than 11%

* adding nickel to at least 8%

* adding molybdenum (which also improves resistance to pitting corrosion)

Wear

Galling, sometimes called cold welding, is a form of severe adhesive wear, which can occur when two metal surfaces are in relative motion to each other and under heavy pressure. Austenitic stainless steel fasteners are particularly susceptible to thread galling, though other alloys that self-generate a protective oxide surface film, such as aluminium and titanium, are also susceptible. Under high contact-force sliding, this oxide can be deformed, broken, and removed from parts of the component, exposing the bare reactive metal. When the two surfaces are of the same material, these exposed surfaces can easily fuse. Separation of the two surfaces can result in surface tearing and even complete seizure of metal components or fasteners. Galling can be mitigated by the use of dissimilar materials (bronze against stainless steel) or using different stainless steels (martensitic against austenitic). Additionally, threaded joints may be lubricated to provide a film between the two parts and prevent galling. Nitronic 60, made by selective alloying with manganese, silicon, and nitrogen, has demonstrated a reduced tendency to gall.

Density

The density of stainless steel ranges from 7,500 kg/m³ to 8,000 kg/m³, depending on the alloy.
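To give a feel for what this density range means in practice, here is a small Python sketch that estimates the mass of a stainless steel part from its volume. The function name and the sheet dimensions are hypothetical and chosen only for illustration.

```python
from typing import Tuple

# Density range quoted in the text, in kg/m^3.
DENSITY_RANGE_KG_M3 = (7500.0, 8000.0)


def mass_range_kg(volume_m3: float) -> Tuple[float, float]:
    """Return the (lowest, highest) plausible mass in kg for a given volume."""
    low, high = DENSITY_RANGE_KG_M3
    return volume_m3 * low, volume_m3 * high


# Hypothetical sheet: 1 m x 2 m, 3 mm thick.
volume = 1.0 * 2.0 * 0.003  # cubic metres
low_kg, high_kg = mass_range_kg(volume)
print(f"{low_kg:.1f} kg to {high_kg:.1f} kg")  # 45.0 kg to 48.0 kg
```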
