Math Is Fun Forum


#501 2019-09-13 00:24:21

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,200

Re: Miscellany

404) Tea

Tea, beverage produced by steeping in freshly boiled water the young leaves and leaf buds of the tea plant, Camellia sinensis. Two principal varieties are used, the small-leaved China plant (C. sinensis sinensis) and the large-leaved Assam plant (C. sinensis assamica). Hybrids of these two varieties are also grown. The leaves may be fermented or left unfermented.

History Of The Tea Trade

According to legend tea has been known in China since about 2700 BCE. For millennia it was a medicinal beverage obtained by boiling fresh leaves in water, but around the 3rd century CE it became a daily drink, and tea cultivation and processing began. The first published account of methods of planting, processing, and drinking came in 350 CE. Around 800 the first seeds were brought to Japan, where cultivation became established by the 13th century. Chinese from Amoy brought tea cultivation to the island of Formosa (Taiwan) in 1810. Tea cultivation in Java began under the Dutch, who brought seeds from Japan in 1826 and seeds, workers, and implements from China in 1833.

In 1824 tea plants were discovered in the hills along the frontier between Burma and the Indian state of Assam. The British introduced tea culture into India in 1836 and into Ceylon (Sri Lanka) in 1867. At first they used seeds from China, but later seeds from the Assam plant were used.

The Dutch East India Company carried the first consignment of China tea to Europe in 1610. In 1669 the English East India Company brought China tea from ports in Java to the London market. Later, teas grown on British estates in India and Ceylon reached Mincing Lane, the centre of the tea trade in London. By the late 19th and early 20th centuries, tea growing had spread to Russian Georgia, Sumatra, and Iran and extended to non-Asian countries such as Natal, Malaŵi, Uganda, Kenya, Congo, Tanzania, and Mozambique in Africa, to Argentina, Brazil, and Peru in South America, and to Queensland in Australia.

Classification Of Teas

Teas are classified according to region of origin, as in China, Ceylon, Japanese, Indonesian, and African tea, or by smaller district, as in Darjeeling, Assam, and Nilgiris from India, Uva and Dimbula from Sri Lanka, Keemun from Chi-men in China’s Anhwei Province, and Enshu from Japan.

Teas are also classified by the size of the processed leaf. Traditional operations result in larger leafy grades and smaller broken grades. The leafy grades are flowery pekoe (FP), orange pekoe (OP), pekoe (P), pekoe souchong (PS), and souchong (S). The broken grades are: broken orange pekoe (BOP), broken pekoe (BP), BOP fanning, fannings, and dust. Broken grades usually have substantial contributions from the more tender shoots, while leafy grades come mainly from the tougher and maturer leaves. In modern commercial grading, 95 to 100 percent of production belongs to broken grades, whereas earlier a substantial quantity of leafy grades was produced. This shift has been caused by an increased demand for teas of smaller particle size, which produce a quick, strong brew.

The most important classification is by the manufacturing process, resulting in the three categories of fermented (black), unfermented (green), and semifermented (oolong or pouchong). Green tea is usually produced from the China plant and is grown mostly in Japan, China, and to some extent Malaysia and Indonesia. The infused leaf is green, and the liquor is mild, pale green or lemon-yellow, and slightly bitter. Black tea, by far the most common type produced, is best made from Assam or hybrid plants. The infused leaf is bright red or copper coloured, and the liquor is bright red and slightly astringent but not bitter, bearing the characteristic aroma of tea. Oolong and pouchong teas are produced mostly in southern China and Taiwan from a special variety of the China plant. The liquor is pale or yellow in colour, as in green tea, and has a unique malty, or smoky, flavour.

Processing The Leaf

In tea manufacture, the leaf goes through some or all of the stages of withering, rolling, fermentation, and drying. The process has a twofold purpose: (1) to dry the leaf and (2) to allow the chemical constituents of the leaf to produce the quality peculiar to each type of tea.

The best-known constituent of tea is caffeine, which gives the beverage its stimulating character but contributes only a little to colour, flavour, and aroma. About 4 percent of the solids in fresh leaf is caffeine, and one teacup of the beverage contains 60 to 90 milligrams of caffeine. The most important chemicals in tea are the tannins, or polyphenols, which are colourless, bitter-tasting substances that give the drink its astringency. When acted upon by an enzyme called polyphenol oxidase, polyphenols acquire a reddish colour and form the flavouring compounds of the beverage. Certain volatile oils contribute to the aroma of tea, and also contributing to beverage quality are various sugars and amino acids.

Only black tea goes through all stages of the manufacturing process. Green tea and oolong acquire their qualities through variations in the crucial fermentation stage.

Black tea

Withering

Plucking the leaf initiates the withering stage, in which the leaf becomes flaccid and loses water until, from a fresh moisture content of 70 to 80 percent by weight, it arrives at a withered content of 55 to 70 percent, depending upon the type of processing.
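The moisture figures above can be turned into the weight of water removed during withering. The sketch below is only an illustration: it assumes the dry matter of the leaf stays constant while water evaporates, and the function name and sample values are hypothetical.

```python
def water_lost_per_kg_fresh(fresh_moisture: float, withered_moisture: float) -> float:
    """Return kg of water removed per kg of fresh leaf.

    Moisture contents are fractions of total weight (wet basis).
    Assumption: the dry matter of the leaf does not change during withering.
    """
    if not (0 <= withered_moisture <= fresh_moisture < 1):
        raise ValueError("expected 0 <= withered <= fresh < 1")
    dry_matter = 1.0 - fresh_moisture                        # kg dry matter in 1 kg fresh leaf
    withered_mass = dry_matter / (1.0 - withered_moisture)   # total mass after withering
    return 1.0 - withered_mass


# Leaf at 75 percent moisture withered to 60 percent moisture.
print(round(water_lost_per_kg_fresh(0.75, 0.60), 3))  # 0.375
```

Under that assumption, a drop from 75 to 60 percent moisture means the leaf loses more than a third of its fresh weight, which is much more than the 15-point difference in the percentages suggests.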

In the traditional process, fresh leaf is spread by hand in thin layers onto trays or sections of coarse fabric called tats. It is then allowed to wither for 18 to 20 hours, depending upon several factors that include the temperature and humidity of the air and the size and moisture content of the leaf. Withering in the open air has been replaced by various mechanized systems. In trough withering, air is forced through a thick layer of leaf on a mesh in a trough. In drum withering, rotating, perforated drums are used instead of troughs, and in tunnel withering, leaf is spread on tats carried by mobile trolleys and is subjected to hot-air blasts in a tunnel. Continuous withering machines move the leaf on conveyor belts and subject it to hot air in an enclosed chamber, discharging withered leaf while fresh leaf is simultaneously loaded.

Mechanized systems greatly reduce withering time, but they can also lower the quality of the final product by reducing the time for chemical withering, during which proteins and carbohydrates break down into simpler amino acids and sugars, and the concentration of caffeine and polyphenols increases.

Rolling

At this stage, the withered leaf is distorted, acquiring the distinctive twist of the finished tea leaf, and leaf cells are burst, resulting in the mixing of enzymes with polyphenols.

The traditional method is to roll bunches of leaves between the hands, or by hand on a table, until the leaf is twisted, evenly coated with juices, and finally broken into pieces. Rolling machines consist of a circular table fitted in the centre with a cone and across the surface with slats called battens. A jacket, or bottomless circular box with a pressure cap, stands atop the table. Table and jacket rotate eccentrically in opposite directions, and the leaf placed in the jacket is twisted and rolled over the cone and battens in a fashion similar to hand rolling. Lumps of rolled leaf are then broken up and sifted. The smaller leaf passing through the sieve—called the fines—is transferred to the fermentation room, and the remaining coarse leaf is rolled again.

In many countries, rolling the leaf has been abandoned in favour of distortion by a variety of machines. In the Legg cutter (actually a tobacco-cutting machine), the leaf is forced through an aperture and cut into strips. The crushing, tearing, and curling (CTC) machine consists of two serrated metal rollers, placed close together and revolving at unequal speeds, which cut, tear, and twist the leaf. The Rotorvane consists of a horizontal barrel with a feed hopper at one end and a perforated plate at the other. Forced through the barrel by a screw-type rotating shaft fitted with vanes at the centre, the leaf is distorted by resistor plates on the inner surface of the barrel and is cut at the end plate. The nontraditional distorting machines can burst leaf cells so thoroughly that in many cases they render the withering stage unnecessary. However, unlike traditional rolling, they do not produce the larger leafy grades of tea.

Fermentation

Fermentation commences when leaf cells are broken during rolling and continues when the rolled leaf is spread on tables or perforated aluminum trays under controlled conditions of temperature, humidity, and aeration. The process actually is not fermentation at all but a series of chemical reactions. The most important is the oxidation by polyphenol oxidase of some polyphenols into compounds that combine with other polyphenols to form orange-red compounds called theaflavins. The theaflavins react with more units to form the thearubigins, which are responsible for the transformation of the leaf to a dark brown or coppery colour. The thearubigins also react with amino acids and sugars to form flavour compounds that may be partly lost if fermentation is prolonged. In general, theaflavin is associated with the brightness and brisk taste of brewed tea, while thearubigin is associated with strength and colour.

In traditional processing, optimum fermentation is reached after two to four hours. This time can be halved in fermenting leaf broken by the Legg cutter, CTC machine, and Rotorvane. In skip fermentation, the leaf is spread in aluminum skips, or boxes, with screened bottoms. Larger boxes are used in trough fermentation, and in continuous fermentation the leaf is spread on trays on a conveyor system. In all of these fermentation systems the leaf is aerated by forced air (oxygen being necessary for the action of the enzymes), and it is brought by automated conveyor to the dryer.

Drying

At this stage, heat inactivates the polyphenol enzymes and dries the leaf to a moisture content of about 3 percent. It also caramelizes sugars, thereby adding flavours to the finished product, and imparts the black colour associated with fermented tea.

Traditionally, fermented leaf was dried on large pans or screens over fire, but since the late 19th century, heated forced air has been used. A mechanized drier consists of a large chamber into the bottom of which hot air is blown as the leaf is fed from the top on a series of descending conveyors. The dried leaf is then cooled quickly to prevent overdrying and loss of quality. Modern innovations on the drier are the hot-feed drier, where hot air is supplied separately to the feeder to arrest fermentation immediately as the leaf is fed, and the fluid-bed drier, where the leaf moves from one end of the chamber to the other over a perforated plate in a liquid fashion.

Green tea

In preparing unfermented tea, the oxidizing enzymes are killed by steamblasting the freshly plucked leaf in perforated drums or by roasting it in hot iron pans prior to rolling. The leaf is then subjected to further heating and rolling until it turns dark green and takes a bluish tint. The leaves are finally dried to a moisture content of 3 to 4 percent and are either crushed into small pieces or ground to a powder.

With the inactivation of polyphenol oxidase, the polyphenols are not oxidized and therefore remain colourless, allowing the processed leaf to remain green. The absence of theaflavins and thearubigins in the finished leaf also gives the beverage a weaker flavour than black tea.

Oolong tea

After a brief withering stage, the leaf is lightly rolled by hand until it becomes red and fragrant. For oolong it is then fermented for about one-half, and for pouchong for one-quarter, of the time allowed for black tea. Fermentation is stopped by heating in iron pans, and the leaf is subjected to more rolling and heating until it is dried.

Packaging

Sorting and grading

The first step in packaging tea is grading it by particle size, shape, and cleanliness. This is carried out on mechanical sieves or sifters fitted with meshes of appropriate size. With small-sized teas in demand, some processed teas are broken or cut again at this stage to get a higher proportion of broken grades. Undesirable particles, such as pieces of tough stalk and fibre, are removed by hand or by mechanical extractor. Winnowing by air removes dust, fibres, and fluff.

Packing

Teas are packed in airtight containers in order to prevent absorption of moisture, which is the principal cause of loss of flavour during storage. Packing chests are usually constructed of plywood, lined with aluminum foil and paper, and sealed with the same material. Also used are corrugated cardboard boxes lined with aluminum foil and paper or paper sacks lined with plastic.

Blended teas are sold to consumers as loose tea, which is packed in corrugated paper cartons lined with aluminum foil, in metal tins, and in fancy packs such as metallized plastic sachets, or they are sold in tea bags made of special porous paper. Tea bags are mainly packed with broken-grade teas.

Instant tea

Instant teas are produced from black tea by extracting the liquor from processed leaves, tea wastes, or undried fermented leaves, concentrating the extract under low pressure, and drying the concentrate to a powder by freeze-drying, spray-drying, or vacuum-drying. Low temperatures are used to minimize loss of flavour and aroma. Instant green teas are produced by similar methods, but hot water is used to extract liquor from powdered leaves. Because all instant teas absorb moisture, they are stored in airtight containers or bottles.

Preparing The Beverage

Blending

Tea sold to the consumer is a blend of as many as 20 to 40 teas of different characteristics, from a variety of estates, and from more than one country. Price is an important factor, with cheap teas (called fillers) used to round off a blend and balance cost. Blends are often designed to be of good average character without outstanding quality, but distinctive blends—for example, with a flavour of seasonal Ceylon tea or the pungency and strength of Assam tea—are also made.

Brewing

A tea infusion is best made by pouring water just brought to the boil over dry tea in a warm teapot and steeping it from three to five minutes. The liquor is separated from the spent leaves and may be flavoured with milk, sugar, or lemon.

Tasting

Professional tasters, sampling tea for the trade, taste but do not consume a light brew in which the liquor is separated from the leaf after five to six minutes. The appearance of both the dry and infused leaf is observed, and the aroma of vapour, colour of liquor, and creaming action (formation of solids when cooled) are assessed. Finally the liquor is taken into the mouth with a sucking noise, swirled around the tongue, brought into contact with the palate, cheek, and gums, and then drawn to the back of the mouth and up to the olfactory nerve in the nose before being expectorated. The liquor is thus felt, tasted, and smelled. Tasters have a large glossary of terms for the evaluation of tea, but the less-demanding consumer drinks it as a thirst quencher and stimulant and for its distinctive sour-harsh taste.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.


#502 2019-09-13 15:31:55

- Monox D. I-Fly

- Member

- From: Indonesia

- Registered: 2015-12-02

- Posts: 2,000

Re: Miscellany

The most important chemicals in tea are the tannins, or polyphenols, which are colourless, bitter-tasting substances that give the drink its astringency.

I heard that tannin is good for combating snake venom...

Actually I never watch Star Wars and not interested in it anyway, but I choose a Yoda card as my avatar in honor of our great friend bobbym who has passed away.

May his adventurous soul rest in peace at heaven.


#503 2019-09-14 00:01:13

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,200

Re: Miscellany

ganesh wrote: The most important chemicals in tea are the tannins, or polyphenols, which are colourless, bitter-tasting substances that give the drink its astringency.

I heard that tannin is good for combating snake venom...

Is it so? I don't know.


#504 2019-09-15 00:20:07

- Jai Ganesh

- Administrator

- Registered: 2005-06-28

- Posts: 52,200

Re: Miscellany

405) Camera

Camera, in photography, device for recording an image of an object on a light-sensitive surface; it is essentially a light-tight box with an aperture to admit light focused onto a sensitized film or plate.

Though there are many types of cameras, all include five indispensable components: (1) the camera box, which holds and protects the sensitive film from all light except that entering through the lens; (2) film, on which the image is recorded, a light-sensitive strip usually wound on a spool, either manually or automatically, as successive pictures are taken; (3) the light control, consisting of an aperture or diaphragm and a shutter, both often adjustable; (4) the lens, which focuses the light rays from the subject onto the film, creating the image, and which is usually adjustable by moving forward or back, changing the focus; and (5) the viewing system, which may be separate from the lens system (usually above it) or may operate through it by means of a mirror.

The earliest camera was the camera obscura, which was adapted to making a permanent image by Joseph Nicéphore Niépce and Louis-Jacques-Mandé Daguerre of France in the 1820s and 1830s. Many improvements followed in the 19th century, notably flexible film, developed and printed outside the camera. In the 20th century a variety of cameras was developed for many different purposes, including aerial photography, document copying, and scientific research.

Camera, lightproof box or container, usually fitted with a lens, which gathers incoming light and concentrates it so that it can be directed toward the film (in an optical camera) or the imaging device (in a digital camera) contained within. Today there are many different types of camera in use, all of them more or less sophisticated versions of the camera obscura, which dates back to antiquity. Nearly all of them are made up of the same basic parts: a body (the lightproof box), a lens and a shutter to control the amount of light reaching the light-sensitive surface, a viewfinder to frame the scene, and a focusing mechanism.

Still Cameras

Focusing and Composing the Scene

Except for pinhole cameras, which focus the image on the film through a tiny hole, all other cameras use a lens for focusing. The focal length of a lens, i.e., the distance between the rear of the lens (when focused on infinity) and the film (or imaging device), determines the angle of view and the size of objects as they appear on the imaging surface. The image is focused on that surface by adjusting the distance between the lens and the surface. In most 35-mm cameras (among the most widely used of modern optical cameras) and digital cameras this is done by rotating the lens, thus moving it closer to or farther from the film or imaging device. With twin-lens reflex and larger view cameras, the whole lens and the panel to which it is attached are moved toward or away from the film.
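The link between focal length and angle of view can be made concrete with the standard thin-lens approximation for a lens focused at infinity: angle = 2 · atan(d / 2f), where d is the frame dimension and f the focal length. The sketch below assumes that approximation and the usual 36 mm frame width of 35-mm film; the function name is hypothetical.

```python
import math


def angle_of_view_deg(frame_dimension_mm: float, focal_length_mm: float) -> float:
    """Angle of view in degrees across one frame dimension.

    Assumes a rectilinear lens focused at infinity (thin-lens approximation).
    """
    return math.degrees(2 * math.atan(frame_dimension_mm / (2 * focal_length_mm)))


FRAME_WIDTH_MM = 36.0  # width of a 35-mm film frame

for focal_length in (28, 50, 200):
    angle = angle_of_view_deg(FRAME_WIDTH_MM, focal_length)
    print(f"{focal_length} mm lens: {angle:.1f} degrees horizontally")
```

A 50 mm lens covers roughly 39.6 degrees horizontally on this frame. Shorter focal lengths give a wider view and smaller objects on the film, and longer focal lengths give the opposite.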

To view the subject for composing (and, usually, to help bring it into focus) nearly every camera has some kind of viewfinder. One of the simplest types, employed in most view cameras, is a screen that is placed on the back of the camera and replaced by the film in making the exposure. This time-consuming procedure is avoided in the modern 35-mm single-lens (and other) reflex cameras by placing the screen in a special housing on top of the camera. Inside the camera, in front of the film plane, there is a movable mirror that bounces the image from the lens to the screen for viewing and focusing, and then flips out of the way when the shutter is tripped, so that the image hits the film instead of the mirror. The mirror returns automatically to place after the exposure has been made. In rangefinder cameras the subject is generally viewed by means of two separate windows, one of which views the scene directly and the other of which contains an adjustable optical mirror device. When this device is adjusted by rotating the lens, the image entering through the lens can be brought into register, at the eyepiece, with the image from the direct view, thereby focusing the subject on the film. Digital cameras have an optical viewfinder, a liquid crystal display (LCD) screen, or both. Optical viewfinders are common in point-and-shoot cameras. An LCD screen allows the user to see the photograph's content before the picture is taken and after, allowing the deletion of unwanted pictures.

Controlling the Light Entering the Camera

The speed of a lens is indicated by reference to its maximum opening, or aperture, through which light enters the camera. This aperture, or f-stop, is controlled by an iris diaphragm (a series of overlapping metal blades that form a circle with a hole in the center whose diameter can be increased or decreased as desired) inside the lens. The higher the f-stop number, the smaller the aperture, and vice versa.
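The inverse relation between f-stop number and opening follows from the usual definition of the f-number as focal length divided by aperture diameter. The sketch below assumes that definition; the function names and sample values are illustrative only.

```python
def f_number(focal_length_mm: float, aperture_diameter_mm: float) -> float:
    """f-number N = focal length / diameter of the aperture opening."""
    return focal_length_mm / aperture_diameter_mm


def relative_light(n_from: float, n_to: float) -> float:
    """Light admitted at f-number n_to relative to n_from.

    The light admitted scales with the area of the opening, i.e. with 1 / N**2.
    """
    return (n_from / n_to) ** 2


print(f_number(50, 25))        # 2.0, i.e. f/2
print(relative_light(2, 4))    # 0.25: f/4 admits a quarter of the light of f/2
```

Because the admitted light depends on the area of the opening, doubling the f-number cuts the light to one quarter rather than one half.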

A shutter controls the time during which light is permitted to enter the camera. There are two basic types of shutter, leaf-type and focal-plane. The leaf-type shutter employs a ring of overlapping metal blades similar to those of the iris diaphragm, which may be closed or opened to the desired degree. It is normally located between the lens elements but occasionally is placed behind or in front of the lens. The focal-plane shutter is located just in front of the film plane and has one or two cloth or metal curtains that travel vertically or horizontally across the film frame. By adjusting the shutter speed in conjunction with the width of aperture, the proper amount of light (determined by using a light meter and influenced by the relative sensitivity of the film being used) for a good exposure can be obtained.
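The trade-off between shutter speed and aperture is often expressed with the exposure value, EV = log2(N² / t), where N is the f-number and t the shutter time in seconds. Settings with the same EV admit the same amount of light. The sketch below assumes that conventional formula; the function name and the sample settings are illustrative.

```python
import math


def exposure_value(f_number: float, shutter_time_s: float) -> float:
    """Exposure value for a given f-number and shutter time in seconds."""
    return math.log2(f_number ** 2 / shutter_time_s)


wide_and_fast = exposure_value(4, 1 / 100)   # f/4 at 1/100 s
narrow_and_slow = exposure_value(8, 1 / 25)  # f/8 at 1/25 s

# Both settings pass the same amount of light to the film.
print(math.isclose(wide_and_fast, narrow_and_slow))  # True
```

Closing the aperture from f/4 to f/8 cuts the light to a quarter, so the shutter has to stay open four times as long to keep the exposure unchanged.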

Features of Modern Cameras

Most of today's 35-mm cameras, both rangefinder and reflex models, incorporate a rapid film-transport mechanism, lens interchangeability (whereby lenses of many focal lengths, such as wide-angle and telephoto, may be used with the same camera body), and a built-in light meter. Many also have an automatic exposure device whereby either the shutter speed or the aperture is regulated automatically (by means of a very sophisticated solid-state electronics system) to produce the "correct" exposure. Accessories include filters, which correct for deficiencies in film sensitivity; flash bulbs and flash mechanisms for supplying light; and monopods and tripods, for steady support.

Simple box cameras, including cameras of the Eastman Kodak Instamatic type, are fixed-focus cameras with limited or no control over exposure. Twin-lens reflex cameras use one lens solely for viewing, while the other focuses the image on the film. Also very popular are compact 35-mm rangefinder cameras; 126 cartridge cameras; and the subminiature cameras, including the 110 "pocket" variation of the Instamatic type and the Minox, which uses 9.5-mm film. Other categories in use include roll- and sheet-film single-lens reflex (SLR) cameras that use 120 and larger size films; self-processing Polaroid cameras; press cameras and view cameras that use 2¼ × 3¼ in., 4 × 5 in., 5 × 7 in., 8 × 10 in., and 11 × 14 in. film sizes; stereo cameras, the double slides from which require a special viewer; and various special types such as the super wide-angle and the panoramic cameras. (The numbers 110, 120, and 126 are film-size designations from the manufacturer and do not refer to actual measurements.) Digital cameras are essentially no different in operation but capture the image electronically rather than via a photographic emulsion.

The smaller, pocket-sized, automatic cameras of the Advanced Photo System (APS), introduced in 1996, are unique in that they are part of an integrated system. Using magnetic strips on the film to communicate with the photofinishing equipment, the camera can communicate shutter speed, aperture setting, and lighting conditions for each frame to the computerized photofinishing equipment, which can then compensate to avoid over- or underexposed photographic prints. Basic features of the APS cameras are drop-in loading, three print formats (classic, or 4 by 6 in.; hi vision, or 4 by 7 in.; and panoramic, or 4 by 11.5 in.) at the flick of a switch, and auto-focus, auto-exposure, "point-and-shoot" operation.

Digital cameras have several unique features. Resolution is made up of building blocks called pixels, one million of which are called a megapixel. Digital cameras have resolutions ranging from less than one megapixel to greater than seven megapixels. With more megapixels, more picture detail is captured, resulting in sharper, larger prints. Focus is a function of "zoom." Most digital cameras have an optical zoom, a digital zoom, or both. An optical zoom lens actually moves outward toward the subject to take sharp close-up photographs; this is the same kind of zoom lens found in traditional cameras. Digital zoom is a function of software inside the camera that crops the edges from a photograph and electronically enlarges the center portion of the image to fill the frame, resulting in a photograph with less detail. Some models also have a macro lens for close-ups of small, nearby objects. Storage of digital photographs may be in the camera's internal memory or in removable magnetic cards, sticks, or disks. These images can be transferred to a computer for viewing and editing or may be viewed on the camera's liquid crystal display. Digital cameras typically also have the ability to record video, but have less storage capacity and fewer video features than camcorders.
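The arithmetic behind megapixels, print size, and digital zoom can be sketched as follows. The 300 pixels-per-inch print density is an assumed rule of thumb, not a figure from the text above, and the function names and sample image size are hypothetical.

```python
def megapixels(width_px: int, height_px: int) -> float:
    """Total pixel count expressed in millions of pixels."""
    return width_px * height_px / 1_000_000


def print_size_in(width_px: int, height_px: int, ppi: int = 300) -> tuple[float, float]:
    """Print dimensions in inches at an assumed print density (pixels per inch)."""
    return (width_px / ppi, height_px / ppi)


def digital_zoom_crop(width_px: int, height_px: int, zoom: float) -> tuple[int, int]:
    """Pixel dimensions of the centre crop that a digital zoom enlarges."""
    return (int(width_px / zoom), int(height_px / zoom))


width, height = 3000, 2000
print(megapixels(width, height))                 # 6.0
print(print_size_in(width, height))              # (10.0, 6.666...)
crop = digital_zoom_crop(width, height, zoom=2)
print(crop, megapixels(*crop))                   # (1500, 1000) 1.5
```

A 2x digital zoom keeps only a quarter of the captured pixels, which is why the enlarged result shows less detail than the same framing taken with an optical zoom.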

The marriage of microelectronics and digital technology led to the development of the camera phone, a cellular telephone that also has picture- (and video-) taking capability; smartphones, which integrate a range of applications into a cellphone, also typically include a camera. Some such phones can immediately send the picture to another camera phone or computer via the Internet or through the telephone network, offering the opportunity to take and share pictures in real time. Unlike the traditional camera, and to some extent the equivalent digital camera, which are used primarily for scheduled events or special occasions, the camera phone is available for impromptu or unanticipated photographic opportunities.

Motion Picture Cameras

The motion picture camera comes in a variety of sizes, from 8 mm to 35 mm and 75 mm. Motion picture film comes in spools or cartridges. The spool type, employed mostly in 16- and 35-mm camera systems, must be threaded through the camera and attached to the take-up spool by hand, whereas a film cartridge—available for the super-8-mm systems—avoids this procedure. In all modern movie cameras the film is driven by a tiny electric motor that is powered by batteries.

Motion picture cameras all operate on the same basic principles. Exposures are usually made at a rate of 18 or 24 frames per second (fps), which means that as the film goes through the camera it stops for a very brief moment to expose each frame. This is accomplished in nearly all movie cameras by a device called a rotary shutter, basically a half-circle of metal that spins, alternately opening and closing an aperture, behind which is located the film. To make the film travel along its path and hold still for the exposure of each frame, a device called a claw is required. This is another small piece of metal that alternately pops into the sprocket holes or perforations in the film, pulls the film down, retracts to release the film while the frame is being exposed, and finally returns to the top of the channel in which it moves to grasp the next frame. The movements of the shutter and claw are synchronized, so that the shutter is closed while the claw is pulling the frame downward and open for the instant that the frame is motionless in its own channel or gate.
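The frame rate and the shape of the rotary shutter together fix how long each frame is exposed. The sketch below assumes the shutter turns once per frame and that a half-circle shutter leaves the aperture open for 180 degrees of each turn; the function name is hypothetical.

```python
def exposure_time_s(frames_per_second: float, open_angle_deg: float = 180.0) -> float:
    """Exposure time per frame for a rotary shutter.

    Assumes one shutter revolution per frame; open_angle_deg is the part of
    the revolution during which the aperture is uncovered.
    """
    return (open_angle_deg / 360.0) / frames_per_second


for fps in (18, 24):
    t = exposure_time_s(fps)
    print(f"{fps} fps: 1/{round(1 / t)} s per frame")
```

With a half-circle shutter, 24 fps gives an exposure of 1/48 s per frame and 18 fps gives 1/36 s. The other half of each revolution is the time available for the claw to pull down the next frame.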

Lenses for movie cameras also come in "normal," wide-angle, and long focal lengths. Some older cameras had a turret on which were mounted all three lens types. The desired lens could be fixed into position by simply rotating the turret. Many super-8 cameras come with a single zoom lens, incorporating a number of focal lengths that are controlled by moving a certain group of lens elements toward or away from the film. Most of these cameras have an automatic exposure device that regulates the f-stop according to the reading made by a built-in electric eye. Movie camera lenses are focused in the same way as are still camera lenses. For viewing purposes, a super-8 uses a beam splitter—a partially silvered reflector that diverts a small percentage of the light to a ground-glass viewfinder while allowing most of the light to reach the film. Other cameras have a mirror-shutter system that transmits all the light, at intervals, alternately to film and viewfinder. Many of the super-8 cameras also contain some kind of rangefinder, built into the focusing screen, for precise focusing.

Development of the Camera

The original concept of the camera dates from Grecian times, when Aristotle referred to the principle of the camera obscura [Lat.,=dark chamber] which was literally a dark box—sometimes large enough for the viewer to stand inside—with a small hole, or aperture, in one side. (A lens was not employed for focusing until the Middle Ages.) An inverted image of a scene was formed on an interior screen; it could then be traced by an artist. The first diagram of a camera obscura appeared in a manuscript by Leonardo da Vinci in 1519, but he did not claim its invention.

The recording of a negative image on a light-sensitive material was first achieved by the Frenchman Joseph Nicéphore Niépce in 1826; he coated a piece of paper with asphalt and exposed it inside the camera obscura for eight hours. Although various kinds of devices for making pictures in rapid succession had been employed as early as the 1860s, the first practical motion picture camera, made feasible by the invention of the first flexible (paper base) films, was built in 1887 by E. J. Marey, a Frenchman. Two years later Thomas Edison invented the first commercially successful camera. However, cinematography was not accessible to amateurs until 1923, when Eastman Kodak produced the first 16-mm reversal safety film, and Bell & Howell introduced cameras and projectors with which to use it. Systems using 8-mm film were introduced in 1932; super-8, with its smaller sprocket holes and larger frame size, appeared in 1965. A prototype of the digital camera was developed in 1975 by Eastman Kodak, but digital cameras were not commercialized until the 1990s. Since then they have gradually superseded many film-based cameras, both for consumers and professionals, leading many manufacturers to eliminate or reduce the number of the film cameras they produce.

It appears to me that if one wants to make progress in mathematics, one should study the masters and not the pupils. - Niels Henrik Abel.

Nothing is better than reading and gaining more and more knowledge - Stephen William Hawking.

#505 2019-09-17 00:06:18

- Jai Ganesh

Re: Miscellany

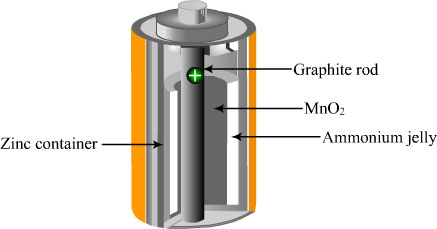

406) Button Cell

A watch battery or button cell is a small single-cell battery shaped as a squat cylinder, typically 5 to 25 mm (0.197 to 0.984 in) in diameter and 1 to 6 mm (0.039 to 0.236 in) high, resembling a button. A metal can forms the bottom body and positive terminal of the cell. An insulated top cap is the negative terminal.

Button cells are used to power small portable electronic devices such as wrist watches, pocket calculators, artificial cardiac pacemakers, implantable cardiac defibrillators, automobile keyless entry transmitters, and hearing aids. Wider variants are usually called coin cells. Devices using button cells are usually designed around a cell giving a long service life, typically well over a year in continuous use in a wristwatch. Most button cells have low self-discharge and hold their charge for a long time if not used. Relatively high-power devices such as hearing aids may use zinc–air cells, which have much higher capacity for a given size but dry out after a few weeks even if not used.

Button cells are single cells, usually disposable primary cells. Common anode materials are zinc or lithium. Common cathode materials are manganese dioxide, silver oxide, carbon monofluoride, cupric oxide or oxygen from the air. Mercuric oxide button cells were formerly common, but are no longer available due to the toxicity and environmental effects of mercury.

Cells of different chemical composition made in the same size are mechanically interchangeable. However, the composition can affect service life and voltage stability. Using the wrong cell may lead to short life or improper operation (for example, light metering on a camera requires a stable voltage, and silver cells are usually specified). Sometimes different cells of the same type and size and specified capacity in milliampere-hour (mAh) are optimised for different loads by using different electrolytes, so that one may have longer service life than the other if supplying a relatively high current.

Button cells are very dangerous for small children. Button cells that are swallowed can cause severe internal burns and significant injury or death.

Properties of cell chemistries

Alkaline batteries are made in the same button sizes as the other types, but typically provide less capacity and less stable voltage than more costly silver oxide or lithium cells. They are often sold as watch batteries, and bought by people who do not know the difference.

Silver cells may have very stable output voltage until it suddenly drops very rapidly at end of life. This varies for individual types; one manufacturer (Energizer) offers three silver oxide cells of the same size, 357-303, 357-303H and EPX76, with capacities ranging from 150 to 200 mAh, voltage characteristics ranging from gradually reducing to fairly constant, and some stated to be for continuous low drain with high pulse on demand, others for photo use.

Mercury batteries also supply a stable voltage, but are now banned in many countries due to their toxicity and environmental impact.

Zinc-air batteries use air as the depolarizer and have much higher capacity than other types, as they take that air from the atmosphere. Cells have an air-tight seal which must be removed before use; cells will then dry out in a few weeks, regardless of use.

For comparison, the properties of some cells from one manufacturer of different types with diameter 11.6 mm and height 5.4 mm are listed:

• Silver: capacity 200 mAh to an end-point of 0.9 V, internal resistance 5–15 ohms, weight 2.3 g

• Alkaline (manganese dioxide): 150 mAh to an end-point of 0.9 V, 3–9 ohms, 2.4 g

• Mercury: 200 mAh, 2.6 g

• Zinc-air: 620 mAh, 1.9 g

Examining datasheets for a manufacturer's range may show a high-capacity alkaline cell with a capacity as high as one of the lower-capacity silver types; or a particular silver cell with twice the capacity of some particular alkaline cell. If the powered equipment requires a relatively high voltage (e.g., 1.3 V) to operate correctly, a silver cell with a flat discharge characteristic will give much longer service than an alkaline cell—even if it has the same specified capacity in mAh to an end-point of 0.9 V. If some device seems to "eat up" batteries after the original supplied by the manufacturer is replaced, it may be useful to check the device's requirements and the replacement battery's characteristics. For digital calipers, in particular, some are specified to require at least 1.25 V to operate, others 1.38 V.

While alkaline, silver oxide, and mercury batteries of the same size may be mechanically interchangeable in any given device, use of a cell of the right voltage but unsuitable characteristics can lead to short battery life or failure to operate equipment. Common lithium batteries, with a terminal voltage around 3 volts, are not made in sizes interchangeable with 1.5 volt cells. Use of a battery of significantly higher voltage than equipment is designed for can cause permanent damage.

Type designation

International standard IEC 60086-3 defines an alphanumeric coding system for "Watch batteries". Manufacturers often have their own naming system; for example, the cell called LR1154 by the IEC standard is named AG13, LR44, 357, A76, and other names by different manufacturers. The IEC standard and some others encode the case size so that the numeric part of the code is uniquely determined by the case size; other codes do not encode size directly.

Electrochemical system

For types with stable voltage falling precipitously at end-of-life (cliff-top voltage-versus-time graph), the end-voltage is the value at the "cliff-edge", after which the voltage drops extremely rapidly. For types which lose voltage gradually (slope graph, no cliff-edge) the end-point is the voltage beyond which further discharge will cause damage to either the battery or the device it is powering, typically 1.0 or 0.9 V.

Common names are conventional rather than uniquely descriptive; for example, a silver (oxide) cell has an alkaline electrolyte.

The first letter of the designation identifies the electrochemical system. L (alkaline), S (silver oxide), and C (lithium) type cells are today the most commonly used types in quartz watches, calculators, small PDA devices, computer clocks, and blinky lights. Miniature zinc-air batteries (P type) are used in hearing aids and medical instruments. In the IEC system, larger cells may have no prefix for the chemical system, indicating they are zinc-carbon batteries; such types are not available in button cell format.

The second letter, R, indicates a round (cylindrical) form.

The standard only describes primary batteries. Rechargeable types made in the same case size will carry a different prefix not given in the IEC standard, for example some ML and LiR button cells use rechargeable lithium technology.

Package size

Package size of button batteries using standard names is indicated by a 2-digit code representing a standard case size, or a 3- or 4-digit code representing the cell diameter and height. The first one or two digits encode the outer diameter of the battery in whole millimeters, rounded down; exact diameters are specified by the standard, and there is no ambiguity; e.g., any cell with an initial 9 is 9.5 mm in diameter, no other value between 9.0 and 9.9 is used. The last two digits are the overall height in tenths of a millimeter.

Examples:

• CR2032: lithium, 20 mm diameter, 3.2 mm height

• CR2025: lithium, 20 mm diameter, 2.5 mm height

• SR516: silver, 5.8 mm diameter, 1.6 mm height

• LR1154/SR1154: alkaline/silver, 11.6 mm diameter, 5.4 mm height. The two-digit codes LR44/SR44 are often used for this size
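The naming rule above can be sketched in a few lines of Python. This is only an illustration, not an implementation of IEC 60086-3: the function name `parse_designation` and the `ButtonCell` record are hypothetical, the chemistry letters are limited to those mentioned in this post, and the only exact diameters used are the three quoted in the text (5.8, 9.5 and 11.6 mm). For any other leading digits the whole-millimeter value is returned and flagged as not exact.

```python
import re
from dataclasses import dataclass

# Chemistry letters mentioned in this post only (assumption: not a full list).
CHEMISTRY = {"L": "alkaline", "S": "silver", "C": "lithium", "P": "zinc-air"}

# Exact diameters quoted in the text. Other sizes fall back to the
# rounded-down whole-millimeter value encoded in the name.
EXACT_DIAMETER_MM = {5: 5.8, 9: 9.5, 11: 11.6}

# Chemistry letter, "R" for round, 1-2 diameter digits, 2 height digits,
# then optional suffix letters.
_PATTERN = re.compile(r"([A-Z])R(\d{1,2})(\d{2})([A-Z]*)")


@dataclass
class ButtonCell:
    chemistry: str
    diameter_mm: float
    diameter_is_exact: bool
    height_mm: float


def parse_designation(code: str) -> ButtonCell:
    """Decode a 3- or 4-digit size code such as CR2032 or SR516."""
    match = _PATTERN.fullmatch(code.strip().upper())
    if match is None:
        # Two-digit case codes such as LR44 do not encode dimensions.
        raise ValueError(f"not a 3- or 4-digit size code: {code!r}")
    letter, diameter_digits, height_digits, _suffix = match.groups()
    whole_mm = int(diameter_digits)
    return ButtonCell(
        chemistry=CHEMISTRY.get(letter, "unknown"),
        diameter_mm=EXACT_DIAMETER_MM.get(whole_mm, float(whole_mm)),
        diameter_is_exact=whole_mm in EXACT_DIAMETER_MM,
        height_mm=int(height_digits) / 10,  # last two digits are tenths of a mm
    )
```

For instance, `parse_designation("SR516")` gives a silver cell 5.8 mm across and 1.6 mm high, while a two-digit name such as `LR44` is rejected because it is a case-size code rather than a diameter and height.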

Some coin cells, particularly lithium, are made with solder tabs for permanent installation, such as to power memory for configuration information of a device. The complete nomenclature will have prefixes and suffixes to indicate special terminal arrangements. For example, there is a plug-in and a solder-in CR2032, a plug-in and three solder-in BR2330s in addition to CR2330s, and many rechargeables in 2032, 2330, and other sizes.

Letter suffix

After the package code, the following additional letters may optionally appear in the type designation to indicate the electrolyte used:

• P: potassium hydroxide electrolyte

• S: sodium hydroxide electrolyte

• No letter: organic electrolyte

• W: the battery complies with all the requirements of the international IEC 60086-3 standard for watch batteries.

Other package markings

Apart from the type code described in the preceding section, watch batteries should also be marked with

• the name or trademark of the manufacturer or supplier;

• the polarity (+);

• the date of manufacturing.

Date codes

The date is often given as a 2-letter code (sometimes on the side of the battery) in which the first letter identifies the manufacturer and the second the year of manufacture. For example:

• YN – the letter N is the 14th letter in the alphabet – indicates the cell was manufactured in 2014.

There is no universal standard.

The manufacturing date can be abbreviated to the last digit of the year, followed by a digit or letter indicating the month, where O, Y, and Z are used for October, November and December, respectively (e.g., 01 = January 1990 or January 2000, 9Y = November 1999 or November 2009).
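Because only the last digit of the year is stored, such a code is ambiguous by decade. The sketch below decodes the month and lists the candidate years within a search window. The function name `decode_date_code` and the `earliest`/`latest` parameters are hypothetical, and the use of digits 1 to 9 for January to September is inferred from the "01 = January" example rather than stated outright.

```python
# Month symbols: digits 1-9 for January-September (inferred from the
# "01 = January" example), then O, Y, Z for October-December.
MONTH_CODES = {str(d): d for d in range(1, 10)}
MONTH_CODES.update({"O": 10, "Y": 11, "Z": 12})


def decode_date_code(code, earliest=1990, latest=2029):
    """Return (month, candidate_years) for a two-character date code.

    The first character is the last digit of the year, the second is the
    month symbol. `earliest` and `latest` bound the years considered.
    """
    code = code.strip().upper()
    if len(code) != 2 or code[0] not in "0123456789" or code[1] not in MONTH_CODES:
        raise ValueError(f"unrecognised date code: {code!r}")
    year_digit = int(code[0])
    month = MONTH_CODES[code[1]]
    years = [y for y in range(earliest, latest + 1) if y % 10 == year_digit]
    return month, years
```

With the window set to 1990 through 2009, `decode_date_code("9Y", 1990, 2009)` yields month 11 with candidate years 1999 and 2009, matching the example in the text.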

Common manufacturer code

A code used by some manufacturers is AG (alkaline) or SG (silver) followed by a number, where 1 equates to standard 621, 2 to 726, 3 to 736, 4 to 626, 5 to 754, 6 to 920 or 921, 7 to 926 or 927, 8 to 1120 or 1121, 9 to 936, 10 to 1130 or 1131, 11 to 721, 12 to 1142, and 13 to 1154. To those familiar with the chemical symbol for silver, Ag, this may suggest incorrectly that AG cells are silver.
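The correspondence above amounts to a lookup table. As a minimal sketch (the function name `manufacturer_to_iec` is made up for illustration, and the "LR"/"SR" prefixes follow the LR1154/SR1154 example given earlier), it could be written as:

```python
# Size numbers copied from the paragraph above; some AG/SG numbers
# correspond to two standard sizes.
AG_TO_SIZE = {
    1: ("621",), 2: ("726",), 3: ("736",), 4: ("626",), 5: ("754",),
    6: ("920", "921"), 7: ("926", "927"), 8: ("1120", "1121"),
    9: ("936",), 10: ("1130", "1131"), 11: ("721",), 12: ("1142",),
    13: ("1154",),
}

# AG cells are alkaline (not silver), SG cells are silver.
IEC_PREFIX = {"AG": "LR", "SG": "SR"}


def manufacturer_to_iec(code):
    """Translate a code such as 'AG13' into the matching IEC-style names."""
    code = code.strip().upper()
    prefix, number_text = code[:2], code[2:]
    try:
        sizes = AG_TO_SIZE[int(number_text)]
        iec_prefix = IEC_PREFIX[prefix]
    except (KeyError, ValueError):
        raise ValueError(f"unrecognised manufacturer code: {code!r}") from None
    return [iec_prefix + size for size in sizes]
```

Here `manufacturer_to_iec("AG13")` returns `["LR1154"]`, and `manufacturer_to_iec("SG6")` returns both `"SR920"` and `"SR921"`.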

Common applications

• Timekeeping

o Electric wristwatches, both digital and analogue

o Backup power for personal computer real time clocks

• Backup power for SRAM

o Backup power for personal computer BIOS configuration data

o Various video game cartridges or memory cards where battery-powered RAM is used to store data

o PCMCIA static RAM memory cards

• Lighting

o Laser pointers

o Small LED flashlights

o Solar/electric candles

o LED bicycle head or tail lighting

o Red dot sights and electronic spotting scopes

• Pocket computers

o Calculators

o Small PDA devices

o Cyclocomputers

• Hearing aids

• Some remote controls, especially for keyless entry

• Various electronic toys (like Tamagotchi, Pokémon Pikachu or a Pokéwalker and other various digital pet devices)

• Battery-operated children's books

• Glucometers

• Security tokens

• Heart rate monitors

• Manual cameras with light meters

• LED throwies

• Digital thermometers

• Digital altimeters

• Electronic tuners for musical instruments

Rechargeable variants

In addition to disposable (single use) button cells, rechargeable batteries in many of the same sizes are available, with lower capacity than disposable cells. Disposable and rechargeable batteries are manufactured to fit into a holder or with solder tags for permanent connection. In equipment with a battery holder, disposable or rechargeable batteries may be used, if the voltage is compatible.

A typical use for a small rechargeable battery (in coin or other format) is to back up the settings of equipment which is normally permanently mains-powered, in the case of power failure. For example, many central heating controllers store operation times and similar information in volatile memory, lost in the case of power failure. It is usual for such systems to include a backup battery, either a disposable in a holder (current drain is extremely low and life is long) or a soldered-in rechargeable.

Rechargeable NiCd button cells were often components of the backup battery of older computers; non-rechargeable lithium button cells with a lifetime of several years are used in later equipment.

Rechargeable batteries typically have the same dimension-based numeric code with different letters; thus CR2032 is a disposable battery while ML2032, VL2032 and LIR2032 are rechargeables that fit in the same holder if not fitted with solder tags. It is mechanically possible, though hazardous, to fit a disposable battery in a holder intended for a rechargeable; holders are fitted in parts of equipment only accessible by service personnel in such cases.

Health issues

In large metropolitan regions small children are directly affected by the improper disposal of button cell batteries; Auckland, New Zealand, sees about 20 cases per year requiring hospitalization.

Small children are likely to swallow button cells, which look somewhat like sweets, and the results are often fatal. In Greater Manchester, England, with a population of 2,700,000, two children between 12 months and six years old died and five suffered life-changing injuries in the 18 months leading up to October 2014. In the United States, on average over 3,000 pediatric button battery ingestions are reported each year, with an increasing trend toward major and fatal outcomes. Coin cells of diameter 20 mm or greater cause the most serious injuries, even if discharged and intact.

Mercury or cadmium

Some button cells contain mercury or cadmium, which are toxic. In early 2013 the European Parliament Environment Committee voted for a ban on the export and import of a range of mercury-containing products such as button cells and other batteries to be imposed from 2020.

Lithium

Lithium cells, if ingested, are highly dangerous. In the pediatric population, of particular concern is the potential for one of these batteries to get stuck in the esophagus. Such impactions can deteriorate rapidly and cause severe tissue injury in as little as 2 hours. The damage is caused not by the contents of the battery but by the electric current that is created when the anode (negative) face of the battery comes into contact with the electrolyte-rich esophageal tissue. The surrounding water undergoes an electrolysis reaction that produces a build-up of sodium hydroxide (caustic soda) near the battery's anode face. This results in liquefactive necrosis of the tissue, a process whereby the tissue is effectively melted away by the alkaline solution. Severe complications can occur, such as erosion into nearby structures like the trachea or major blood vessels, the latter of which can cause fatal bleeds. While the only cure for an esophageal impaction is endoscopic removal, a recent study from Children's Hospital of Philadelphia by Rachel R. Anfang and colleagues found that early and frequent ingestion of honey or sucralfate suspension prior to removal can reduce the injury severity to a significant degree. As a result of these findings, the US-based National Capital Poison Center (Poison Control) updated its triage and treatment guideline for button battery ingestions to include the administration of honey and/or sucralfate as soon as possible after a known or suspected ingestion. Prevention efforts in the US by the National Button Battery Task Force in cooperation with industry leaders have led to changes in packaging and battery compartment design in electronic devices to reduce a child's access to these batteries. However, there is still a lack of awareness of their dangers across the general population and the medical community. Central Manchester University Hospital Trust warns that "a lot of doctors are unaware that this can cause harm".

#506 2019-09-19 00:10:56

- Jai Ganesh

Re: Miscellany

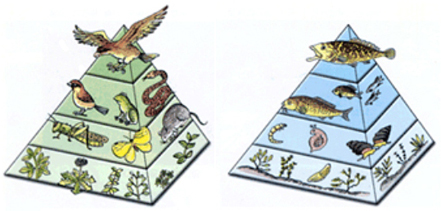

407) Food Chain

A food chain is a linear network of links in a food web starting from producer organisms (such as grass or trees, which use radiation from the Sun to make their food) and ending at apex predator species (like grizzly bears or killer whales), detritivores (like earthworms or woodlice), or decomposer species (such as fungi or bacteria). A food chain also shows how organisms are related to each other by the food they eat. Each level of a food chain represents a different trophic level. A food chain differs from a food web in that a web aggregates the complex network of different animals' feeding relations, whereas a chain follows only a direct, linear pathway of one animal at a time. Natural interconnections between food chains make up a food web. A common metric used to quantify food web trophic structure is food chain length. In its simplest form, the length of a chain is the number of links between a trophic consumer and the base of the web, and the mean chain length of an entire web is the arithmetic average of the lengths of all chains in the web.

Many food webs have a keystone species (such as sharks). A keystone species is a species that has a large impact on the surrounding environment and can directly affect the food chain. If a keystone species dies off, it can set the entire food chain off balance. Keystone species keep herbivores from depleting all of the foliage in their environment, thereby preventing a mass extinction.

Food chains were first introduced by the Arab scientist and philosopher Al-Jahiz in the 9th century and later popularized in a book published in 1927 by Charles Elton, which also introduced the food web concept.
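As a rough illustration of the chain-length metric, the sketch below enumerates every chain in a small made-up food web and averages their lengths. The species and feeding links are hypothetical, the web is assumed to contain no cycles, and a "chain" is taken here to run from a producer up to an organism that nothing else in the web eats.

```python
def chain_lengths(eaten_by):
    """Return the number of links in every producer-to-top chain.

    `eaten_by` maps each organism to the list of organisms that eat it.
    Assumes the web has no cycles.
    """
    consumers = {pred for preds in eaten_by.values() for pred in preds}
    producers = sorted(set(eaten_by) - consumers)  # organisms that eat nothing
    lengths = []

    def walk(organism, links):
        predators = eaten_by.get(organism, [])
        if not predators:          # top of a chain reached
            lengths.append(links)
            return
        for predator in predators:
            walk(predator, links + 1)

    for producer in producers:
        walk(producer, 0)
    return lengths


def mean_chain_length(eaten_by):
    """Arithmetic average of the lengths of all chains in the web."""
    lengths = chain_lengths(eaten_by)
    return sum(lengths) / len(lengths)


# Hypothetical example web.
web = {
    "grass": ["grasshopper", "rabbit"],
    "grasshopper": ["frog"],
    "frog": ["snake"],
    "rabbit": ["snake", "hawk"],
    "snake": ["hawk"],
}
```

In this example there are three chains from grass to hawk, with 4, 3 and 2 links, so the mean chain length of the web is 3.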

Food chain length

Food chain length is a continuous variable that provides a measure of the passage of energy and an index of ecological structure; its value increases as one counts progressively through the linkages, in linear fashion, from the lowest to the highest trophic (feeding) levels.

Food chains are often used in ecological modeling (such as a three species food chain). They are simplified abstractions of real food webs, but complex in their dynamics and mathematical implications.

Ecologists have formulated and tested hypotheses regarding the nature of ecological patterns associated with food chain length, such as length increasing with ecosystem size, reduction of energy at each successive level, or the proposition that long food chains are unstable. Food chain studies have an important role in ecotoxicology studies tracing the pathways and biomagnification of environmental contaminants.

Producers, such as plants, are organisms that utilize solar or chemical energy to synthesize starch. All food chains must start with a producer. In the deep sea, food chains centered on hydrothermal vents and cold seeps exist in the absence of sunlight. Chemosynthetic bacteria and archaea use hydrogen sulfide and methane from hydrothermal vents and cold seeps as an energy source (just as plants use sunlight) to produce carbohydrates; they form the base of the food chain. Consumers are organisms that eat other organisms. All organisms in a food chain, except the first organism, are consumers.

In a food chain, energy is transferred through each stage. However, not all the energy at one stage of the chain is absorbed by the organism at the next stage, so the amount of energy passed from one stage to the next decreases.
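The decline can be illustrated with a short calculation. The text gives no figure for how much energy is passed on, so the 10% transfer efficiency used in the example below is only a commonly quoted rule of thumb, and the function name and starting value are hypothetical.

```python
def energy_by_level(producer_energy, efficiency, levels):
    """Energy available at each trophic level, starting with the producer.

    `efficiency` is the assumed fraction of energy passed from one level
    to the next (between 0 and 1). It is an illustrative parameter, not a
    value taken from the text.
    """
    if not 0 < efficiency < 1:
        raise ValueError("efficiency must be between 0 and 1")
    energies = []
    energy = float(producer_energy)
    for _ in range(levels):
        energies.append(energy)
        energy *= efficiency  # only a fraction reaches the next level
    return energies


# Example: 10,000 units at the producer level, assumed 10% efficiency.
example = energy_by_level(10_000, 0.10, 4)
```

Under that assumption the four levels hold roughly 10,000, 1,000, 100 and 10 units, which shows why long chains leave little energy for the top consumers.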

#507 2019-09-21 00:12:17

- Jai Ganesh

Re: Miscellany

408) Lighthouse

Lighthouse, structure, usually with a tower, built onshore or on the seabed to serve as an aid to maritime coastal navigation, warning mariners of hazards, establishing their position, and guiding them to their destinations. From the sea a lighthouse may be identified by the distinctive shape or colour of its structure, by the colour or flash pattern of its light, or by the coded pattern of its radio signal. The development of electronic navigation systems has had a great effect on the role of lighthouses. Powerful lights are becoming superfluous, especially for landfall, but there has been a significant increase in minor lights and lighted buoys, which are still necessary to guide the navigator through busy and often tortuous coastal waters and harbour approaches. Among mariners there is still a natural preference for the reassurance of visual navigation, and lighted marks also have the advantages of simplicity, reliability, and low cost. In addition, they can be used by vessels with no special equipment on board, providing the ultimate backup against the failure of more sophisticated systems.

History Of Lighthouses

Lighthouses of antiquity

The forerunners of lighthouses proper were beacon fires kindled on hilltops, the earliest references to which are contained in the Iliad and the Odyssey (c. 8th century BCE). The first authenticated lighthouse was the renowned Pharos of Alexandria, which stood some 350 feet (about 110 metres) high. The Romans erected many lighthouse towers in the course of expanding their empire, and by 400 CE there were some 30 in service from the Black Sea to the Atlantic. These included a famous lighthouse at Ostia, the port of Rome, completed in 50 CE, and lighthouses at Boulogne, France, and Dover, England. A fragment of the original Roman lighthouse at Dover still survives.

The Phoenicians, trading from the Mediterranean to Great Britain, marked their route with lighthouses. These early lighthouses had wood fires or torches burning in the open, sometimes protected by a roof. After the 1st century CE, candles or oil lamps were used in lanterns with panes of glass or horn.

Medieval lighthouses

The decline of commerce in the Dark Ages halted lighthouse construction until the revival of trade in Europe about 1100 CE. The lead in establishing new lighthouses was taken by Italy and France. By 1500, references to lighthouses became a regular feature of books of travel and charts. By 1600, at least 30 major beacons existed.

These early lights were similar to those of antiquity, burning mainly wood, coal, or torches in the open, although oil lamps and candles were also used. A famous lighthouse of this period was the Lanterna of Genoa in Italy, probably established about 1139. It was rebuilt completely in 1544 as the impressive tower that remains a conspicuous seamark today. The keeper of the light in 1449 was Antonio Columbo, uncle of the Columbus who crossed the Atlantic. Another early lighthouse was built at Meloria, Italy, in 1157, which was replaced in 1304 by a lighthouse on an isolated rock at Livorno. In France the Roman tower at Boulogne was repaired by the emperor Charlemagne in 800. It lasted until 1644, when it collapsed owing to undermining of the cliff. The most famous French lighthouse of this period was one on the small island of Cordouan in the estuary of the Gironde River near Bordeaux. The original was built by Edward the Black Prince in the 14th century. In 1584 Louis de Foix, an engineer and architect, undertook the construction of a new light, which was one of the most ambitious and magnificent achievements of its day. It was 135 feet in diameter at the base and 100 feet high, with an elaborate interior of vaulted rooms, richly decorated throughout with a profusion of gilt, carved statuary, and arched doorways. It took 27 years to build, owing to subsidence of the apparently substantial island. By the time the tower was completed in 1611, the island was completely submerged at high water. Cordouan thus became the first lighthouse to be built in the open sea, the true forerunner of such rock structures as the Eddystone Lighthouse.

The influence of the Hanseatic League helped increase the number of lighthouses along the Scandinavian and German coasts. At least 15 lights were established by 1600, making it one of the best-lighted areas of that time.

During this period, lights exhibited from chapels and churches on the coast frequently substituted for lighthouses proper, particularly in Great Britain.

The beginning of the modern era

The development of modern lighthouses can be said to have started about 1700, when improvements in structures and lighting equipment began to appear more rapidly. In particular, that century saw the first construction of towers fully exposed to the open sea. The first of these was Henry Winstanley’s 120-foot-high wooden tower on the notorious Eddystone Rocks off Plymouth, England. Although anchored by 12 iron stanchions laboriously grouted into exceptionally hard red rock, it lasted only from 1699 to 1703, when it was swept away without a trace in a storm of exceptional severity; its designer and builder, in the lighthouse at the time, perished with it. It was followed in 1708 by a second wooden tower, constructed by John Rudyerd, which was destroyed by fire in 1755. Rudyerd’s lighthouse was followed by John Smeaton’s famous masonry tower in 1759. Smeaton, a professional engineer, embodied an important new principle in its construction whereby masonry blocks were dovetailed together in an interlocking pattern. Despite the dovetailing feature, the tower largely relied on its own weight for stability—a principle that required it to be larger at the base and tapered toward the top. Instead of a straight conical taper, though, Smeaton gave the structure a curved profile. Not only was the curve visually attractive, but it also served to dissipate some of the energy of wave impact by directing the waves to sweep up the walls.

Owing to the undermining of the foundation rock, Smeaton’s tower had to be replaced in 1882 by the present lighthouse, constructed on an adjacent part of the rocks by Sir James N. Douglass, engineer-in-chief of Trinity House. In order to reduce the tendency of waves to break over the lantern during severe storms (a problem often encountered with Smeaton’s tower), Douglass had the new tower built on a massive cylindrical base that absorbed some of the energy of incoming seas. The upper portion of Smeaton’s lighthouse was dismantled and rebuilt on Plymouth Hoe, where it still stands as a monument; the lower portion or “stump” can still be seen on the Eddystone Rocks.

Following the Eddystone, masonry towers were erected in similar open-sea sites, which include the Smalls, off the Welsh coast; Bell Rock in Scotland; South Rock in Ireland; and Minots Ledge off Boston, Massachusetts, U.S. The first lighthouse of the North American continent, built in 1716, was on the island of Little Brewster, also off Boston. By 1820 there were an estimated 250 major lighthouses in the world.

Modern Lighthouses

Construction

While masonry and brick continue to be employed in lighthouse construction, concrete and steel are the most widely used materials. Structurally well suited and reasonably cheap, concrete especially lends itself to aesthetically pleasing designs for shore-based lighthouses.

Modern construction methods have considerably facilitated the building of lighthouses in the open sea. On soft ground, the submerged caisson method is used, a system applied first in 1885 to the building of the Roter Sand Lighthouse in the estuary of the Weser River in Germany and then to the Fourteen Foot Bank light in the Delaware Bay, U.S. With this method, a steel caisson or open-ended cylinder, perhaps 40 feet in diameter, is positioned on the seabed. By excavation of sand, it is sunk into the seabed to a depth of possibly 50 feet. At the same time, extra sections are added to the top as necessary so that it remains above high water level. The caisson is finally pumped dry and filled with concrete to form a solid base on which the lighthouse proper is built.

Where the seabed is suitable, it is possible to build a “float out” lighthouse, consisting of a cylindrical tower on a large concrete base that can be 50 feet in diameter. The tower is constructed in a shore berth, towed out to position, and then sunk to the seabed, where the base is finally filled with sand. Weighing 5,000 tons (4.5 million kilograms) or more, these towers rely on their weight for stability and require a leveled, prepared seabed. For greater stability during towing, the cylindrical tower itself often consists of two or more telescopic sections, raised to full height by hydraulic jacks after being founded on the seabed. This design was pioneered largely in Sweden.

Another design, which is more independent of seabed conditions, is the conventional steel-piled structure used for offshore gas and oil rigs. Piles may be driven as deep as 150 feet into the seabed, depending on the underlying strata. The United States has built about 15 light towers of this type, one prominent example being Ambrose Light off New York.

Helicopters are widely employed in the servicing and maintenance of offshore towers, so that modern designs normally include a helipad. In fact, older cylindrical masonry structures of the previous era—including the Eddystone tower—have had helipads fitted above their lanterns.

Illuminants

Wood fires were not discontinued until 1800, though after about 1550 coal, a more compact and longer-burning fuel, was increasingly favoured, particularly in northwestern Europe. A lighthouse in those days could consume 300 tons or more of coal a year. In full blaze, the coal fire was far superior to other forms of lighting, preferred by mariners to oil or candles. The disadvantage of both coal fires and early oil lamps and candles was the prodigious amount of smoke produced, which resulted in rapid blackening of the lantern panes, obscuring the light.

Oil lamps

In 1782 a Swiss scientist, Aimé Argand, invented an oil lamp whose steady smokeless flame revolutionized lighthouse illumination. The basis of his invention was a circular wick with a glass chimney that ensured an adequate current of air up the centre and the outside of the wick for even and proper combustion of the oil. Eventually, Argand burners with as many as 10 concentric wicks were designed. These lamps originally burned fish oil, later vegetable oil, and by 1860 mineral oil. The Argand burner became the principal lighthouse illuminant for more than 100 years.

In 1901 the Briton Arthur Kitson invented the vaporized oil burner, which was subsequently improved by David Hood of Trinity House and others. This burner utilized kerosene vaporized under pressure, mixed with air, and burned to heat an incandescent mantle. The effect of the vaporized oil burner was to increase by six times the power of former oil wick lights. (The principle is still widely used for such utensils as camp stoves and pressure lamps.)

Gas lamps

Early proposals to use coal gas at lighthouses did not meet with great success. A gasification plant at the site was usually impracticable, and most of the lights were too remote for a piped supply. However, acetylene gas, generated in situ from calcium carbide and water, came into use around the turn of the 20th century, and its use increased following the introduction of the dissolved acetylene process, which by dissolving the acetylene in acetone made it safe to compress for storage.

Acetylene gas as a lighthouse illuminant had a profound influence on the advancement of lighthouse technology, mainly through the work of Gustaf Dalén of Sweden, who pioneered its application between 1900 and 1910. Burned either as an open flame or mixed with air in an incandescent mantle, acetylene produced a light equal to that of oil. Its great advantage was that it could be readily controlled; thus, for the first time automatic unattended lights were possible. Dalén devised many ingenious mechanisms and burners, operating from the pressure of the gas itself, to exploit the use of acetylene. Most of the equipment he designed is still in general use today. One device is an automatic mantle exchanger that brings a fresh mantle into use when the previous one burns out. Another, economizing on gas, was the “sun valve,” an automatic day-night switch capable of extinguishing the light during the day. The switch utilized the difference in heat-absorbing properties between a dull black surface and a highly polished one, producing a differential expansion arranged by suitable mechanical linkage to control the main gas valve.

The acetylene system facilitated the establishment of many automatic unattended lighthouses in remote and inaccessible locations, normally requiring only an annual visit to replenish the storage cylinders and overhaul the mechanism. Liquefied petroleum gas, such as propane, has also found use as an illuminant, although both oil and gas lamps have largely been superseded by electricity.

Electric lamps

Electric illumination in the form of carbon arc lamps was employed at lighthouses at an early date, even while oil lamps were still in vogue. The first installation was at South Foreland, England, in 1858, followed by a number of others. The majority of these, however, were eventually converted to oil, since the early arc lamps were difficult to control and costly to operate. In 1913 the Helgoland Lighthouse in the North Sea off Germany was equipped with arc lamps and searchlight mirrors to give a light of 38 million candlepower, making it the most powerful lighthouse in the world at that time.

The electric-filament lamp, which came into general use in the 1920s, is now the standard illuminant. Power output ranges from about 1,500 watts for the largest structures down to about 5 watts for buoys and minor beacons. Most lamps are of the tungsten-halogen type for better efficiency and longer life. As new types of electric lamps become available—for example, compact source discharge tube lamps—they are adopted for lighthouse use wherever suitable.

Optical equipment

Paraboloidal mirrors

With the advent of the Argand burner, a reliable and steady illuminant, it became possible to develop effective optical apparatuses for increasing the intensity of the light. In the first equipment of this type, known as the catoptric system, paraboloidal reflectors concentrated the light into a beam. In 1777 William Hutchinson of Liverpool, England, produced the first practical mirrors for lighthouses, consisting of a large number of small facets of silvered glass set in a plaster cast molded to a paraboloid form. More generally, shaped metal reflectors were used, silvered or highly polished. These were prone, however, to rapid deterioration from heat and corrosion; the glass facet reflector, although not as efficient, lasted longer. The best metallic reflectors available in 1820 were constructed of heavily silvered copper in the proportion of 6 ounces of silver to 16 ounces of copper (compared with the 0.5 ounce of silver to 16 ounces of copper commonly used for plated tableware of the period). With such heavy plating, cleaning cloths were kept for subsequent recovery of the silver. These mirrors could increase the intensity of an Argand burner, nominally about five candlepower, almost 400 times.

Although the mirror could effectively concentrate the light into an intense beam, it was necessary to rotate it to make it visible from any direction. This produced the now familiar revolving lighthouse beam, with the light appearing as a series of flashes. Mariners were not favourably disposed to these early flashing lights, contending that a fixed steady light was essential for a satisfactory bearing. However, the greatly increased intensity and the advantage of using a pattern of flashes to identify the light gradually overcame their objections. The first revolving-beam lighthouse was at Carlsten, near Marstrand, Sweden, in 1781.

Rectangular and drum lenses

In 1821 Augustin Fresnel of France produced the first apparatus using the refracting properties of glass, now known as the dioptric system, or Fresnel lens. On a lens panel he surrounded a central bull’s-eye lens with a series of concentric glass prismatic rings. The panel collected light emitted by the lamp over a wide horizontal angle and also the light that would otherwise escape to the sky or to the sea, concentrating it into a narrow, horizontal pencil beam. With a number of lens panels rotating around the lamp, he was then able in 1824 to produce several revolving beams from a single light source, an improvement over the mirror that produces only a single beam. To collect more of the light wasted vertically, he added triangular prism sections above and below the main lens, which both refracted and reflected the light. By doing this he considerably steepened the angle of incidence at which rays shining up and down could be collected and made to emerge horizontally. Thus emerged the full Fresnel catadioptric system, the basis of all lighthouse lens systems today. To meet the requirement for a fixed all-around light, in 1836 English glassmaker William Cookson modified Fresnel’s principle by producing a cylindrical drum lens, which concentrated the light into an all-around fan beam. Although not as efficient as the rectangular panel, it provided a steady, all-around light. Small drum lenses, robust and compact, are widely used today for buoy and beacon work, eliminating the complication of a rotating mechanism; instead of revolving, their lights are flashed on and off by an electronic code unit.

Prior to Fresnel’s invention the best mirror systems could produce a light of about 20,000 candlepower with an Argand burner. The Fresnel lens system increased this to 80,000 candlepower, roughly equivalent to a modern automobile headlamp; with the pressure oil burner, intensities of up to 1,000,000 candlepower could be achieved. For a light of this order, the burner mantle would measure 4 inches (100 millimetres) in diameter. The rotating lens system would have four large Fresnel glass lens panels, 12 feet high, mounted about four feet from the burner on a revolving lens carriage. The lens carriage would probably weigh five tons, about half of it being the weight of the glass alone. The rotating turntable would float in a circular cast-iron trough containing mercury. With this virtually frictionless support bearing, the entire assembly could be smoothly rotated by weight-driven clockwork. If the illuminant was acetylene gas, the lens rotation could be driven by gas pressure.

Installations of this type are still in common use, although many have been converted to electric lamps with electric-motor drives. Modern lens equipment of the same type is much smaller, perhaps 30 inches (75 centimetres) high, mounted on ball bearings and driven by an electric motor. With a 250-watt lamp, illumination of several hundred thousand candlepower can be readily obtained. Lens panels can be molded in transparent plastic, which is lighter and cheaper. Drum lenses are also molded in plastic. In addition, with modern techniques, high-quality mirrors can be produced easily and cheaply.

Intensity, visibility, and character of lights

Geographic range and luminous range

The luminous intensity of a light, or its candlepower, is expressed in international units called candelas. Intensities of lighthouse beams can vary from thousands to millions of candelas. The range at which a light can be seen depends upon atmospheric conditions and elevation. Since the geographic horizon is limited by the curvature of the Earth, it can be readily calculated for any elevation by standard geometric methods. In lighthouse work the observer is always assumed to be at a height of 15 feet, although on large ships the observer may be 40 feet above the sea. Assuming a light at a height of 100 feet, the range to an observer 15 feet above the sea will be about 16 nautical miles. This is known as the geographic range of the light. (One nautical mile, the distance on the Earth’s surface traversed by one minute of arc of latitude, is equivalent to 1.15 statute miles or 1.85 kilometres.)
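The geographic range quoted above can be checked with a short calculation. The sketch below is illustrative only: it assumes the rule of thumb found in navigation tables, in which the distance to the sea horizon in nautical miles is about 1.17 times the square root of the height in feet (a coefficient that includes average atmospheric refraction). The function names are hypothetical.

```python
import math

# Assumed coefficient (not from the text): nautical miles per square root
# of a foot of height, including average atmospheric refraction.
HORIZON_COEFF = 1.17


def horizon_distance_nm(height_ft: float) -> float:
    """Distance to the sea horizon, in nautical miles, from a height in feet."""
    if height_ft < 0:
        raise ValueError("height must be non-negative")
    return HORIZON_COEFF * math.sqrt(height_ft)


def geographic_range_nm(light_height_ft: float,
                        observer_height_ft: float = 15.0) -> float:
    """Geographic range: the light's horizon distance plus the observer's."""
    return (horizon_distance_nm(light_height_ft)
            + horizon_distance_nm(observer_height_ft))


if __name__ == "__main__":
    # Light at 100 feet, observer at the conventional 15 feet.
    print(round(geographic_range_nm(100.0), 1))        # 16.2
    # Same light seen from 40 feet up on a large ship.
    print(round(geographic_range_nm(100.0, 40.0), 1))  # 19.1
```

With the light at 100 feet and the observer at 15 feet, the result is a little over 16 nautical miles, in line with the figure given in the text.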

The luminous range of a light is the limiting range at which the light is visible under prevailing atmospheric conditions and disregarding limitations caused by its height and the Earth’s curvature. A very powerful light, low in position, can thus have a clear-weather luminous range greater than the distance at which it is first seen by the mariner on the horizon. Powerful lights can usually be seen over the horizon because the light is scattered upward by particles of water vapour in the atmosphere; this phenomenon is known as the loom of the light.

Atmospheric conditions have a marked effect on the luminous range of lights. They are defined in terms of a transmission factor, which is expressed as a percentage up to a maximum of 100 percent (representing a perfectly clear atmosphere, never attained in practice). Clear weather in the British Isles corresponds to about 80 percent transmission, but in tropical regions it can rise to 90 percent, increasing the luminous range of a 10,000-candela light from 18 to 28 nautical miles. Conversely, in mist or haze at about 60 percent transmission, a light of 1,000,000 candelas would be necessary to maintain a luminous range of 18 nautical miles. In dense fog, with visibility down to 100 yards or metres, a light of 10,000,000,000 candelas could scarcely be seen at half a nautical mile. Because average clear-weather conditions vary considerably from one region of the world to another, luminous ranges of all lighthouses by international agreement are quoted in an arbitrary standard clear-weather condition corresponding to a daytime meteorological visibility of 10 nautical miles, or 74 percent transmission. This is known as the nominal range of a light. Mariners use conversion tables to determine the actual luminous range in the prevailing visibility.
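The relationship between intensity, transmission, and luminous range can be sketched numerically. The example below is not taken from the text: it assumes Allard's law (illuminance at the eye equals intensity times transmission raised to the distance in nautical miles, divided by the distance squared), a night-time threshold of 0.2 microlux, and a 5 percent contrast threshold for converting meteorological visibility into transmission. All function names are hypothetical, and the results only approximate the rounded figures quoted above.

```python
THRESHOLD_LUX = 2e-7    # assumed night-time threshold at the eye (0.2 microlux)
METRES_PER_NM = 1852.0


def transmission_from_visibility(visibility_nm: float) -> float:
    """Transmission per nautical mile for a given meteorological visibility,
    assuming a 5 percent contrast threshold: T = 0.05 ** (1 / V)."""
    return 0.05 ** (1.0 / visibility_nm)


def illuminance_lux(intensity_cd: float, distance_nm: float,
                    transmission: float) -> float:
    """Allard's law: illuminance produced at a given distance."""
    distance_m = distance_nm * METRES_PER_NM
    return intensity_cd * transmission ** distance_nm / distance_m ** 2


def luminous_range_nm(intensity_cd: float, transmission: float,
                      threshold_lux: float = THRESHOLD_LUX) -> float:
    """Distance at which illuminance falls to the threshold.

    Illuminance decreases steadily with distance, so bisection is enough.
    """
    lo, hi = 1e-6, 1.0
    while illuminance_lux(intensity_cd, hi, transmission) > threshold_lux:
        hi *= 2.0
    for _ in range(100):
        mid = (lo + hi) / 2.0
        if illuminance_lux(intensity_cd, mid, transmission) > threshold_lux:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


if __name__ == "__main__":
    # 10 nautical miles of visibility corresponds to roughly 74 percent.
    print(round(transmission_from_visibility(10.0), 2))
    # A 10,000-candela light at 80 and at 90 percent transmission.
    print(round(luminous_range_nm(10_000, 0.80), 1))
    print(round(luminous_range_nm(10_000, 0.90), 1))
```

Under these assumptions the 10,000-candela light reaches roughly 17 nautical miles at 80 percent transmission and roughly 28 at 90 percent, close to the 18 and 28 quoted in the text. The 10-mile visibility standard also comes out at about 74 percent transmission.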

Because lights of very great intensity yield diminishing returns in operational effectiveness, most very high-powered lights have been abandoned. A maximum of 100,000 candelas, with a clear-weather range of 20 nautical miles, is generally considered adequate. Nevertheless, there are still some very high-powered lights, which for special reasons may have to be visible at a distance in daylight.

Identification

Most lighthouses rhythmically flash or eclipse their lights to provide an identification signal. The particular pattern of flashes or eclipses is known as the character of the light, and the interval at which it repeats itself is called the period. The number of different characters that can be used is restricted by international agreement through the International Association of Lighthouse Authorities in Paris, to which the majority of maritime nations belong. The regulations are too lengthy to quote in full, but essentially a lighthouse may display a single flash, regularly repeated at perhaps 5-, 10-, or 15-second intervals. This is known as a flashing light. Alternatively, it may exhibit groups of two, three, or four flashes, with a short eclipse between individual flashes and a long eclipse of several seconds between successive groups. The whole pattern is repeated at regular intervals of 10 or 20 seconds. These are known as group-flashing lights. In another category, “occulting” lights are normally on and momentarily extinguished, with short eclipses interrupting longer periods of light. Analogous to the flashing mode are occulting and group-occulting characters. A special class of light is the isophase, which alternates eclipses and flashes of exactly equal duration.
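The characters described above can be modelled as a repeating sequence of light and dark intervals. The sketch below is an illustration, not an implementation of the international regulations: the function names and the timings in the example are hypothetical, and the classification rule (flashing when light is shorter than darkness, occulting when it is longer, isophase when a single light interval equals a single dark interval) is a simplification.

```python
import math
from typing import List, Tuple

# One segment of a rhythm: (light_on, duration_in_seconds).
Segment = Tuple[bool, float]


def group_flashing(flashes: int, flash_s: float, short_eclipse_s: float,
                   period_s: float) -> List[Segment]:
    """Build one period of a group-flashing rhythm.

    The long eclipse after the last flash fills the rest of the period.
    """
    if flashes < 1:
        raise ValueError("need at least one flash")
    used = flashes * flash_s + (flashes - 1) * short_eclipse_s
    long_eclipse_s = period_s - used
    if long_eclipse_s <= short_eclipse_s:
        raise ValueError("period too short for a distinct long eclipse")
    rhythm: List[Segment] = []
    for i in range(flashes):
        rhythm.append((True, flash_s))
        is_last = i == flashes - 1
        rhythm.append((False, long_eclipse_s if is_last else short_eclipse_s))
    return rhythm


def period_seconds(rhythm: List[Segment]) -> float:
    """Length of one full repetition of the character."""
    return sum(duration for _, duration in rhythm)


def classify(rhythm: List[Segment]) -> str:
    """Rough classification by total light time versus total dark time."""
    light = sum(d for on, d in rhythm if on)
    dark = sum(d for on, d in rhythm if not on)
    if dark == 0:
        return "fixed"
    if len(rhythm) == 2 and math.isclose(light, dark):
        return "isophase"
    return "flashing" if light < dark else "occulting"


if __name__ == "__main__":
    # Hypothetical character: three flashes repeated every 20 seconds.
    rhythm = group_flashing(3, 0.5, 1.5, 20.0)
    print(period_seconds(rhythm), classify(rhythm))
```

Representing the character as explicit segments keeps the period and the light-to-dark balance easy to compute, which is all the classification here relies on.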

Steadily burning lights are called fixed lights. For giving mariners accurate directional information in ports, harbours, and estuarial approaches, fixed directional lights display sharply defined red and green sectors. Another sensitive and very accurate method of giving directional instruction is by range lights, which are two fixed lights of different elevation located about half a nautical mile apart. The navigator steers the vessel to keep the two lights aligned one above the other. Laser lights are also employed in this role.

Another use for fixed lights is the control of shipping at harbour entrances. A traffic signal consists of a vertical column of high-powered red, green, and yellow projector lights that are visible in daylight.

The daymark requirement of a lighthouse is also important; lighthouse structures are painted to stand out against the prevailing background. Shore lighthouses are usually painted white for this purpose, but in the open sea or against a light background conspicuous bands of contrasting colours, usually red or black, are utilized.

Sound signals

The limitations of purely visual navigation very early led to the idea of supplementary audible warning in lighthouses. The first sound signals were explosive. At first cannon were used, and later explosive charges were attached to retractable booms above the lantern and detonated electrically. Sometimes the charges contained magnesium in order to provide an accompanying bright flare. Such signals could be heard up to four nautical miles away. Bells also were used, the striker being actuated by weight-driven clockwork or by a piston driven by compressed gas (usually carbon dioxide). Some bells were very large, weighing up to one ton.

Compressed air

About the beginning of the 20th century, compressed air fog signals, which sounded a series of blasts, were developed. The most widely used were the siren and the diaphone. The siren consisted of a slotted rotor revolving inside a slotted stator that was located at the throat of a horn. The diaphone worked on the same principle but used a slotted piston reciprocating in a cylinder with matching ports. The largest diaphones could be heard under good conditions up to eight nautical miles away. Operating pressures were at 2 to 3 bars (200 to 300 kilopascals), and a large diaphone could consume more than 50 cubic feet (approximately 1.5 cubic metres) of air per second. This required a large and powerful compressing plant, 50 horsepower or more, with associated air-storage tanks.

A later compressed-air signal was the tyfon. Employing a metal diaphragm vibrated by differential air pressure, it was more compact and efficient than its predecessors.

Electricity