Math Is Fun Forum


#26 Re: Guestbook » hey » 2007-04-26 07:28:55

Are you learning about simplicial complexes for homology theory or for their own sake? And you don't have to answer this question if you find it too personal, but what do you do for a living, Jane?

#27 Re: Puzzles and Games » Janes puzzles » 2007-04-25 09:37:21

I'll be back later for more... class time.

#28 Re: Help Me ! » Matrix » 2007-04-23 20:44:50

No. Consider the identity matrix I and its negative -I.

They are both invertible (they are their own inverses), but I + (-I) = O, and we know the zero matrix is not invertible. Kind of a simple example, but it works.
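A quick numerical check of this counterexample, as a minimal sketch: it uses plain nested lists for 2×2 matrices, and the helper names `det2` and `add` are our own, chosen for illustration. A 2×2 matrix is invertible exactly when its determinant is nonzero.

```python
def det2(m):
    """Determinant of a 2x2 matrix given as a list of rows."""
    return m[0][0] * m[1][1] - m[0][1] * m[1][0]

def add(a, b):
    """Entrywise sum of two 2x2 matrices."""
    return [[a[i][j] + b[i][j] for j in range(2)] for i in range(2)]

I = [[1, 0], [0, 1]]
neg_I = [[-1, 0], [0, -1]]

print(det2(I), det2(neg_I))  # 1 1: both nonzero, so both matrices are invertible
print(add(I, neg_I))         # [[0, 0], [0, 0]]: the sum is the zero matrix
print(det2(add(I, neg_I)))   # 0: the zero matrix is not invertible
```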

#29 Re: Help Me ! » algebra 2 help » 2007-04-23 14:41:01

Set the polynomials equal to each other and bring everything to one side, then factor:

P(x) = Q(x)

x³-3x²+2 = x²-6x+11

x³ - 4x² + 6x - 9 = 0

(x - 3)(x² - x + 3) = 0

This gives x = 3 as the only real solution, since x² - x + 3 has discriminant (-1)² - 4(1)(3) = -11 < 0 and so has no real zeros.
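The factoring and the discriminant argument can be double-checked in a few lines of Python. This is a minimal sketch; `poly_mul` is a hypothetical helper that multiplies polynomials stored as coefficient lists (highest degree first).

```python
def poly_mul(p, q):
    """Multiply polynomials given as coefficient lists, highest degree first."""
    out = [0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def P(x):
    return x**3 - 3 * x**2 + 2

def Q(x):
    return x**2 - 6 * x + 11

# (x - 3)(x^2 - x + 3) should expand back to x^3 - 4x^2 + 6x - 9
print(poly_mul([1, -3], [1, -1, 3]))  # [1, -4, 6, -9]

# Discriminant b^2 - 4ac of x^2 - x + 3
a, b, c = 1, -1, 3
print(b * b - 4 * a * c)  # -11, negative, so no real roots

# x = 3 really does make the two polynomials equal
print(P(3), Q(3))  # 2 2
```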

#30 Re: Help Me ! » Matrix » 2007-04-23 11:21:18

Quote: "I've never understood why this is so in the first place. I would have thought it simple to infer that since AB ≠ BA, you would automatically have (AB)C ≠ A(BC), but of course, it's not the case."

What does the noncommutativity of matrix multiplication have to do with the associativity? BA doesn't show up in (AB)C = A(BC).

Associativity means that (AB)C = A(BC): the order in which the products are carried out does not matter, as long as the order of the operands doesn't change. From this it follows (by induction on the number of factors) that (AB)CD = A(BC)D = AB(CD) = (ABC)D = A(BCD); we can multiply in any order (A and B first, or B and C first, etc.), but we cannot reorder the operands (A, B, C, and D).

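If you really don't believe it, take the product of r matrices $A_k$ (where each $A_k$ has dimension $n_k \times n_{k+1}$), denote the elements of $A_k$ by $a^{(k)}_{ij}$, and write out what the product would be:

$$(A_1 A_2 \cdots A_r)_{i_1 i_{r+1}} = \sum_{i_2=1}^{n_2} \cdots \sum_{i_r=1}^{n_r} a^{(1)}_{i_1 i_2} a^{(2)}_{i_2 i_3} \cdots a^{(r)}_{i_r i_{r+1}}.$$

If we take, for example, $(A_1 A_2) A_3 \cdots A_r$, this sum becomes

$$\sum_{i_2=1}^{n_2} \cdots \sum_{i_r=1}^{n_r} \left(a^{(1)}_{i_1 i_2} a^{(2)}_{i_2 i_3}\right) a^{(3)}_{i_3 i_4} \cdots a^{(r)}_{i_r i_{r+1}} = \sum_{i_2=1}^{n_2} \cdots \sum_{i_r=1}^{n_r} a^{(1)}_{i_1 i_2} a^{(2)}_{i_2 i_3} \cdots a^{(r)}_{i_r i_{r+1}}$$

by the distributive law and the associativity of scalar multiplication (which I am sure you do not dispute), and similarly for other placements of parentheses: they all reduce to parentheses around the scalar elements in the sum, which can be moved or removed as you please.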
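To see the index formula in action, here is a minimal Python sketch (the helpers `matmul` and `chain_entry` are our own, not from any library) that compares (AB)C, A(BC), and the unparenthesized r-fold sum over the inner indices, using small integer matrices of compatible but non-square shapes.

```python
from itertools import product

def matmul(a, b):
    """Product of matrices stored as lists of rows."""
    n, m, p = len(a), len(b), len(b[0])
    assert len(a[0]) == m, "inner dimensions must agree"
    return [[sum(a[i][k] * b[k][j] for k in range(m)) for j in range(p)]
            for i in range(n)]

def chain_entry(mats, i, j):
    """Entry (i, j) of A_1 A_2 ... A_r, computed directly as the sum over
    all inner indices i_2, ..., i_r, with no parentheses at all."""
    inner_ranges = [range(len(m)) for m in mats[1:]]  # n_2, ..., n_r
    total = 0
    for inner in product(*inner_ranges):
        idx = (i, *inner, j)
        term = 1
        for k, m in enumerate(mats):
            term *= m[idx[k]][idx[k + 1]]
        total += term
    return total

A = [[1, 2, 0], [3, -1, 4]]    # 2 x 3
B = [[2, 1], [0, 5], [-2, 3]]  # 3 x 2
C = [[1, -1, 2], [4, 0, 1]]    # 2 x 3

left = matmul(matmul(A, B), C)   # (AB)C
right = matmul(A, matmul(B, C))  # A(BC)
direct = [[chain_entry([A, B, C], i, j) for j in range(3)] for i in range(2)]
print(left == right == direct)   # True
```

Integer entries keep the comparison exact, so the equality is not an artifact of floating-point rounding.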

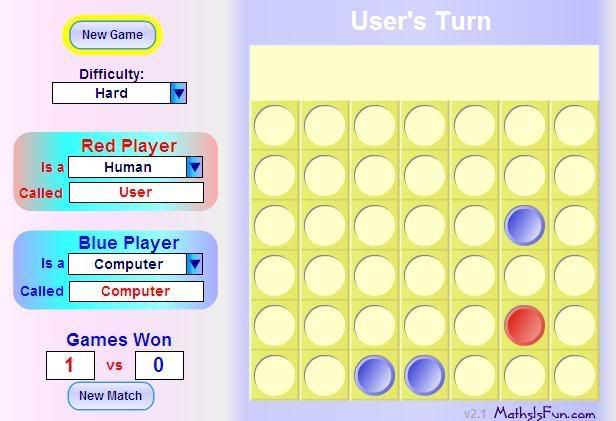

#31 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-22 15:28:43

In the middle of the game Time ≈ 5000 for me.

#32 Re: Help Me ! » show that » 2007-04-21 07:55:21

Haha, I looked at that kind of funny when you wrote it, and wondered if I had helped at all when you arrived at a trivially obvious conclusion. Good work, team.

#33 Re: Help Me ! » Algebra equation problem » 2007-04-21 07:50:04

First of all, I think the poster is in an algebra class, not a linear algebra class. Here is how you would solve this problem in an algebra class:

We have the equations 4x + 7y = 10 and 2x + 3y = 3. If we multiply the second equation by -2 (which makes it -4x - 6y = -6) and add it to the first, look what happens!

4x + 7y = 10

+(-4x - 6y = -6)

y = 4.

The x's cancelled, and now we know what y is. We can then put y = 4 into one of our equations to find x. Let's put it in the second equation:

2x + 3y = 3

2x + 3(4) = 3

2x + 12 = 3

2x = -9

x = -9/2.

So the solution is x = -9/2, y = 4.
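The same two steps (scale the second equation by -2 and add, then back-substitute) can be replayed in Python. This is a minimal sketch using exact fractions so that -9/2 comes out exactly; storing each equation as `[coef_x, coef_y, rhs]` is our own choice of representation.

```python
from fractions import Fraction

# 4x + 7y = 10 and 2x + 3y = 3, stored as [coef_x, coef_y, rhs]
eq1 = [Fraction(4), Fraction(7), Fraction(10)]
eq2 = [Fraction(2), Fraction(3), Fraction(3)]

# Multiply the second equation by -2 and add it to the first.
combined = [a + (-2) * b for a, b in zip(eq1, eq2)]
# combined now holds 0x + 1y = 4: the x terms cancelled

y = combined[2] / combined[1]
# Back-substitute into the second equation: 2x + 3y = 3
x = (eq2[2] - eq2[1] * y) / eq2[0]
print(x, y)  # -9/2 4
```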

And yes, inverting an n×n matrix generally takes more work than row reducing: for large n, computing the inverse costs roughly three times as many arithmetic operations as solving the system directly by elimination. Even in the case of a 2×2 matrix, row reducing will only take one or two simple steps (basically scaling a row and adding), while using inverses requires you to calculate the inverse (sure, it's a simple formula) and then multiply this inverse on the right by the b vector. It's a little more work than just adding rows. Homework assignments also commonly include singular matrices, which have no inverse at all; row reduction still works on those and tells you whether the system has no solutions or infinitely many.
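Row reduction for a general n×n system can be sketched as follows. This is our own minimal helper `solve` (Gauss-Jordan elimination on the augmented matrix with exact fractions), not a library routine; it never forms an inverse, and it reports a singular matrix by returning None instead of failing partway through.

```python
from fractions import Fraction

def solve(a, b):
    """Solve a x = b by Gauss-Jordan elimination with exact fractions.

    Returns the solution as a list, or None if the matrix is singular
    (so the system has no unique solution)."""
    n = len(a)
    # Augmented matrix [a | b]
    m = [[Fraction(v) for v in row] + [Fraction(rhs)] for row, rhs in zip(a, b)]
    for col in range(n):
        # Find a row at or below `col` with a nonzero entry in this column.
        pivot = next((r for r in range(col, n) if m[r][col] != 0), None)
        if pivot is None:
            return None  # singular: no unique solution
        m[col], m[pivot] = m[pivot], m[col]
        # Clear this column from every other row.
        for r in range(n):
            if r != col and m[r][col] != 0:
                factor = m[r][col] / m[col][col]
                m[r] = [x - factor * y for x, y in zip(m[r], m[col])]
    return [m[i][n] / m[i][i] for i in range(n)]

print(solve([[4, 7], [2, 3]], [10, 3]))  # [Fraction(-9, 2), Fraction(4, 1)]
print(solve([[1, 2], [2, 4]], [3, 6]))   # None: the matrix is singular
```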

#34 Re: Help Me ! » show that » 2007-04-20 09:49:07

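The Hessian is like the Jacobian, but its elements are second-order derivatives. (Without further qualification both are determinants; we can also consider the Hessian or Jacobian matrix, which are useful as the matrices of certain transformations, such as a change of coordinates.) The Hessian of f(x₁, ..., xₙ) is defined as

$$H(f) = \det\begin{pmatrix} \dfrac{\partial^2 f}{\partial x_1^2} & \cdots & \dfrac{\partial^2 f}{\partial x_1 \partial x_n} \\ \vdots & \ddots & \vdots \\ \dfrac{\partial^2 f}{\partial x_n \partial x_1} & \cdots & \dfrac{\partial^2 f}{\partial x_n^2} \end{pmatrix}.$$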

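Suppose $a = (a_1, \ldots, a_n)$ is a critical point of f, and let $D_k$ be the determinant of the upper-left $k \times k$ block of the Hessian matrix at a (so $D_n$ is the Hessian itself). Then the following hold:

- If $D_k > 0$ for every k, then a is a local minimum of f.
- If $(-1)^k D_k > 0$ for every k, then a is a local maximum of f.
- If $D_n \neq 0$ and neither of these sign patterns holds, then a is a saddle point of f.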

In more advanced terms, if the Hessian matrix is positive definite at a, then a is a local minimum of f; if it is negative definite at a, then a is a local maximum of f; and if it has both positive and negative eigenvalues at a, then a is a saddle point of f. This is the second derivative test for functions of more than one variable. Note that the test is inconclusive when the Hessian matrix is singular at a and has no eigenvalues of opposite sign: for example, x⁴ + y⁴ and x⁴ - y⁴ both have a zero Hessian matrix at the origin, but the first has a minimum there and the second a saddle point.
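For two variables the test reduces to the sign of D = f_xx f_yy - f_xy² (the determinant of the 2×2 Hessian matrix) and of f_xx. Here is a minimal sketch of that special case; the function name `classify` is our own, and it expects the second partial derivatives already evaluated at the critical point.

```python
def classify(fxx, fxy, fyy):
    """Second derivative test for f(x, y) at a critical point.

    The arguments are the second partial derivatives evaluated there;
    D is the determinant of the 2x2 Hessian matrix."""
    D = fxx * fyy - fxy ** 2
    if D > 0:
        # D > 0 forces fxx != 0, and both eigenvalues share the sign of fxx.
        return "local minimum" if fxx > 0 else "local maximum"
    if D < 0:
        return "saddle point"  # eigenvalues of opposite sign
    return "inconclusive"      # D == 0: the Hessian matrix is singular

# f = x^2 + y^2 at (0, 0): fxx = 2, fxy = 0, fyy = 2
print(classify(2, 0, 2))   # local minimum
# f = x^2 + 3xy + y^2 at (0, 0): fxx = 2, fxy = 3, fyy = 2
print(classify(2, 3, 2))   # saddle point
# f = x^4 + y^4 at (0, 0): every second partial vanishes
print(classify(0, 0, 0))   # inconclusive
```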

#35 Re: Help Me ! » derivative » 2007-04-20 09:27:04

Imagine that a mother function f(x) is pregnant with her baby function g(x). Then the mother function looks like f(g(x)). Then one day the mother function goes into labor, so at the hospital Doctor Derivative needs to differentiate her to help her give birth. First he differentiates the mother f(x), and then the baby g(x) comes out differentiated as well. The end result looks like this: f'(g(x))g'(x). This process is known as the chain rule.

In other words, (f(g(x)))' = f'(g(x))g'(x), or, writing y = f(u) with u = g(x),

$$\frac{dy}{dx} = \frac{dy}{du}\,\frac{du}{dx}.$$
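A numerical sanity check of the chain rule, as a minimal sketch: the choice f = sin (the mother) and g(x) = x² (the baby) and the evaluation point x = 1.3 are arbitrary examples of ours. The derivative of the composite is approximated with a central difference and compared with f'(g(x))g'(x).

```python
import math

def derivative(h, x, step=1e-6):
    """Central-difference approximation of h'(x)."""
    return (h(x + step) - h(x - step)) / (2 * step)

def f(u):
    return math.sin(u)  # mother function

def g(x):
    return x ** 2       # baby function

x0 = 1.3
numeric = derivative(lambda x: f(g(x)), x0)
chain_rule = math.cos(g(x0)) * 2 * x0  # f'(g(x)) g'(x), with f' = cos and g' = 2x
print(abs(numeric - chain_rule) < 1e-6)  # True
```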

#36 Re: Help Me ! » FreeCell » 2007-04-18 19:42:40

Actually there are some unsolvable games (although they are a "set of measure zero"). Here is a proof.

#37 Re: Help Me ! » confuse » 2007-04-18 13:27:06

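Consider S = (1, 2) ∪ (3, 4), for example. Clearly (1, 2) is not a subset of (3, 4) and vice versa, and no interval contained in S can contain both of them, since such an interval would have to contain [2, 3], which misses S entirely. So S has two component intervals, (1, 2) and (3, 4), and a set can have more than one component interval. Is this clear?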
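For a finite union of open intervals, the component intervals can be found by sorting and merging overlapping pieces. This is a minimal sketch with a hypothetical helper `components`; it assumes every input interval (a, b) is nonempty (a < b). Open intervals that merely touch, like (1, 2) and (2, 3), are not merged, because the shared endpoint belongs to neither.

```python
def components(intervals):
    """Component intervals of a finite union of nonempty open intervals (a, b)."""
    merged = []
    for a, b in sorted(intervals):
        if merged and a < merged[-1][1]:
            # Overlaps the last component: extend it if needed.
            merged[-1] = (merged[-1][0], max(merged[-1][1], b))
        else:
            merged.append((a, b))
    return merged

print(components([(1, 2), (3, 4)]))          # [(1, 2), (3, 4)]
print(components([(1, 3), (2, 4), (5, 6)]))  # [(1, 4), (5, 6)]
```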

#38 Re: This is Cool » Six Dimensional Universe? » 2007-04-18 04:59:14

Yeah, it's probably somewhat intense. But in our class we've talked about topics mentioned in the news article, and professors love to talk about their research interests, so I'm sure I could make an office visit and have anything I don't understand explained. But that's only if I get to actually see the paper. Oh well. The concept of extra time-like dimensions is certainly interesting.

#39 Re: This is Cool » Six Dimensional Universe? » 2007-04-17 20:11:06

Darn, I can't access the actual paper for free. I'd be interested in seeing it. I'll ask my advisor if he has access to it; he's big on mathematical physics like this.

#40 Re: Help Me ! » Integration Help » 2007-04-17 07:24:31

You're almost right... but don't forget your constant of integration!

Depending on your teacher, you might lose a point or two for leaving that out. Do you know how to do partial fractions?

#41 Re: Euler Avenue » Vector spaces. » 2007-04-17 07:20:18

Just a concern: when you say that $\langle \mathbf{u}, \mathbf{v} \rangle = u_1 v_1 + \cdots + u_n v_n$, you are assuming an orthonormal basis in Cartesian coordinates (where the metric tensor is just the identity, $g_{ij} = \delta_{ij}$). Perhaps you should make this assumption clearer. This is certainly fine in an introduction, as the inner product is usually given as the dot product when first introduced (at that stage you are usually not worried about vector spaces that use other inner products). Overall I just feel that the inner product section is a bit shaky. For example, you substitute a fact into your formula out of nowhere without ever stating it for the reader.

#42 Re: Dark Discussions at Cafe Infinity » shooting at Blacksburg Virginia today: Worst in US history » 2007-04-16 11:02:55

I saw on Facebook that you are ok, Ricky. That is good news. Hopefully all of your friends are fine as well.

#43 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-13 20:47:17

Ok good *high five*

(my shortest post ever)

#44 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-13 20:39:57

Are you exaggerating about that 6 to 7 minutes? It would pause noticeably for a few seconds for me, but definitely not for over a minute, or even half a minute. That's outrageous.

#45 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-13 18:39:33

This shouldn't be an issue if you play the game normally, but if you click "new game" while a piece is falling, sometimes the piece will freeze in its slot and float in midair. When you then try to put a piece in that column in the next game, your piece will go where the old one is hovering. I've only gotten it to do this a couple of times, though; it doesn't happen every time. You can use this bug to give the computer an extra turn at the start, but it doesn't really make the game harder.

I just used this: I clicked "new game" at just the right moment while the computer's piece was falling.

#46 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-13 17:30:35

No, this is cheating!

Look at all those pieces he put in before me!

#47 Re: Maths Is Fun - Suggestions and Comments » Four In A Line Flash Version » 2007-04-13 16:50:48

The computer seems to think really hard on five in a line. I was listening to music on my computer and his thinking would make it pause for a second, every time. Maybe it's just my computer.

#48 Re: Maths Is Fun - Suggestions and Comments » Minor Upgrade to Forum (April 07) » 2007-04-13 11:24:23

Earlier we discussed subforums, for forums such as the exercises, which would help organize them a little. Is this a possibility yet?

#49 Re: Maths Is Fun - Suggestions and Comments » Deep Grey is a sorry game » 2007-04-13 08:18:28

Wow, is this game really hard? I went to try it after reading this and won on my first try.

Ok, I think I just found the trick right away, and that's how I won; he hasn't beaten me yet. I just get it down to 5 boxes at the end with my turn to move... I pick 2, and whatever he picks, I will win. I'm sure sometimes this won't be possible, though.
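The trick generalizes. Assuming the rules are that players alternately take 1 or 2 squares and whoever takes the last square wins (inferred from the strategy described above, not confirmed from the game itself), a position is lost for the player to move exactly when the number of squares left is a multiple of 3, so the winning move is always to leave a multiple of 3. A minimal sketch with hypothetical helpers `wins` (brute force) and `best_move` (the rule):

```python
from functools import lru_cache

@lru_cache(maxsize=None)
def wins(remaining):
    """True if the player to move can force a win, under the assumed rules:
    take 1 or 2 squares per turn; whoever takes the last square wins."""
    # With 0 left, the previous player took the last square, so any() is False.
    return any(not wins(remaining - take) for take in (1, 2) if take <= remaining)

def best_move(remaining):
    """Squares to take so that a multiple of 3 is left, or None if
    `remaining` is already a multiple of 3 (a losing position)."""
    take = remaining % 3
    return take if take else None

print(best_move(5))                              # 2, leaving 3 as in the post
print(best_move(3))                              # None
print([n for n in range(1, 13) if not wins(n)])  # [3, 6, 9, 12]
```

This also explains why the trick won't always be available: if the computer hands you a multiple of 3 and keeps doing so, no move wins.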

Also, MathsIsFun, I found a bug in this game. Whenever you click "Choose One" or "Choose Two", you take squares, even if it isn't your turn. There's an easy way to win the game, too.

#50 Re: Help Me ! » Check » 2007-04-12 16:01:31

To explain Ricky's statement a little more, note that

$$(ab)^k = \underbrace{(ab)(ab)\cdots(ab)}_{k \text{ factors}} = abab\cdots ab.$$

We cannot rearrange these terms into $a^k b^k$ if a and b do not commute. Do you see this?
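Matrices give a concrete case where a and b do not commute. In this minimal sketch (the two shear matrices are our own example, and `matmul` is a small helper for 2×2 lists of rows), (AB)² = ABAB and A²B² = AABB come out different.

```python
def matmul(a, b):
    """Product of two 2x2 matrices stored as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(2)) for j in range(2)]
            for i in range(2)]

A = [[1, 1], [0, 1]]
B = [[1, 0], [1, 1]]
AB = matmul(A, B)

print(matmul(AB, AB))                      # (AB)^2 = ABAB: [[5, 3], [3, 2]]
print(matmul(matmul(A, A), matmul(B, B)))  # A^2 B^2 = AABB: [[5, 2], [2, 1]]
print(matmul(A, B) == matmul(B, A))        # False: A and B do not commute
```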